| Citation: |

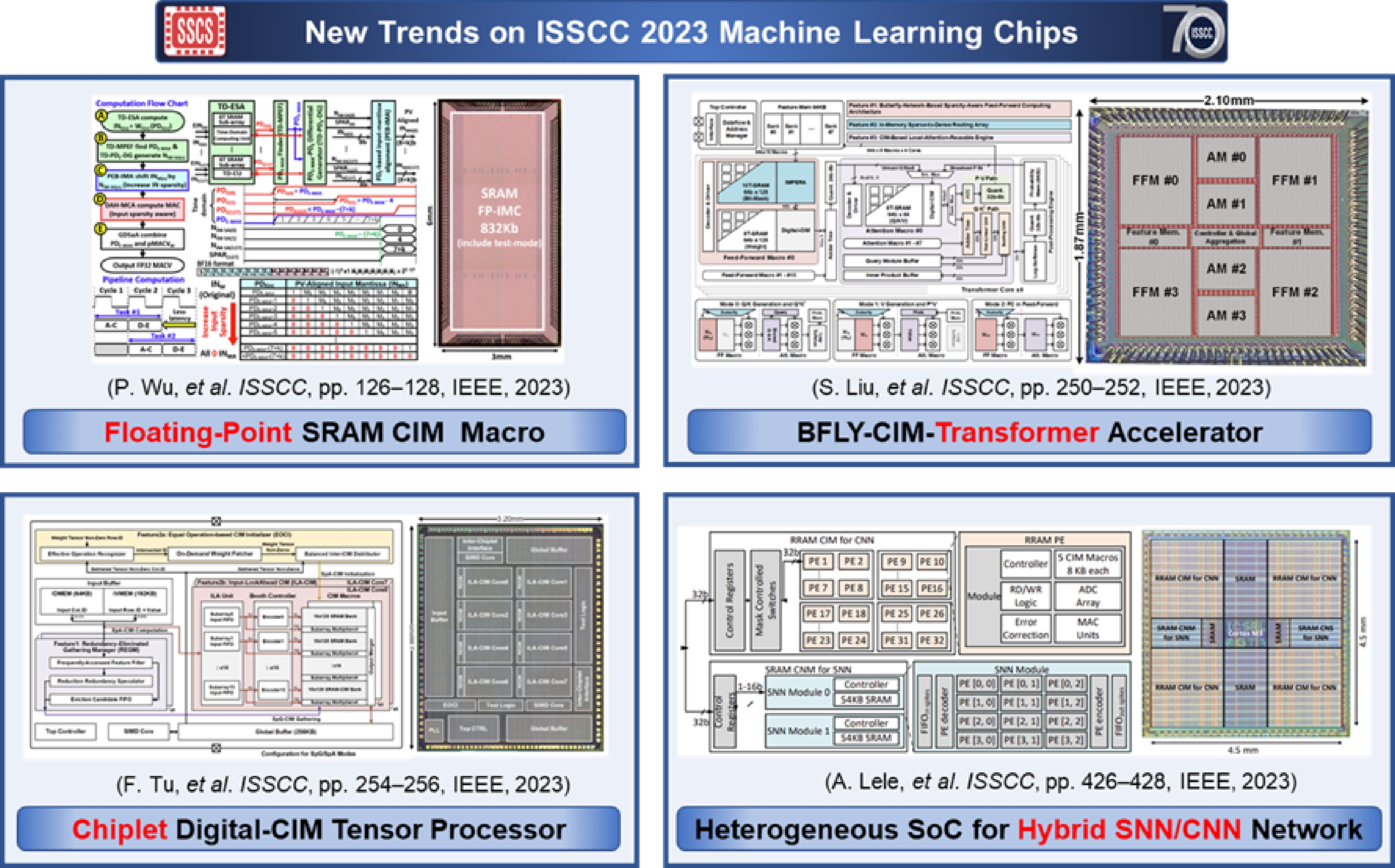

Chen Mu, Jiapei Zheng, Chixiao Chen. Beyond convolutional neural networks computing: New trends on ISSCC 2023 machine learning chips[J]. Journal of Semiconductors, 2023, 44(5): 050203. doi: 10.1088/1674-4926/44/5/050203

****

C Mu, J P Zheng, C X Chen. Beyond convolutional neural networks computing: New trends on ISSCC 2023 machine learning chips[J]. J. Semicond, 2023, 44(5): 050203. doi: 10.1088/1674-4926/44/5/050203

|

Beyond convolutional neural networks computing: New trends on ISSCC 2023 machine learning chips

DOI: 10.1088/1674-4926/44/5/050203




Chen Mu received the B.S. degree from Southeast University, Nanjing, China, in 2020. He is currently pursuing the Ph.D. degree with the State Key Laboratory of Integrated Chips and Systems, Frontier Institute of Chips and Systems, Fudan University, Shanghai, China. His current research interests include computing-in-memory, high-efficiency AI accelerators, and chiplets.

Jiapei Zheng received the B.S. degree in microelectronics from Fudan University in 2021. He is currently pursuing the Ph.D. degree with the State Key Laboratory of Integrated Chips and Systems, Frontier Institute of Chips and Systems, Fudan University, Shanghai, China. His research interests include custom intelligent software-hardware co-designs and intelligent 3-D vision accelerators.

Chixiao Chen received the B.S. and Ph.D. degrees in microelectronics from Fudan University, Shanghai, China, in 2010 and 2015, respectively. From 2016 to 2018, he was a post-doctoral research associate with the University of Washington, Seattle. Since 2019, he has been with Fudan University, Shanghai, China, first as an Assistant Professor and currently as an Associate Professor. He is also an adjunct research associate with the National Key Laboratory of Integrated Chips and Systems, Fudan University. His research interests include mixed-signal integrated circuit design and custom intelligent software-hardware co-designs.