Citation: Cheng Luo, Man-Kit Sit, Hongxiang Fan, Shuanglong Liu, Wayne Luk, Ce Guo. Towards efficient deep neural network training by FPGA-based batch-level parallelism[J]. Journal of Semiconductors, 2020, 41(2): 022403. doi: 10.1088/1674-4926/41/2/022403

Towards efficient deep neural network training by FPGA-based batch-level parallelism

DOI: 10.1088/1674-4926/41/2/022403


Abstract

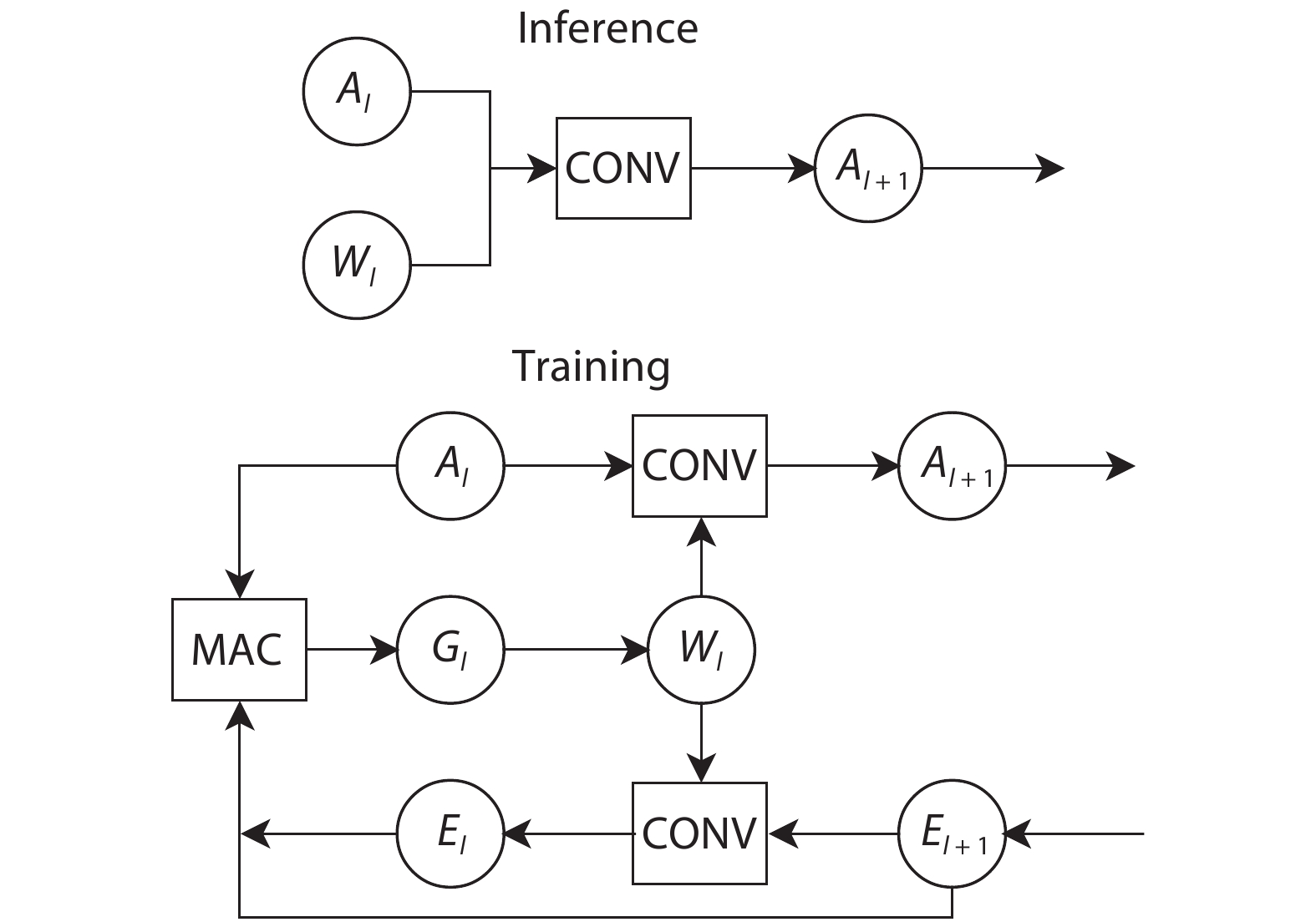

Training deep neural networks (DNNs) requires a significant amount of time and resources to obtain acceptable results, which severely limits their deployment on resource-limited platforms. This paper proposes DarkFPGA, a novel customizable framework to efficiently accelerate the entire DNN training process on a single FPGA platform. First, we explore batch-level parallelism to enable efficient FPGA-based DNN training. Second, we devise a novel hardware architecture optimised by a batch-oriented data pattern and tiling techniques to effectively exploit parallelism. Moreover, an analytical model is developed to determine the optimal design parameters for the DarkFPGA accelerator with respect to a specific network specification and FPGA resource constraints. Our results show that, using 8-bit integers to train VGG-like networks on the CIFAR dataset on the Maxeler MAX5 platform, the accelerator trains about 10 times faster than a CPU and consumes about a third of the energy of GPU training.

Keywords:

- deep neural network

- training

- FPGA

- batch-level parallelism
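
The abstract names batch-level parallelism, a batch-oriented data pattern, and tiling, but gives no detail. The following is only a minimal software sketch of the general idea, not the DarkFPGA architecture itself. It assumes a fully connected layer with integer values, a hypothetical input layout `inputs[i][b]` in which the batch index is innermost, and a hypothetical tile size `batch_tile`. Each weight is fetched once and reused for every sample in the current batch tile; the innermost loop is the one a hardware design could unroll into parallel multiply-accumulate units.

```python
def fc_forward_batch_tiled(weights, inputs, batch_tile):
    """Illustrative fully connected forward pass, tiled over the batch.

    weights[o][i] : integer weight for output o, input i
    inputs[i][b]  : integer activation for input i, sample b (batch innermost)
    batch_tile    : hypothetical number of samples processed per tile
    Returns outputs[o][b].
    """
    n_out = len(weights)
    n_in = len(inputs)
    batch = len(inputs[0])
    outputs = [[0] * batch for _ in range(n_out)]

    for b0 in range(0, batch, batch_tile):       # one tile of the batch at a time
        b1 = min(b0 + batch_tile, batch)
        for o in range(n_out):
            for i in range(n_in):
                w = weights[o][i]                # fetched once per tile
                for b in range(b0, b1):          # candidate for hardware unrolling
                    outputs[o][b] += w * inputs[i][b]
    return outputs


def fc_forward_reference(weights, inputs):
    """Plain sample-by-sample reference used to check the tiled version."""
    n_out, n_in, batch = len(weights), len(inputs), len(inputs[0])
    return [
        [sum(weights[o][i] * inputs[i][b] for i in range(n_in)) for b in range(batch)]
        for o in range(n_out)
    ]


# Small example: 2 inputs, 2 outputs, batch of 3 samples, tile size 2.
example_weights = [[1, 2], [3, 4]]
example_inputs = [[1, 0, 2], [0, 1, 1]]
tiled = fc_forward_batch_tiled(example_weights, example_inputs, batch_tile=2)
reference = fc_forward_reference(example_weights, example_inputs)
print(tiled == reference)
```

In this sketch the tile size bounds how many partial sums must be held at once, which is the kind of trade-off between parallelism and on-chip resources that the abstract says the analytical model is meant to resolve.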


