| Citation: |

Zheng Wang, Libing Zhou, Wenting Xie, Weiguang Chen, Jinyuan Su, Wenxuan Chen, Anhua Du, Shanliao Li, Minglan Liang, Yuejin Lin, Wei Zhao, Yanze Wu, Tianfu Sun, Wenqi Fang, Zhibin Yu. Accelerating hybrid and compact neural networks targeting perception and control domains with coarse-grained dataflow reconfiguration[J]. Journal of Semiconductors, 2020, 41(2): 022401. doi: 10.1088/1674-4926/41/2/022401

Z Wang, L B Zhou, W T Xie, W G Chen, J Y Su, W X Chen, A H Du, S L Li, M L Liang, Y J Lin, W Zhao, Y Z Wu, T F Sun, W Q Fang, Z B Yu, Accelerating hybrid and compact neural networks targeting perception and control domains with coarse-grained dataflow reconfiguration[J]. J. Semicond., 2020, 41(2): 022401. doi: 10.1088/1674-4926/41/2/022401.

|

Accelerating hybrid and compact neural networks targeting perception and control domains with coarse-grained dataflow reconfiguration

DOI: 10.1088/1674-4926/41/2/022401

Abstract

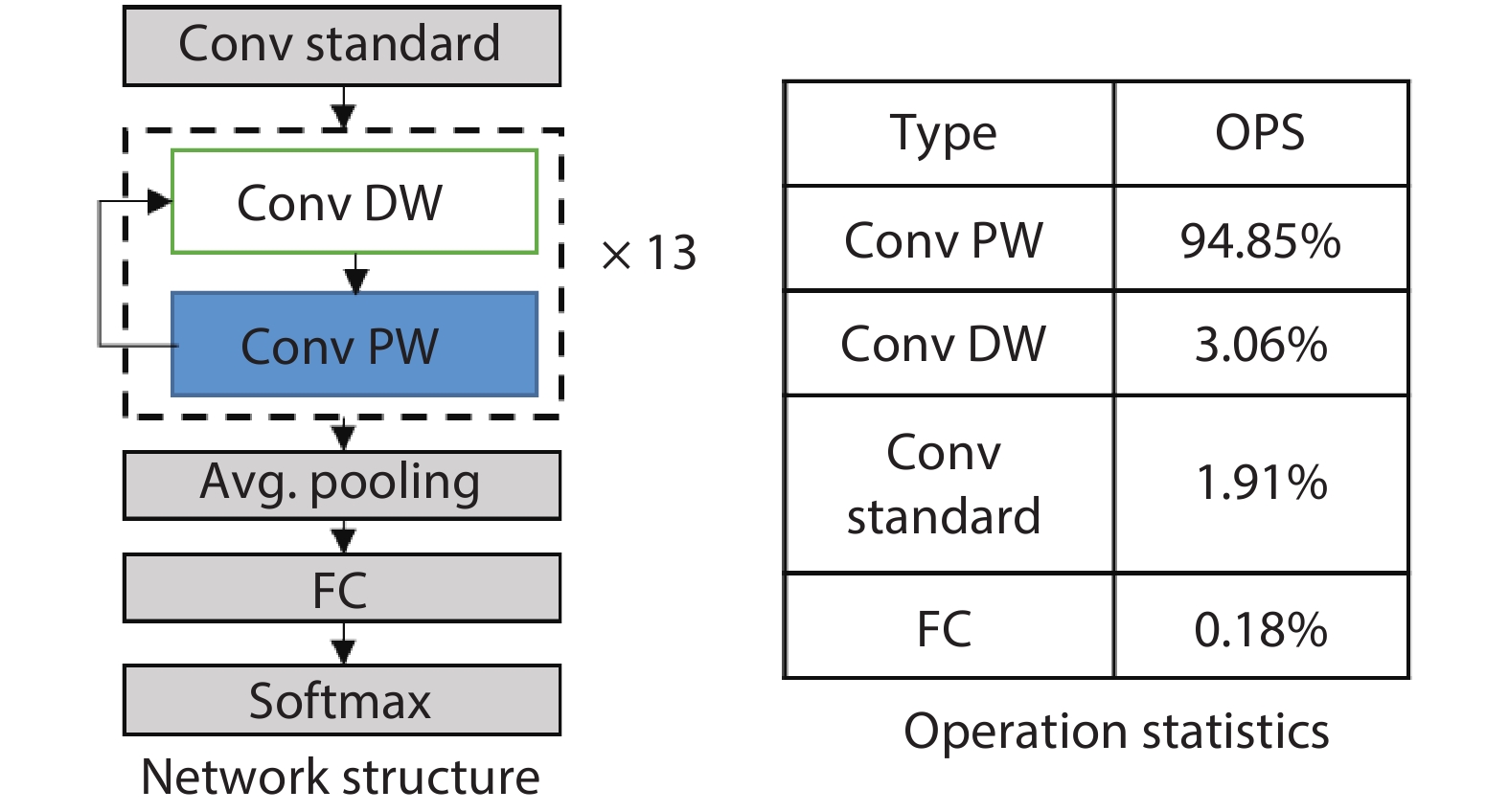

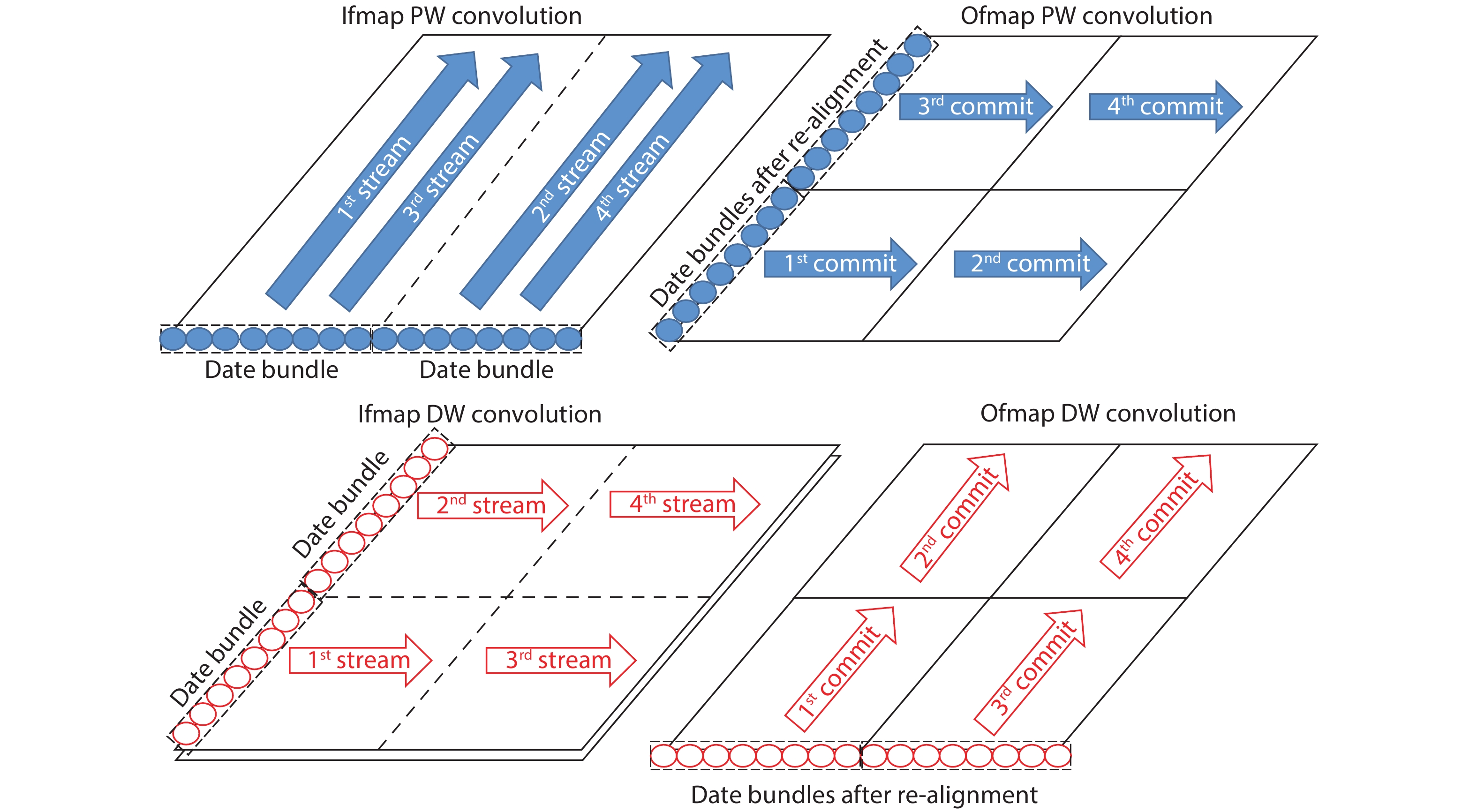

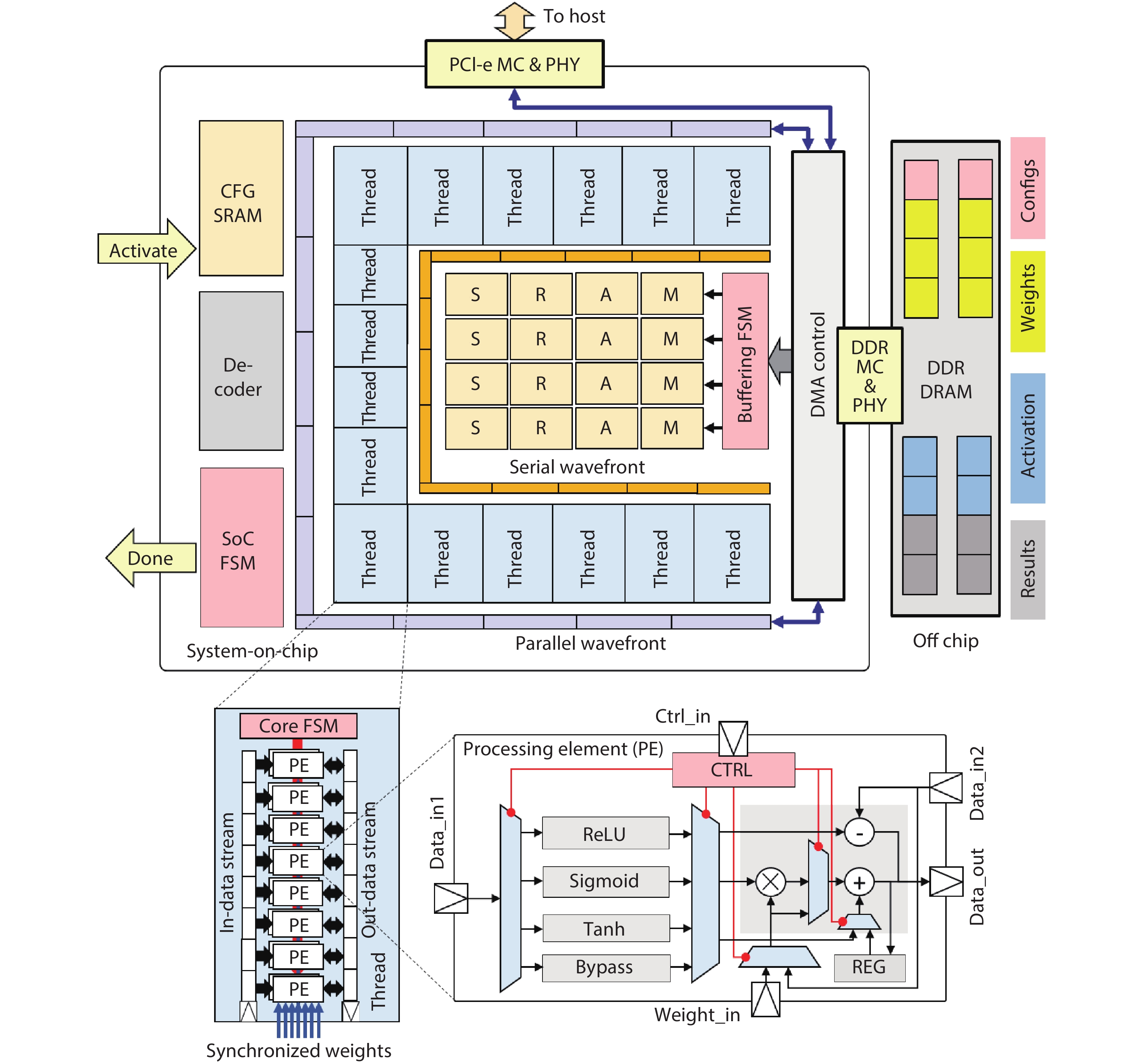

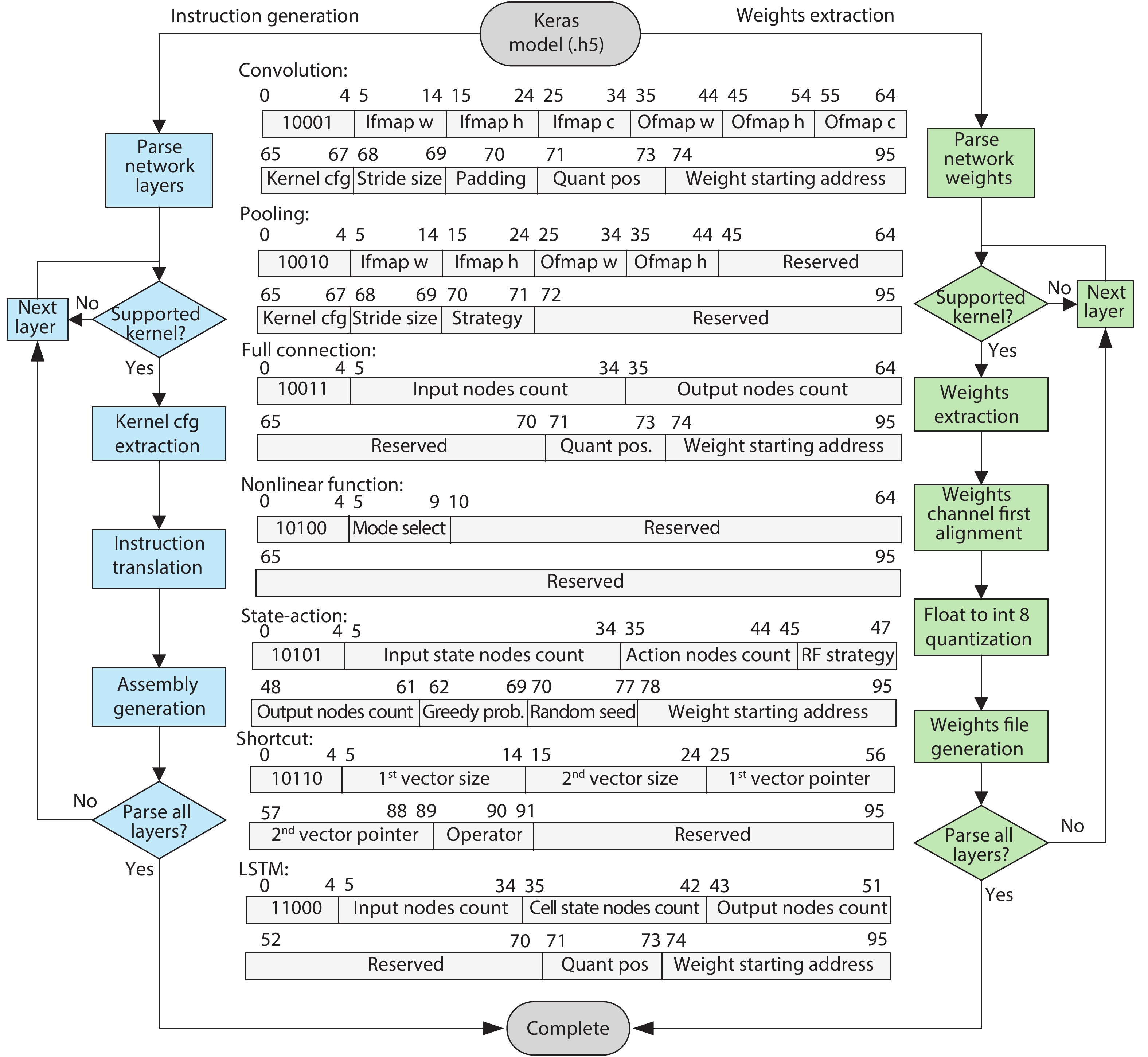

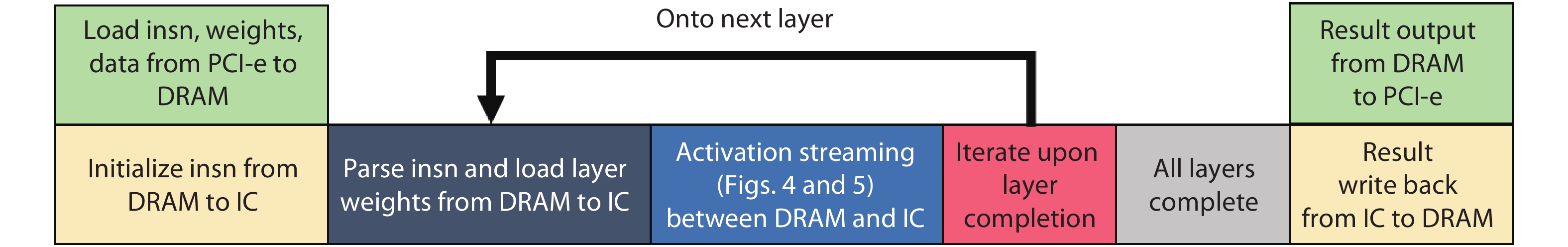

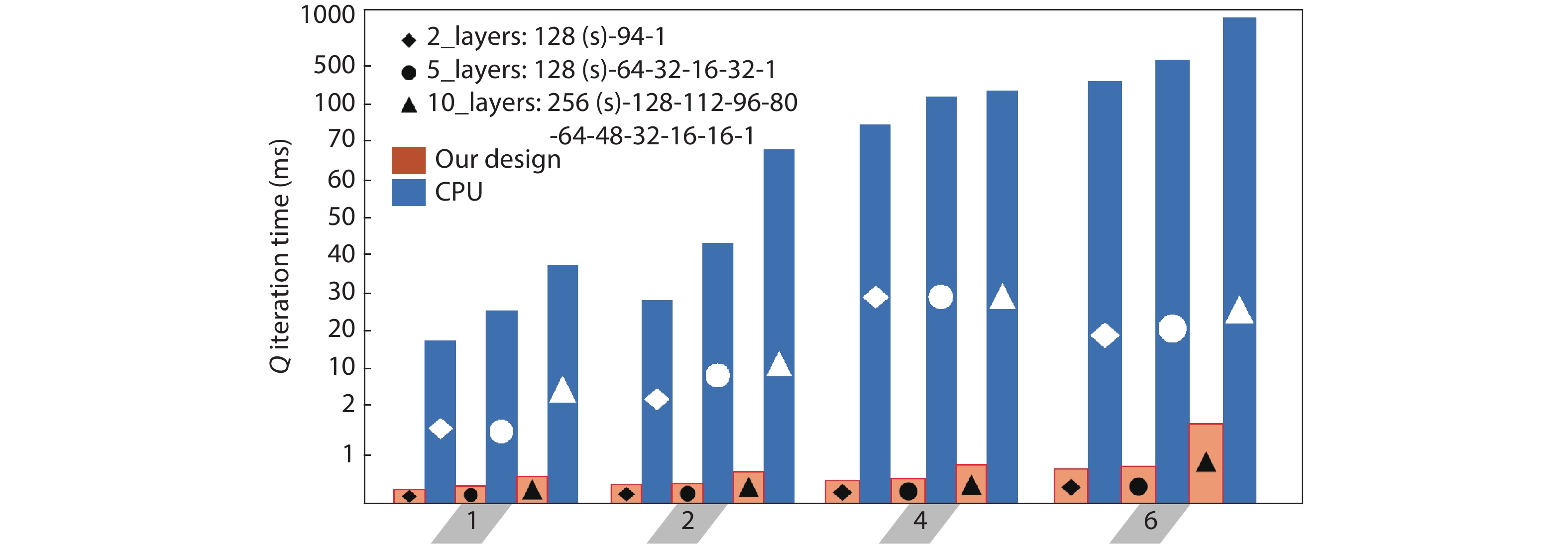

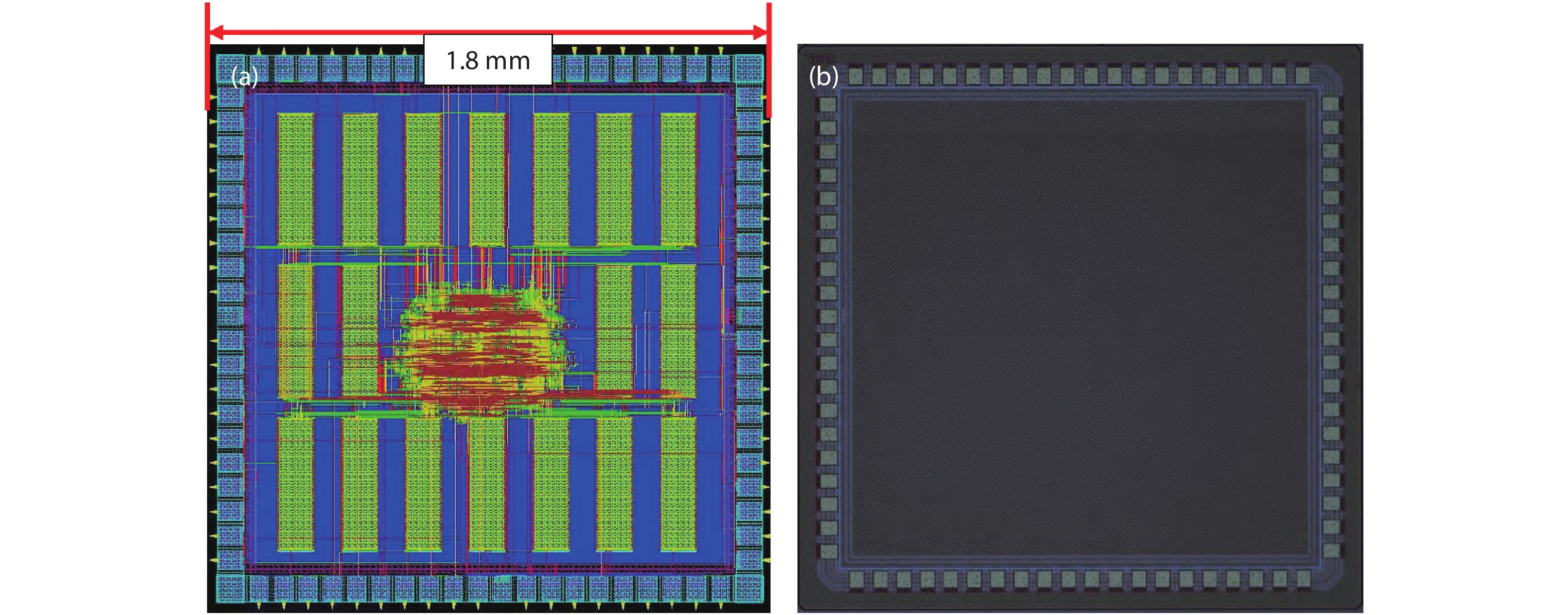

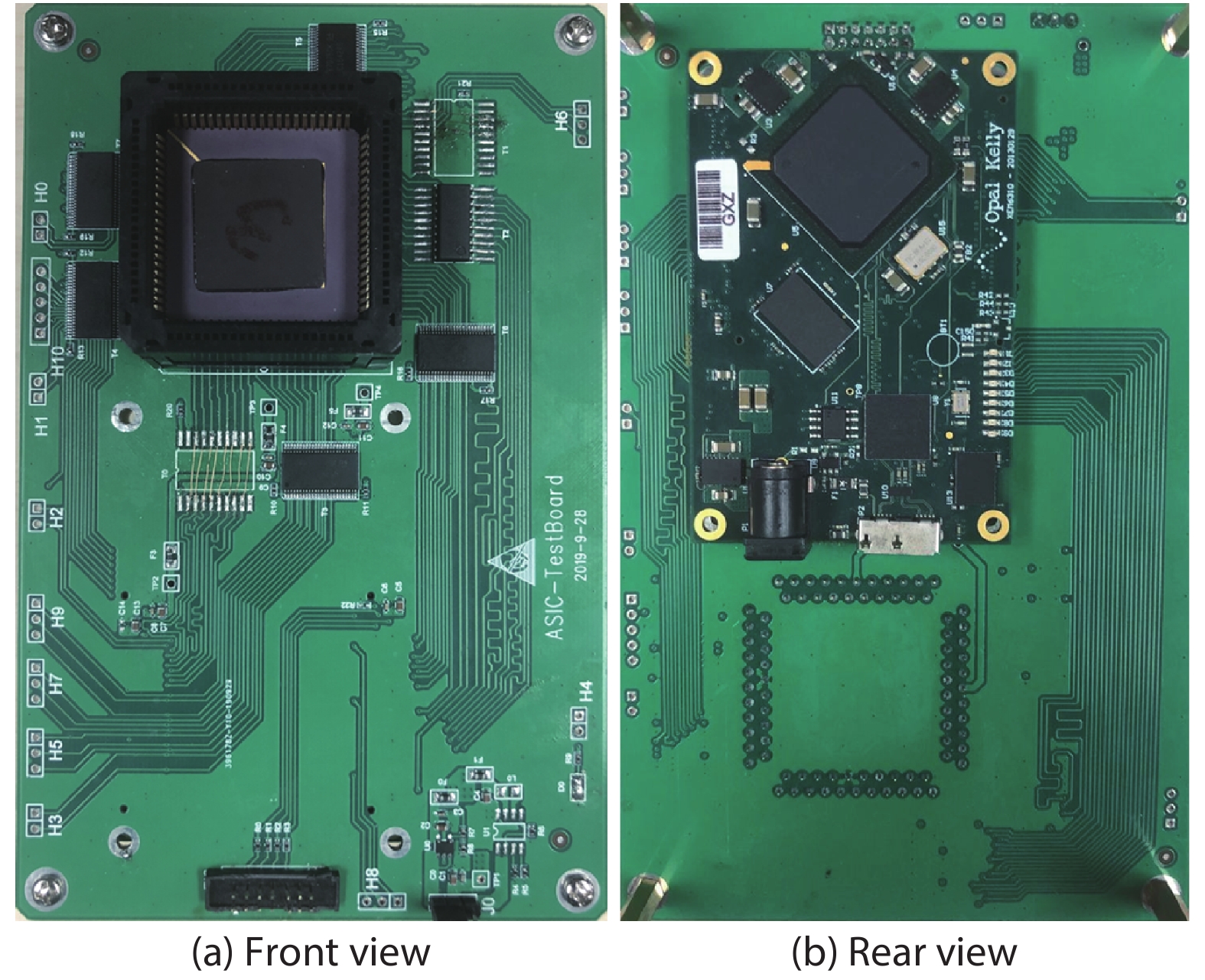

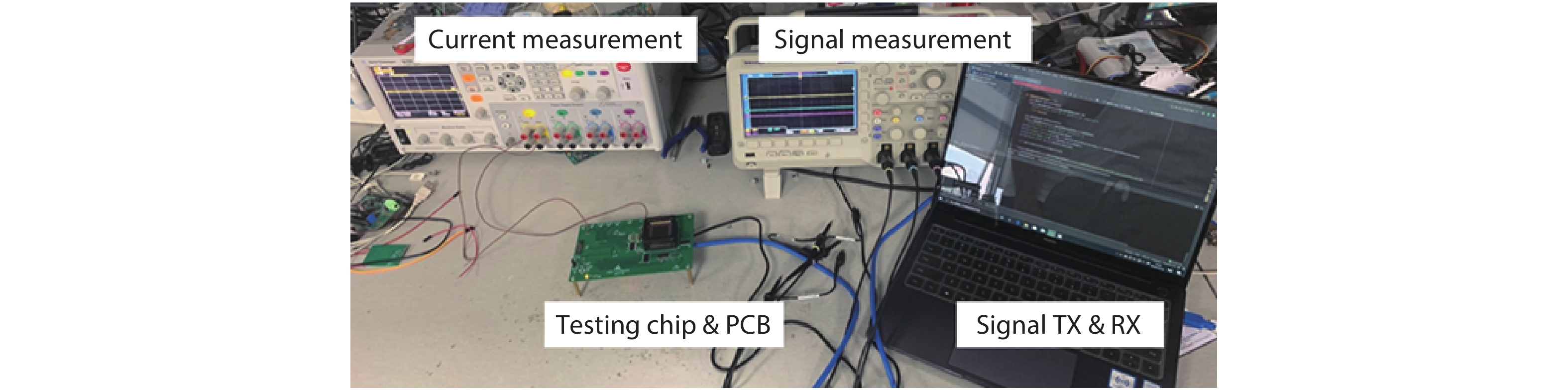

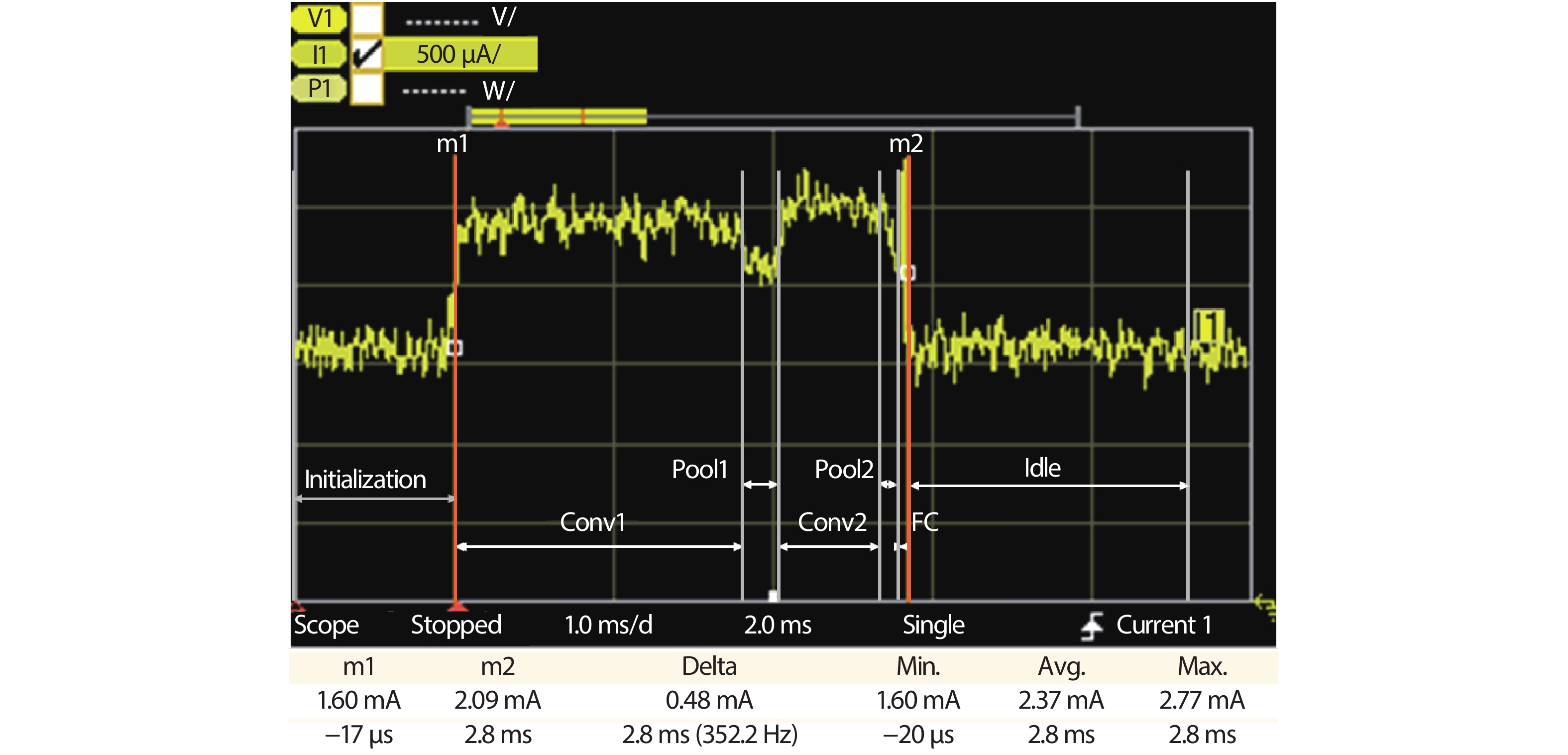

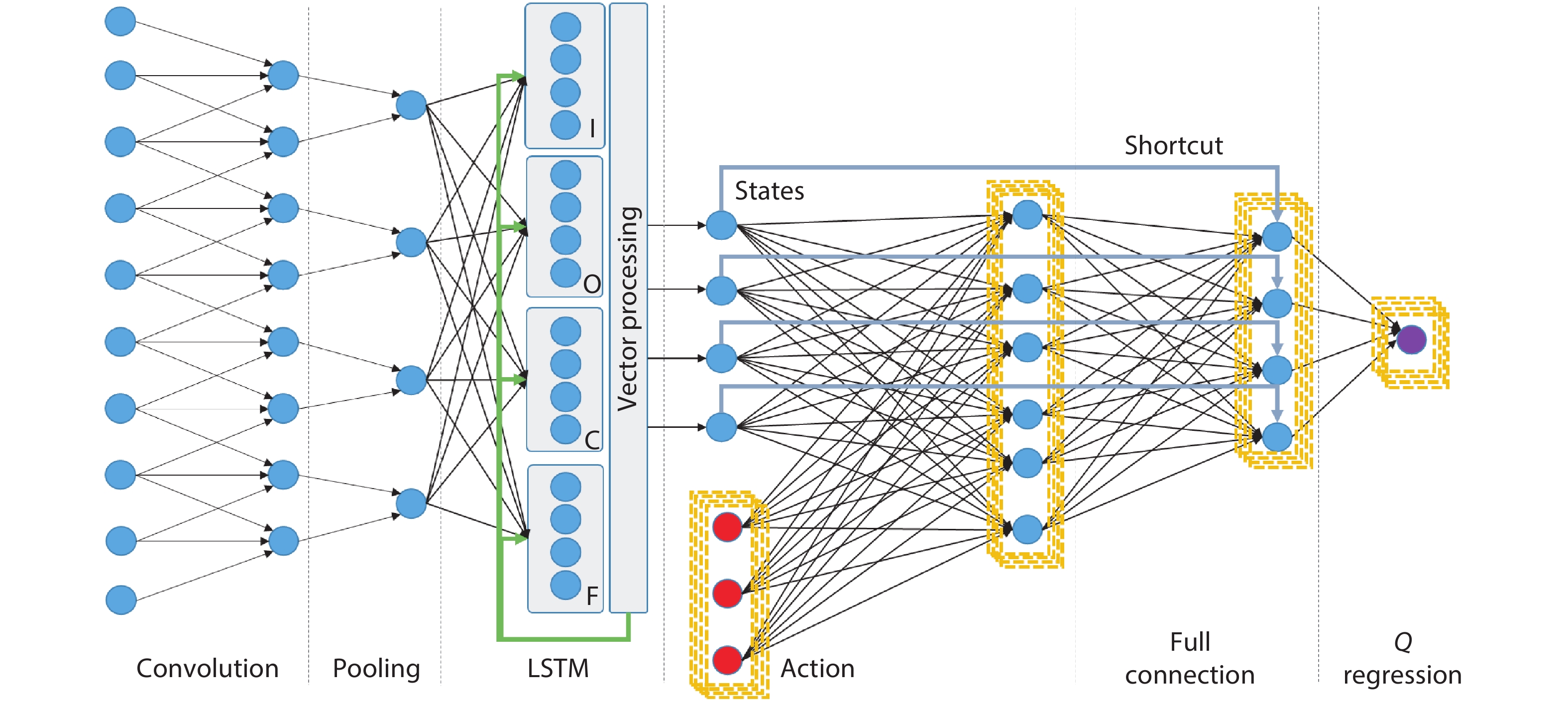

Driven by the continuous scaling of nanoscale semiconductor technologies, recent years have witnessed steady advances in machine learning techniques and applications. Dedicated machine learning accelerators, especially for neural networks, have recently attracted the interest of computer architects and VLSI designers. State-of-the-art accelerators increase performance by deploying a large number of processing elements, but they still suffer from degraded resource utilization across hybrid and non-standard algorithmic kernels. In this work, we exploit the properties of important neural network kernels for both perception and control to propose a reconfigurable dataflow processor, which adjusts the dataflow patterns, the functionality of the processing elements, and the on-chip storage according to the network kernels. In contrast to state-of-the-art fine-grained dataflow techniques, the proposed coarse-grained dataflow reconfiguration approach enables extensive sharing of computing and storage resources. Three hybrid networks for MobileNet, deep reinforcement learning, and sequence classification are constructed and analyzed with customized instruction sets and toolchain. A test chip has been designed and fabricated in UMC 65 nm CMOS technology, with a measured power consumption of 7.51 mW at 100 MHz on a die of 1.8 × 1.8 mm².
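As a rough aid for reading the measured figures, the short Python sketch below derives three quantities from the numbers quoted in the abstract: energy per clock cycle, die area, and power density over the whole die. The function names are illustrative only. The abstract does not state how many operations complete per cycle, so energy per cycle should not be read as energy per operation.

```python
# Figures quoted in the abstract.
POWER_MW = 7.51      # measured power consumption, in mW
FREQ_MHZ = 100.0     # operating frequency, in MHz
DIE_SIDE_MM = 1.8    # die is 1.8 mm x 1.8 mm


def energy_per_cycle_pj(power_mw: float, freq_mhz: float) -> float:
    """Energy per clock cycle in pJ (mW / MHz gives nJ; x1000 gives pJ)."""
    return power_mw / freq_mhz * 1000.0


def die_area_mm2(side_mm: float) -> float:
    """Area of a square die in mm^2."""
    return side_mm * side_mm


def power_density_mw_per_mm2(power_mw: float, area_mm2: float) -> float:
    """Average power density over the whole die, in mW/mm^2."""
    return power_mw / area_mm2


if __name__ == "__main__":
    area = die_area_mm2(DIE_SIDE_MM)
    print(f"energy per cycle: {energy_per_cycle_pj(POWER_MW, FREQ_MHZ):.1f} pJ")
    print(f"die area:         {area:.2f} mm^2")
    print(f"power density:    {power_density_mw_per_mm2(POWER_MW, area):.2f} mW/mm^2")
```

The power density here is averaged over the full die, pads and I/O included, because the abstract gives no core-only area.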
