Citation:

Haihua Wang, Mingjian Zhou, Xiaolong Jia, Hualong Wei, Zhenjie Hu, Wei Li, Qiumeng Chen, Lei Wang. Recent progress on artificial intelligence-enhanced multimodal sensors integrated devices and systems[J]. Journal of Semiconductors, 2025, 46(1): 011610. doi: 10.1088/1674-4926/24090041


H H Wang, M J Zhou, X L Jia, H L Wei, Z J Hu, W Li, Q M Chen, and L Wang, Recent progress on artificial intelligence-enhanced multimodal sensors integrated devices and systems[J]. J. Semicond., 2025, 46(1), 011610 doi: 10.1088/1674-4926/24090041


Recent progress on artificial intelligence-enhanced multimodal sensors integrated devices and systems

DOI: 10.1088/1674-4926/24090041

CSTR: 32376.14.1674-4926.24090041


Abstract

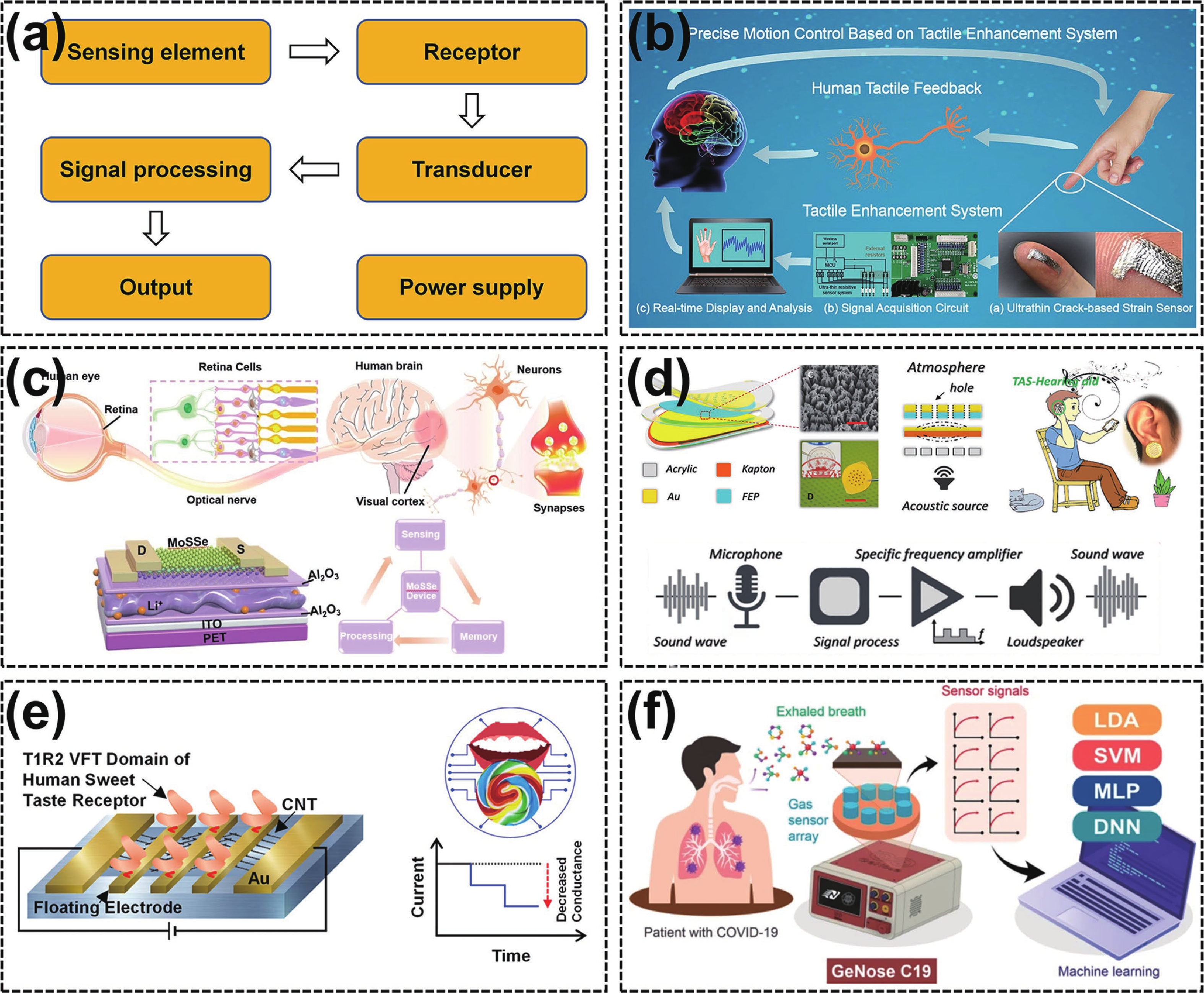

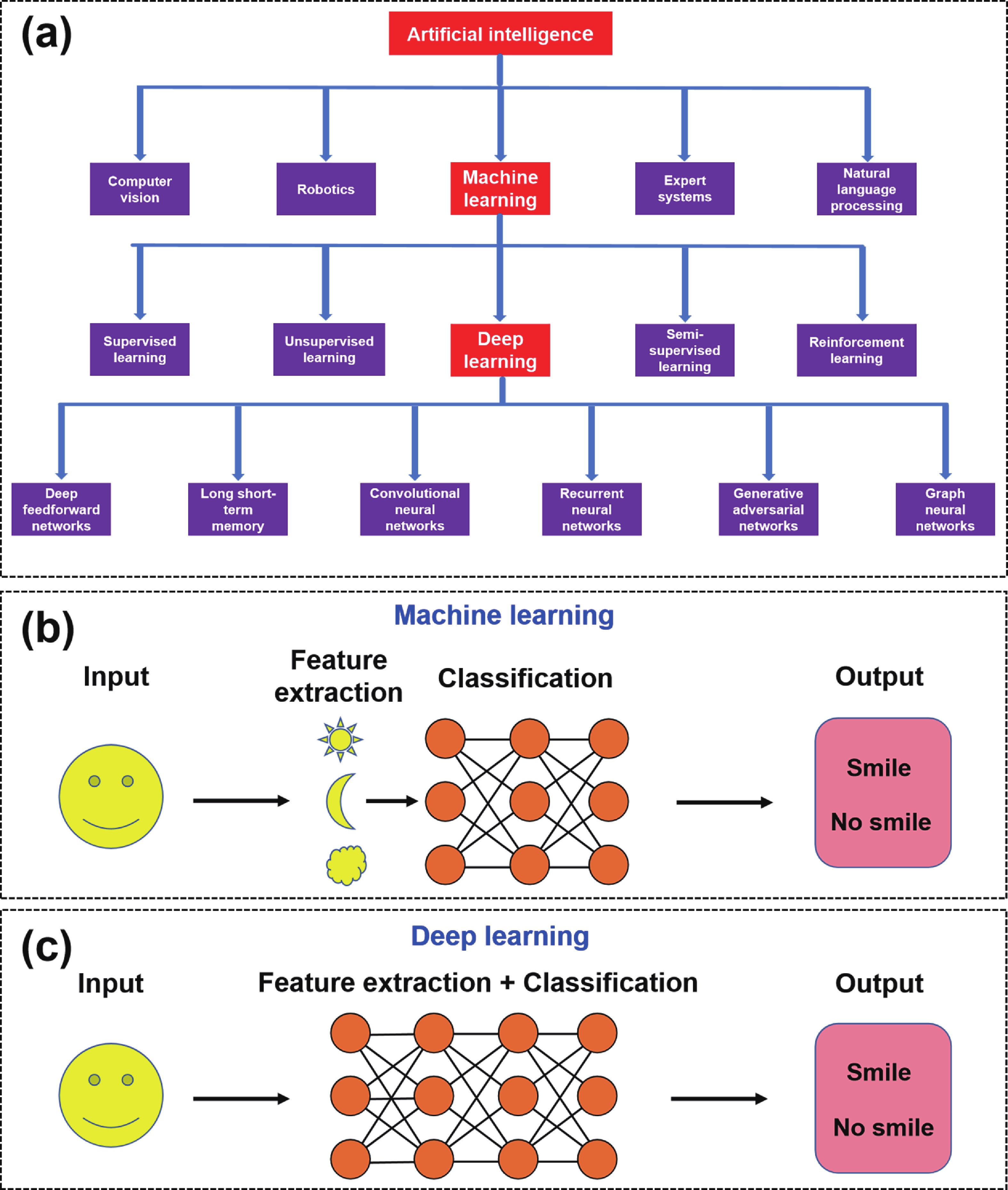

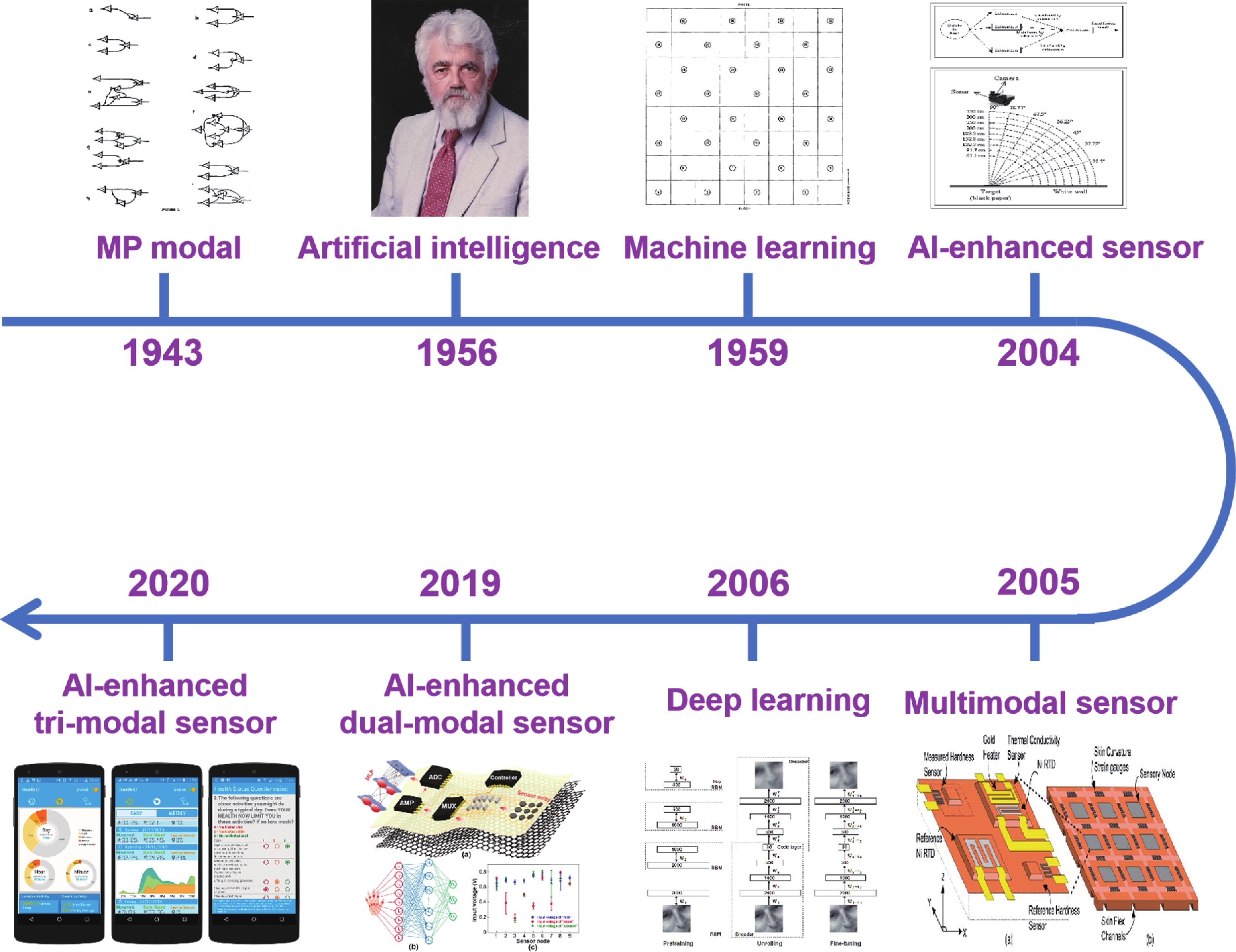

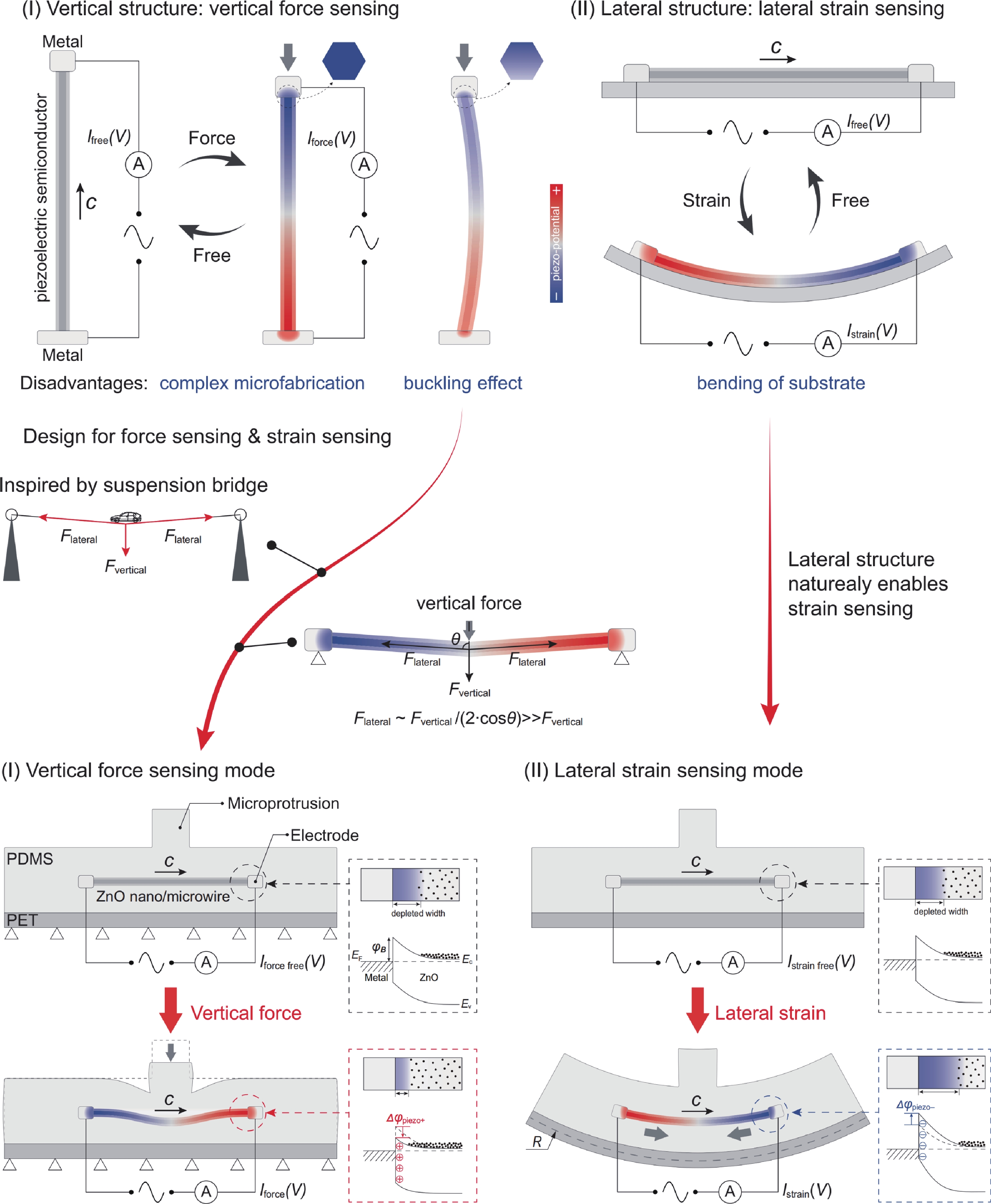

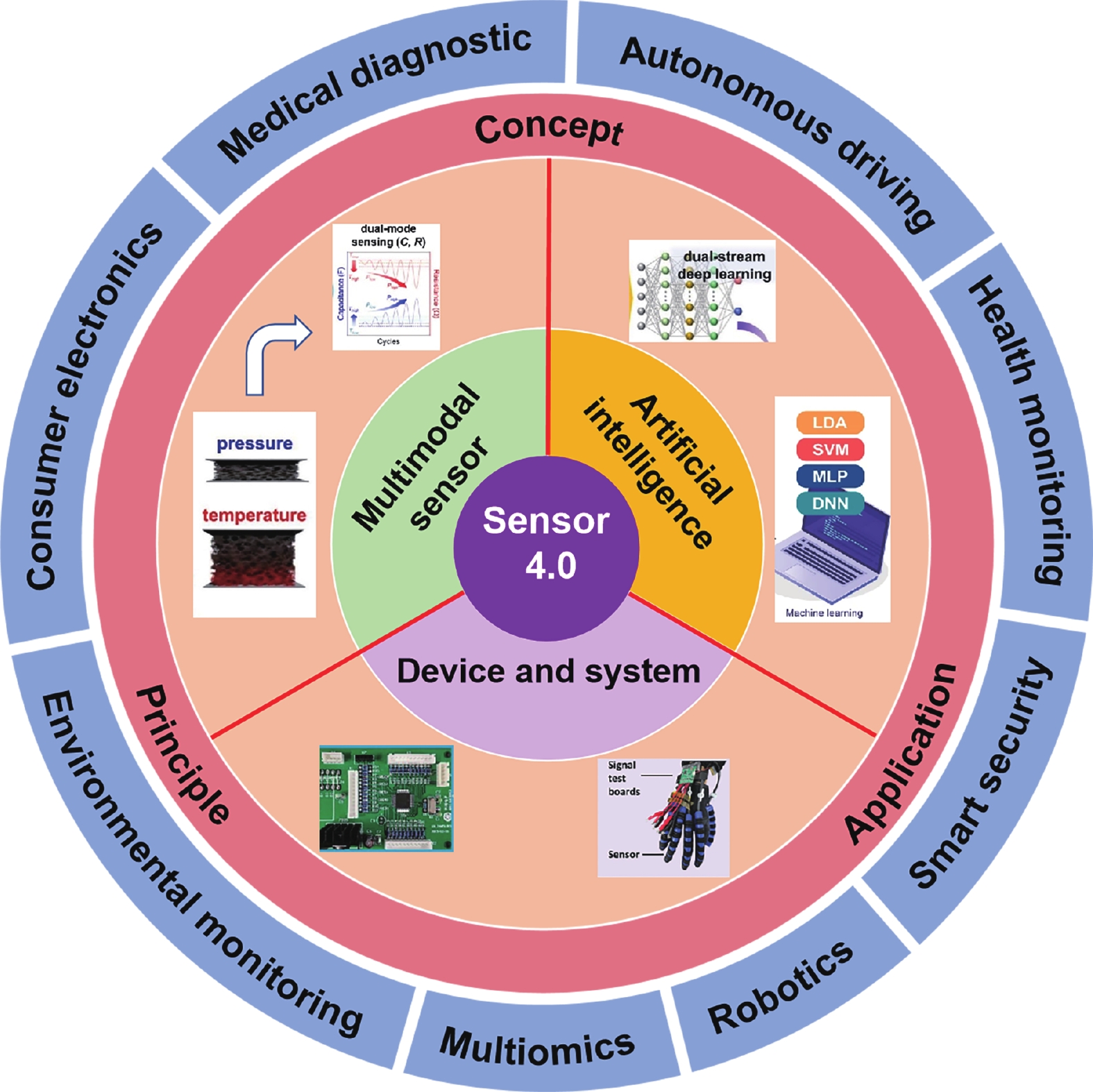

Multimodal sensor fusion makes full use of the complementary strengths of different sensors, compensates for the shortcomings of any single sensor, enables information verification and information security through information redundancy, and thereby improves the reliability and safety of the overall system. Artificial intelligence (AI), the simulation of human intelligence in machines programmed to think and learn like humans, represents a pivotal frontier in modern scientific research. With the continuous development and adoption of AI technology in the Sensor 4.0 era, multimodal sensor fusion is becoming increasingly intelligent and automated, and further progress is expected. In this context, this review takes a comprehensive look at recent progress on AI-enhanced multimodal sensors and their integrated devices and systems. Starting from the concepts and principles of sensor technologies and AI algorithms, it highlights the theoretical underpinnings, technological breakthroughs, and practical applications of AI-enhanced multimodal sensors in fields such as robotics, healthcare, and environmental monitoring. A comparative study of dual- and tri-modal sensors with and without AI technologies (especially machine learning and deep learning) illustrates the potential of AI to improve sensor performance, data processing, and decision-making. Furthermore, the review analyzes the challenges and opportunities presented by AI-enhanced multimodal sensors and offers an outlook on forthcoming advancements.
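The claim that redundancy enables information verification and improves reliability can be made concrete with a classic, non-AI baseline: inverse-variance weighted fusion of two sensors that measure the same quantity, plus a simple cross-check between them. This is a minimal illustrative sketch, not the method used in the review; the function names, the (value, variance) input format, the example temperature readings, and the 3-sigma consistency threshold are all hypothetical assumptions.

```python
import math


def fuse_redundant(readings):
    """Inverse-variance weighted fusion of redundant readings.

    readings: list of (value, variance) pairs from sensors measuring the
    same physical quantity (hypothetical input format).
    Returns (fused_value, fused_variance).
    """
    if not readings:
        raise ValueError("need at least one reading")
    # Less noisy sensors (smaller variance) receive larger weights.
    weights = [1.0 / var for _, var in readings]
    total = sum(weights)
    fused = sum(w * v for w, (v, _) in zip(weights, readings)) / total
    # The fused variance is never larger than the smallest input variance.
    return fused, 1.0 / total


def consistent(a, b, k=3.0):
    """Cross-check two redundant readings.

    Returns True if their difference lies within k combined standard
    deviations (k = 3 is an assumed threshold, not from the source).
    """
    (va, sa), (vb, sb) = a, b
    return abs(va - vb) <= k * math.sqrt(sa + sb)


if __name__ == "__main__":
    # Hypothetical readings: (value in degC, variance in degC^2)
    thermistor = (36.8, 0.04)
    infrared = (37.0, 0.09)
    value, var = fuse_redundant([thermistor, infrared])
    print(f"fused = {value:.3f} degC, variance = {var:.4f} degC^2")
    print("consistent:", consistent(thermistor, infrared))
```

With these example readings the fused variance is 9/325 (about 0.028 °C²), smaller than either sensor's own variance, which is the sense in which redundancy improves reliability, while the cross-check flags a sensor whose reading drifts far from its partner. Learning-based fusion of the kind the abstract mentions typically replaces these fixed, hand-derived weights with models trained on data.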

Keywords:

- sensor
- multimodal sensors
- machine learning
- deep learning
- intelligent system



Haihua Wang received the B.S. degree from Northwest University, Xi’an, China, in 2017, the M.S. degree from Southwest University, Chongqing, China, in 2020, and the Ph.D. degree from Fudan University, Shanghai, China, in 2023. He then joined Nanjing University of Posts and Telecommunications, Nanjing, China. His research interests include fully depleted silicon-on-insulator technology, emerging semiconductor devices, and integrated biomedical sensors.

Wei Li received his doctoral degree in microelectronics and solid-state electronics from Nanjing University in June 2008. From March 2014 to March 2015, he was a visiting researcher at the University of California, San Diego, USA. He has been selected for the "Six Talents" peak program of Jiangsu Province (China) and as a young and middle-aged academic leader of the Jiangsu University Blue Project (China), and he is a senior member of the Chinese Electronics Society.

Qiumeng Chen received the Ph.D. degree from Southwest University, Chongqing, China, in 2021. She is currently a Faculty Member with the College of Integrated Circuit Science and Engineering, Nanjing University of Posts and Telecommunications, Nanjing, China. Her research focuses on the development of novel colorimetric sensors for bioanalysis.

Lei Wang received the B.Eng. degree in electrical engineering from the Beijing University of Science and Technology, Beijing, China, in 2003, the M.Sc. degree in electronic instrumentation systems from the University of Manchester, Manchester, U.K., in 2004, and the Ph.D. degree from the University of Exeter, Exeter, U.K., in 2009, with a thesis on "Tbit/sq.in. scanning probe phase-change memory". From 2008 to 2011, he was a Postdoctoral Research Fellow at the University of Exeter, working on a fellowship funded by the European Commission; this work included the study of phase-change probe memory and the phase-change memristor. In 2020, he joined Nanjing University of Posts and Telecommunications, Nanjing, P. R. China, as a Professor, where he works on phase-change memories, phase-change neural networks, and other phase-change-based optoelectronic devices and their potential applications.

DownLoad:

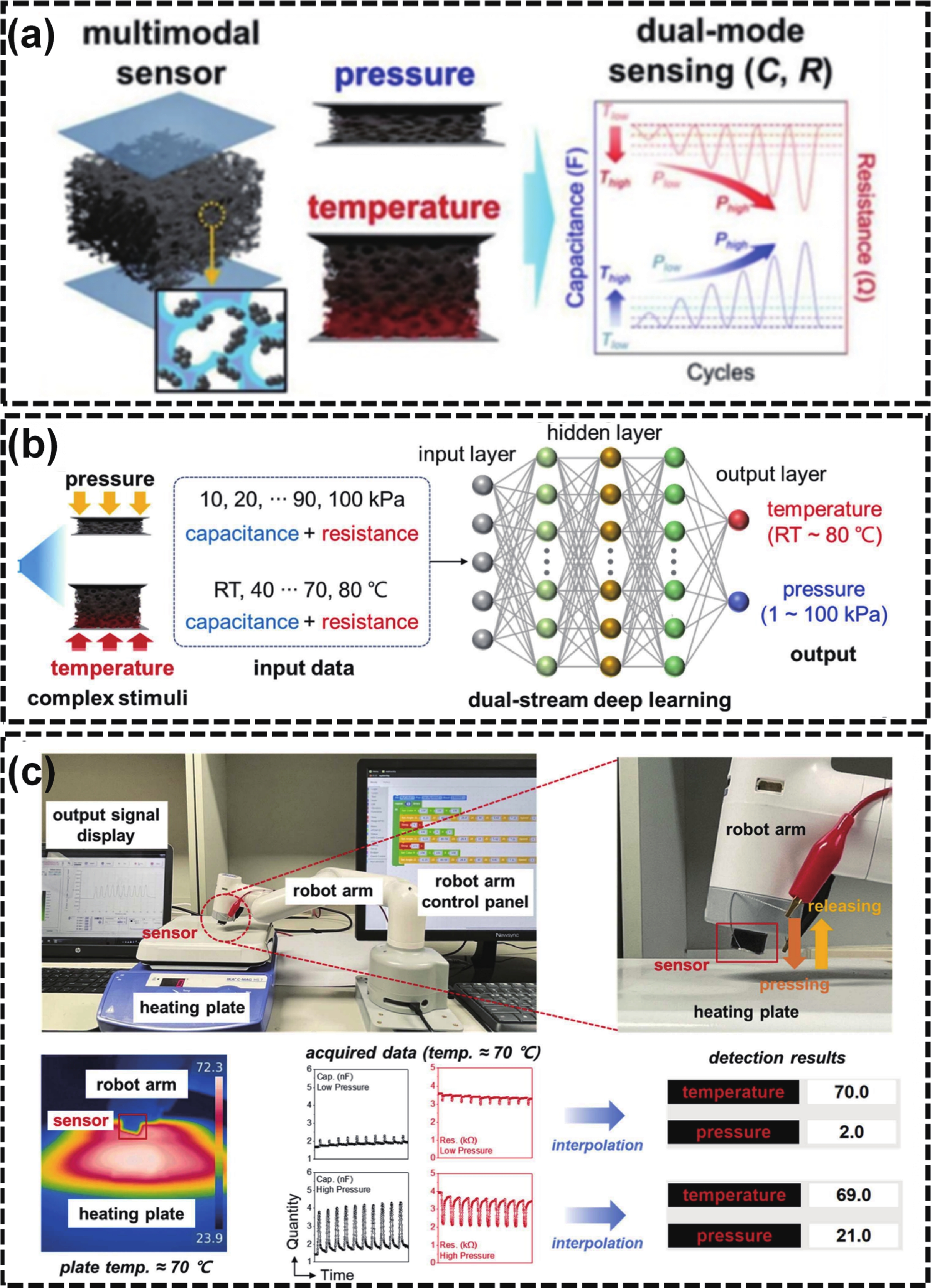

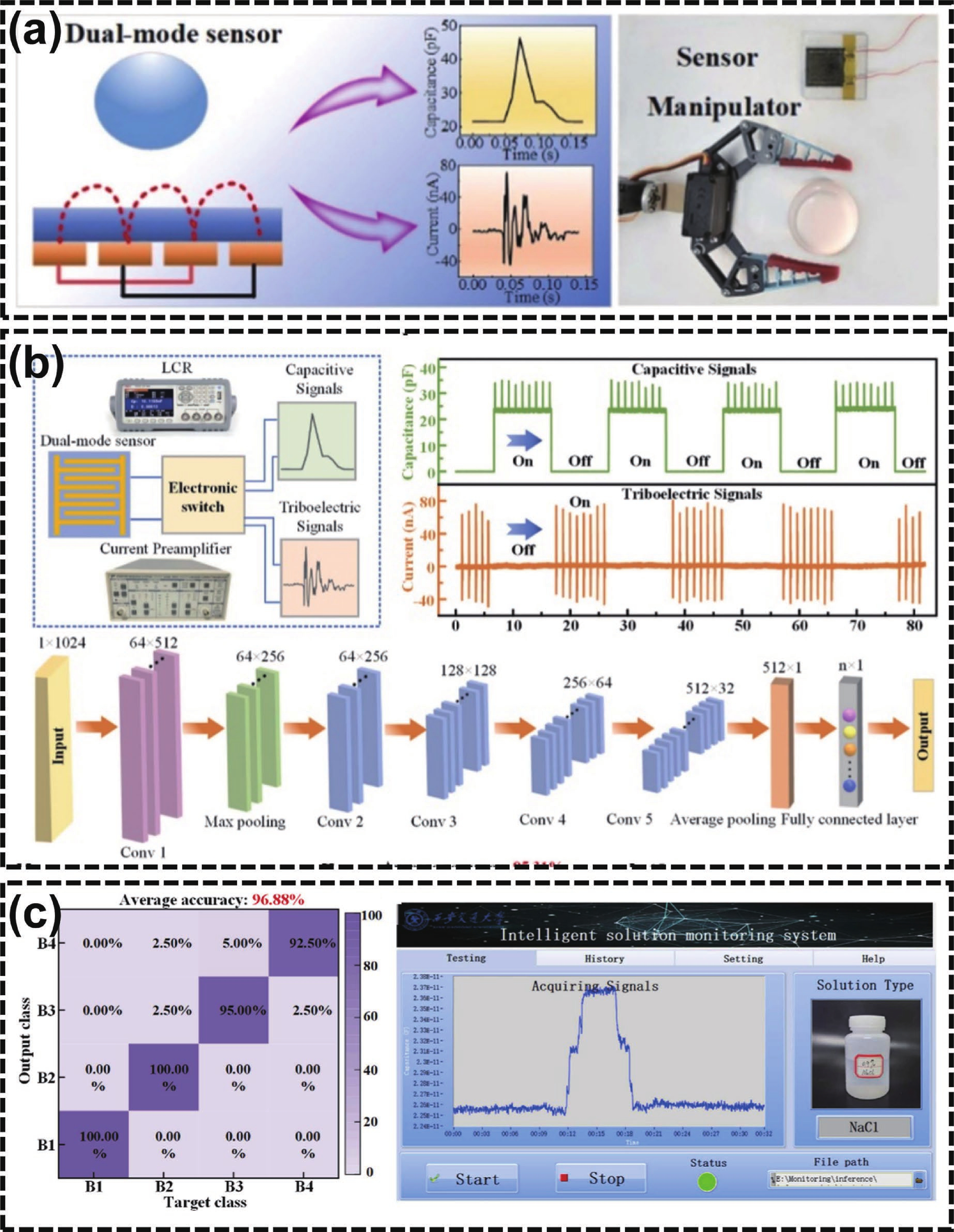

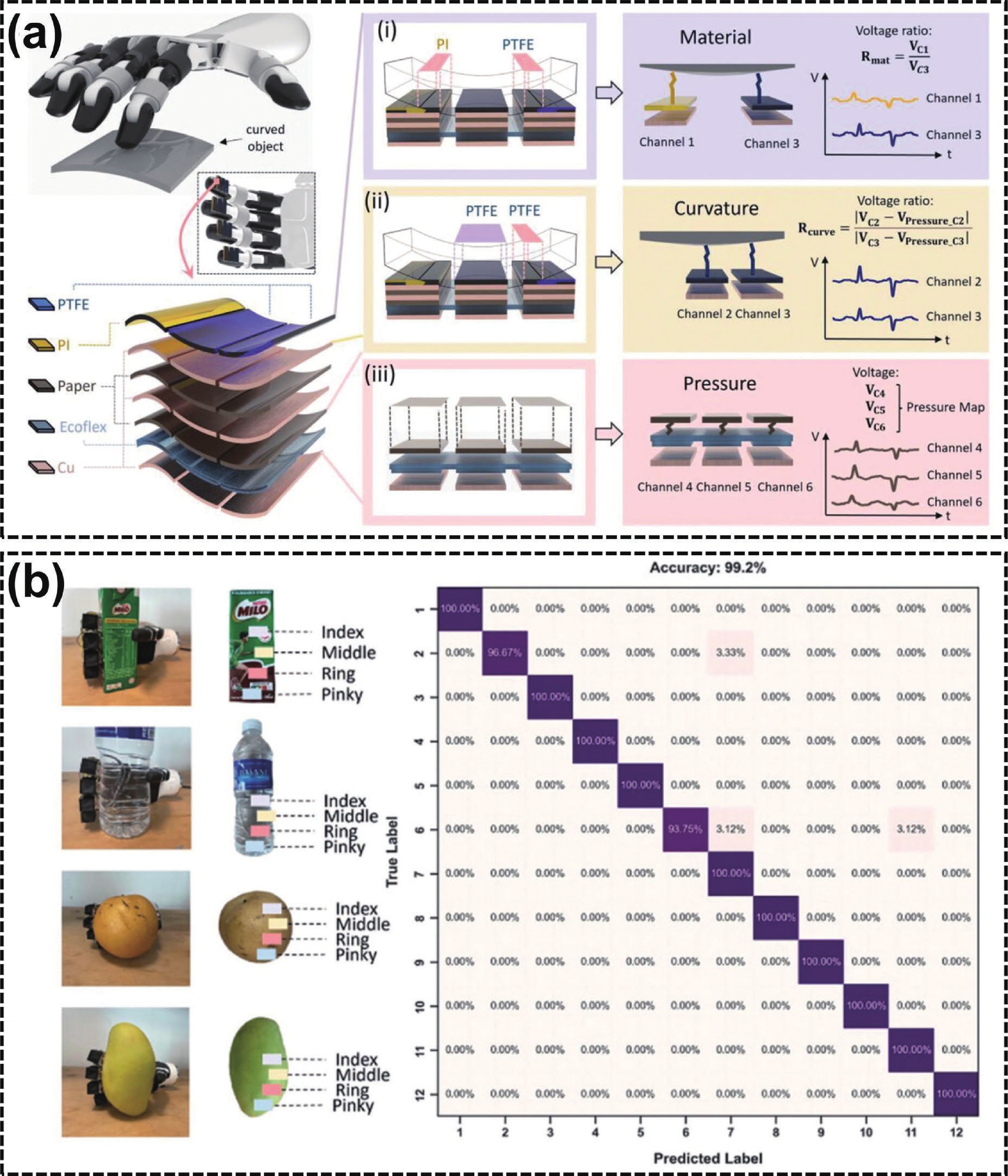

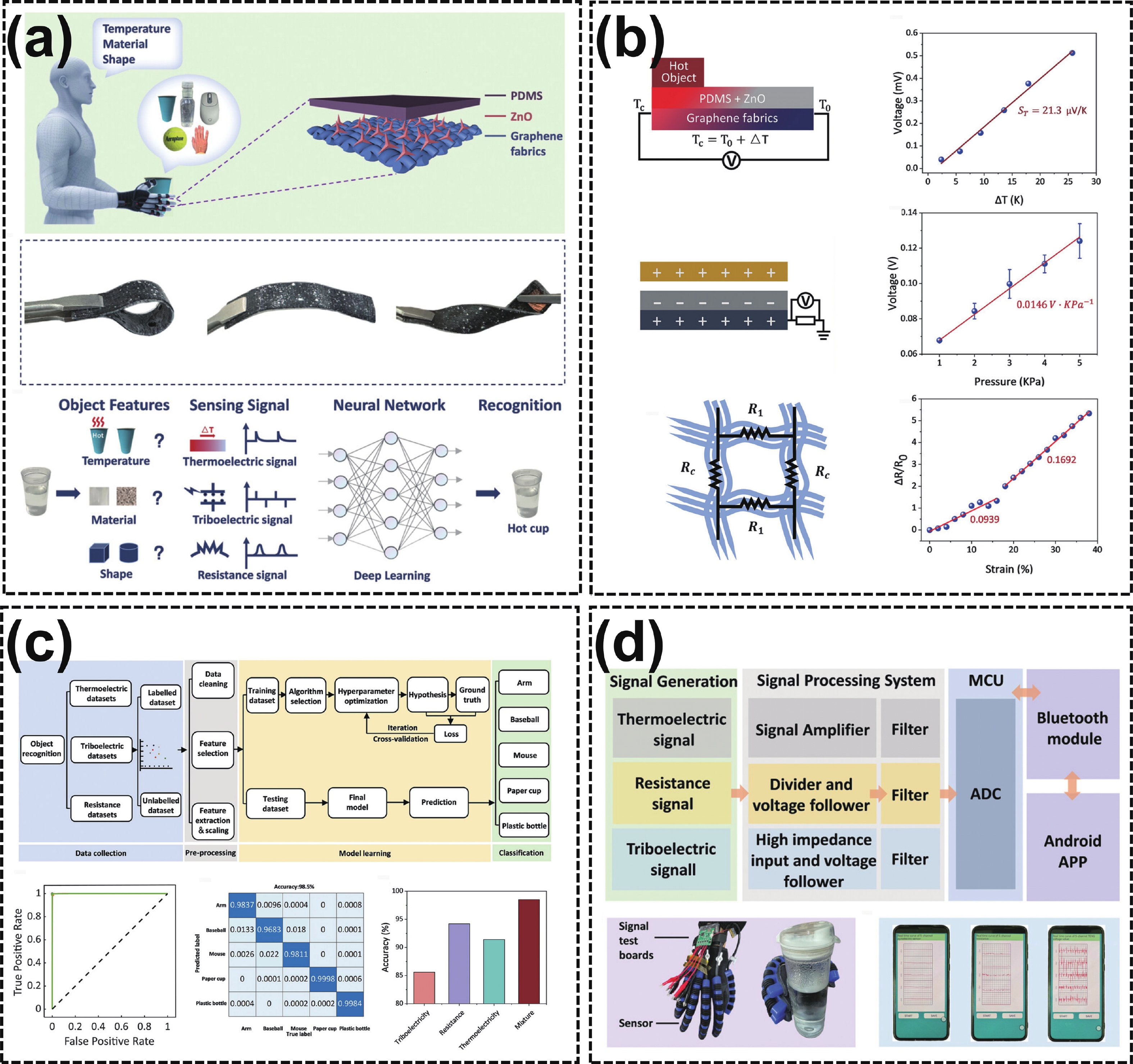

DownLoad: