Citation:

Siqi Liu, Songtao Wei, Peng Yao, Dong Wu, Lu Jie, Sining Pan, Jianshi Tang, Bin Gao, He Qian, Huaqiang Wu. A 28 nm 576K RRAM-based computing-in-memory macro featuring hybrid programming with area efficiency of 2.82 TOPS/mm²[J]. Journal of Semiconductors, 2025, 46(6): 062304. doi: 10.1088/1674-4926/24100017


S Q Liu, S T Wei, P Yao, D Wu, L Jie, S N Pan, J S Tang, B Gao, H Qian, and H Q Wu, A 28 nm 576K RRAM-based computing-in-memory macro featuring hybrid programming with area efficiency of 2.82 TOPS/mm²[J]. J. Semicond., 2025, 46(6), 062304 doi: 10.1088/1674-4926/24100017


A 28 nm 576K RRAM-based computing-in-memory macro featuring hybrid programming with area efficiency of 2.82 TOPS/mm²

DOI: 10.1088/1674-4926/24100017

CSTR: 32376.14.1674-4926.24100017


Abstract

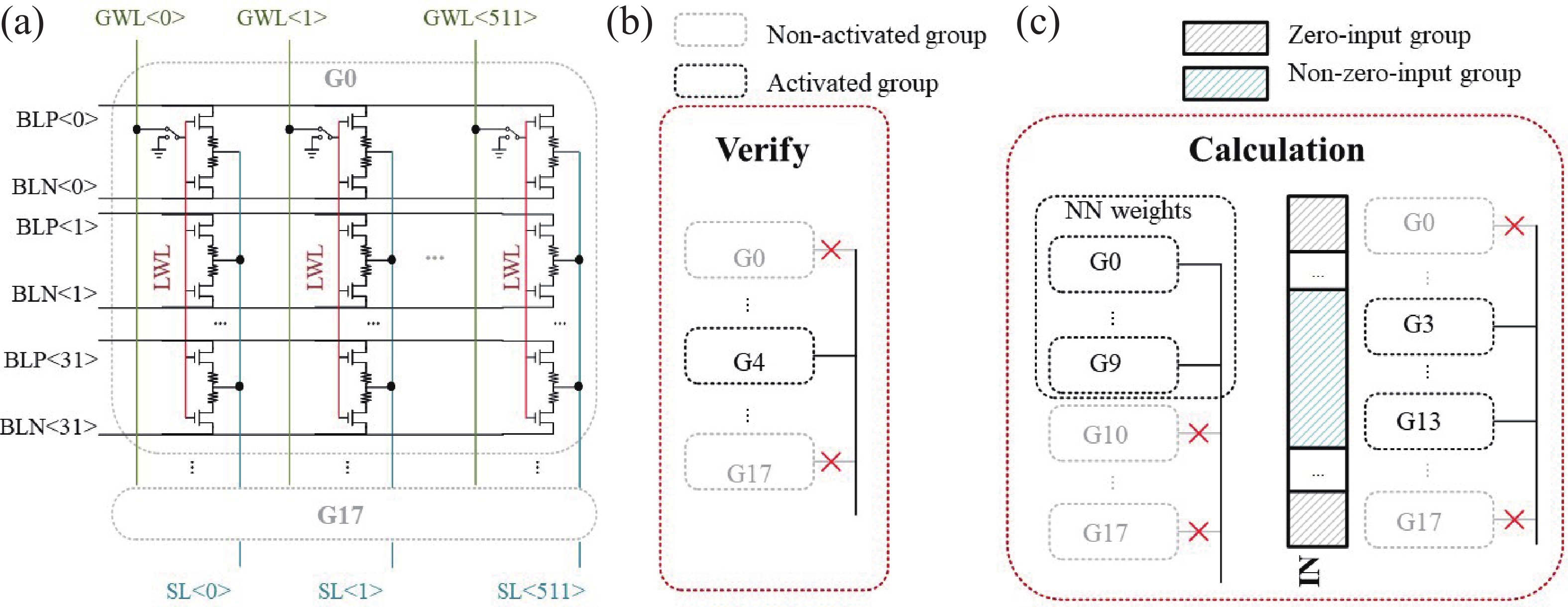

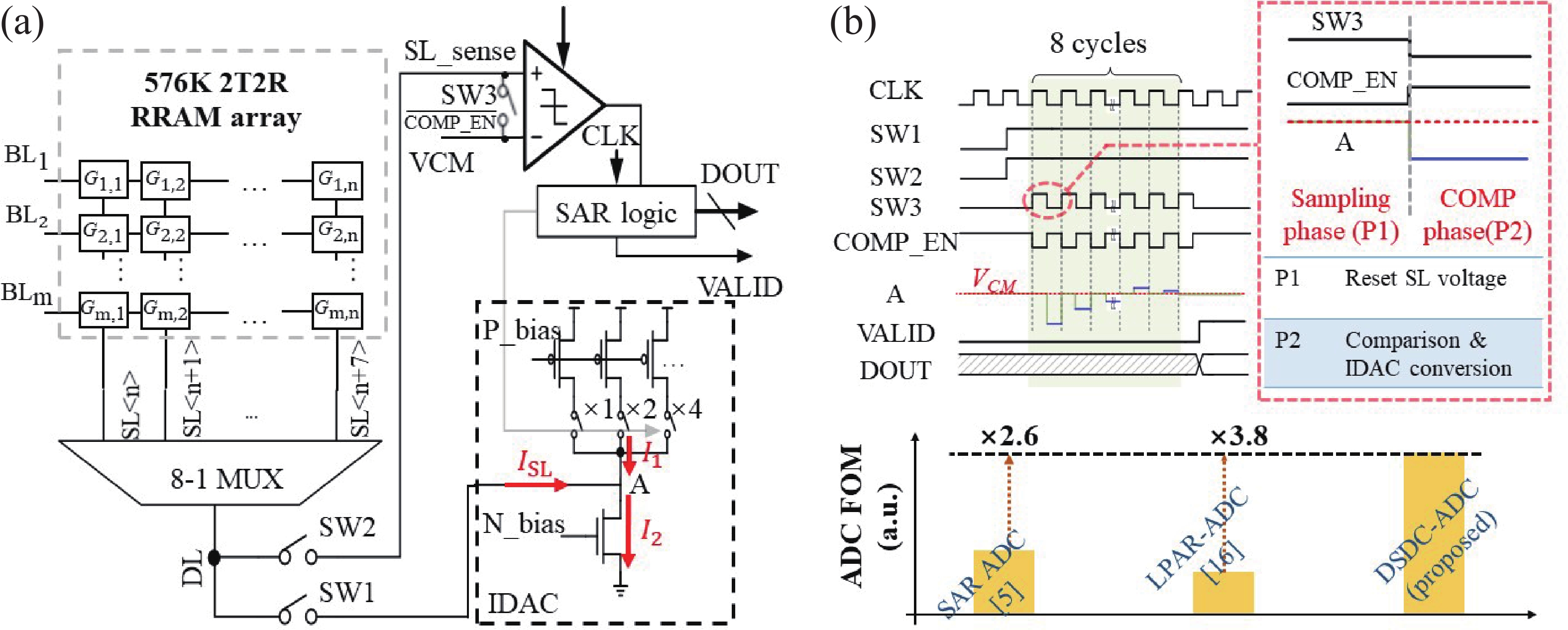

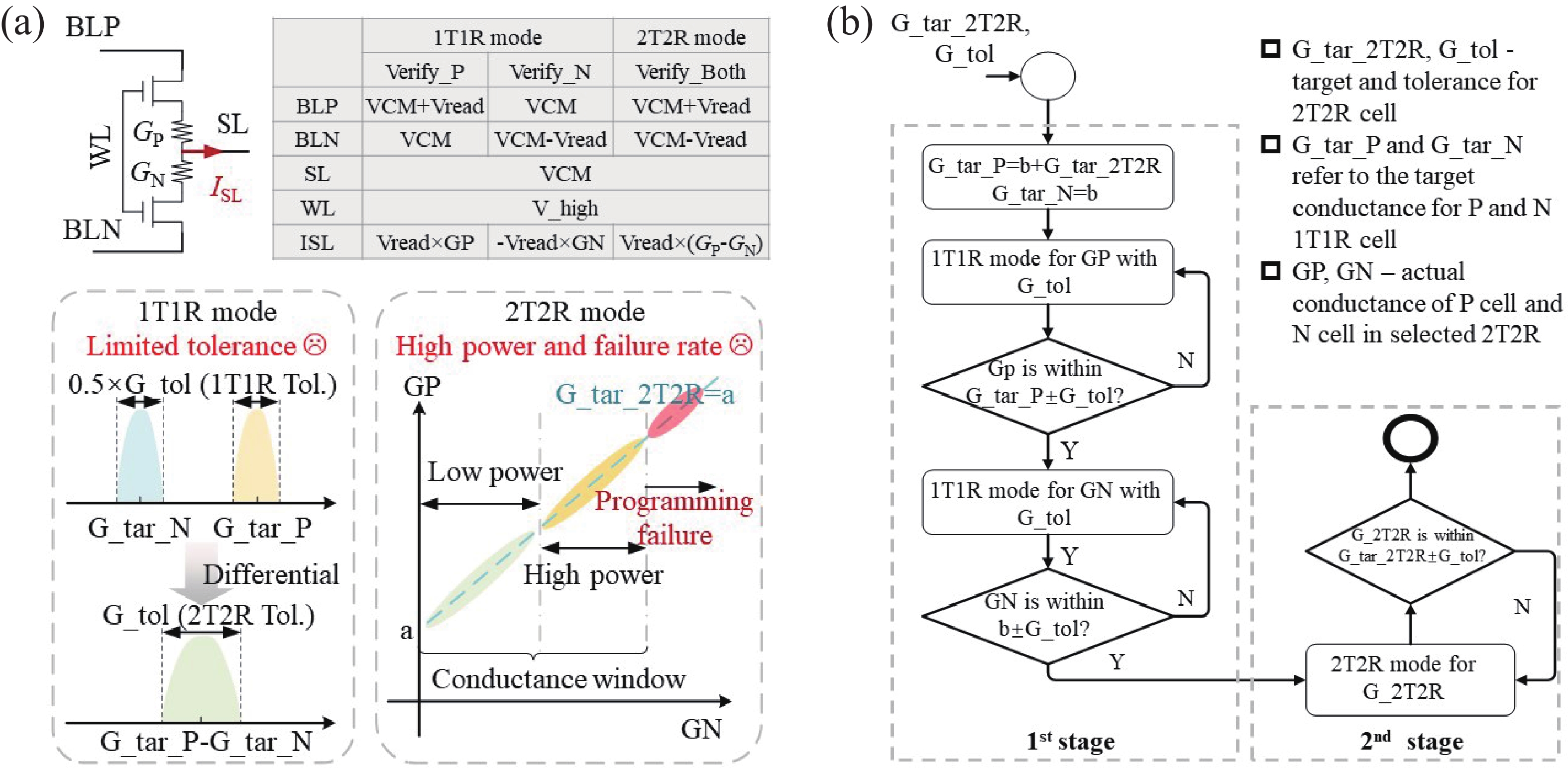

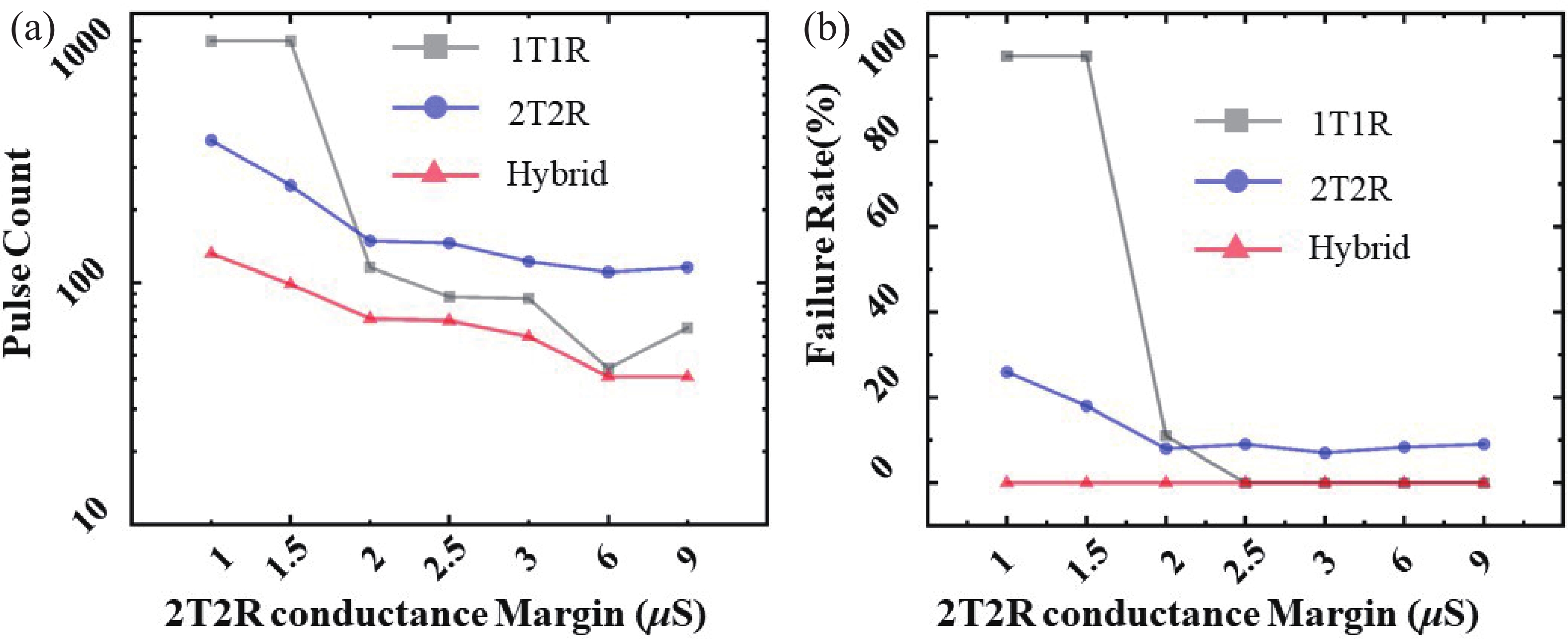

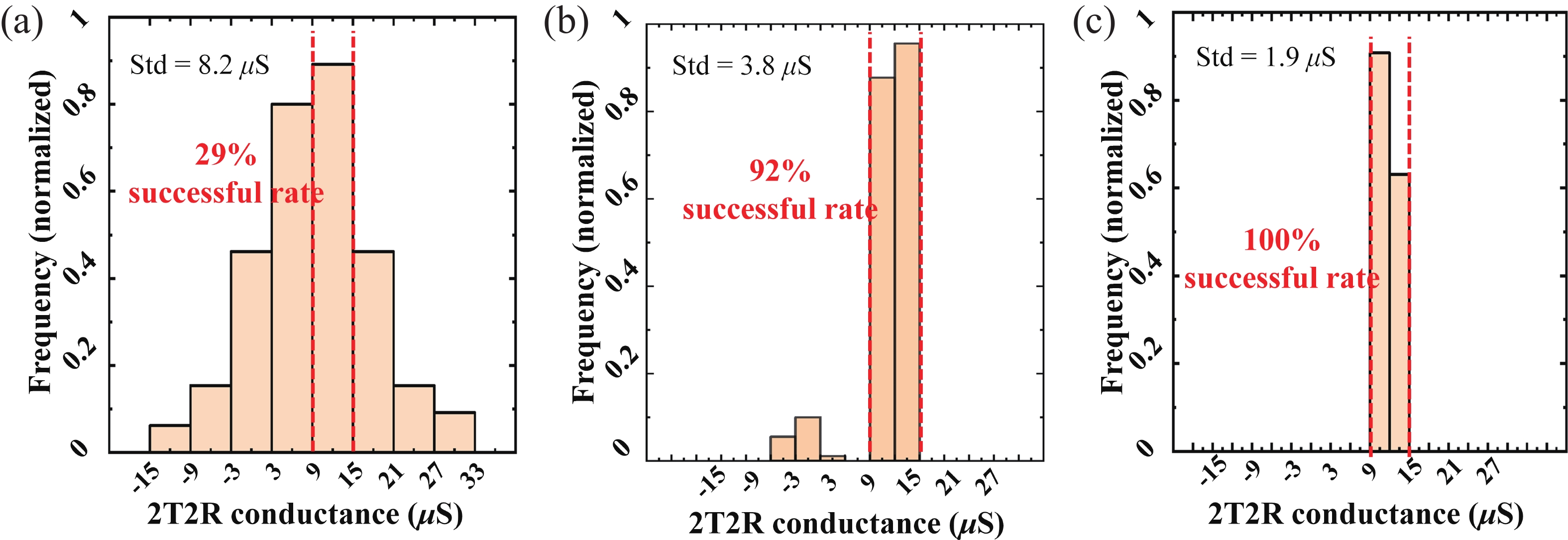

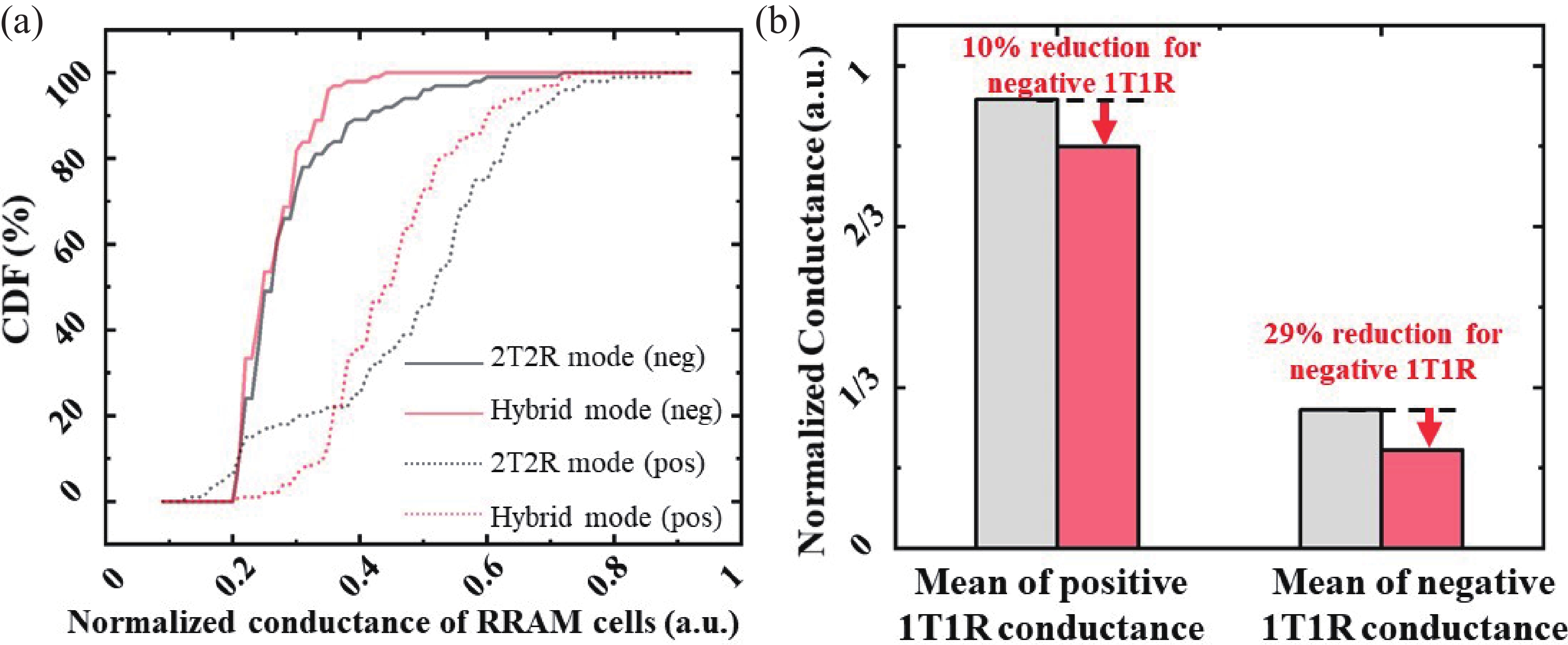

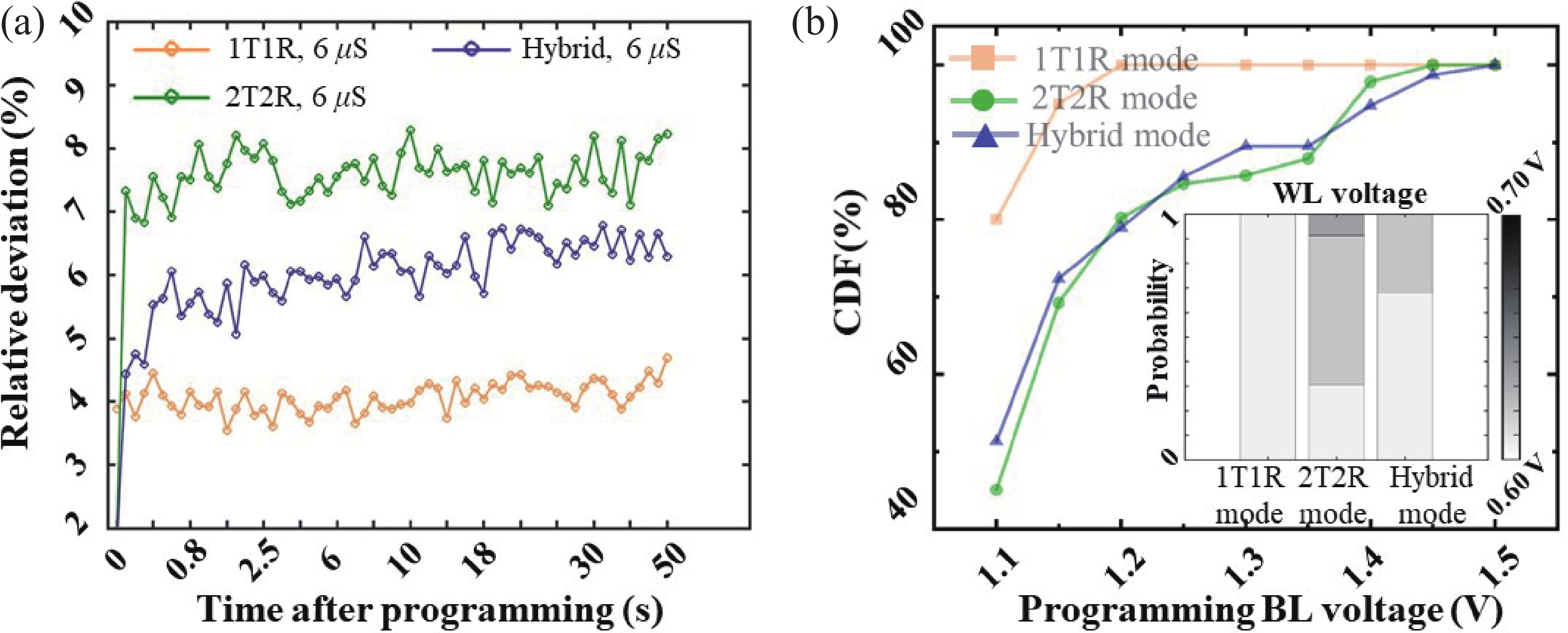

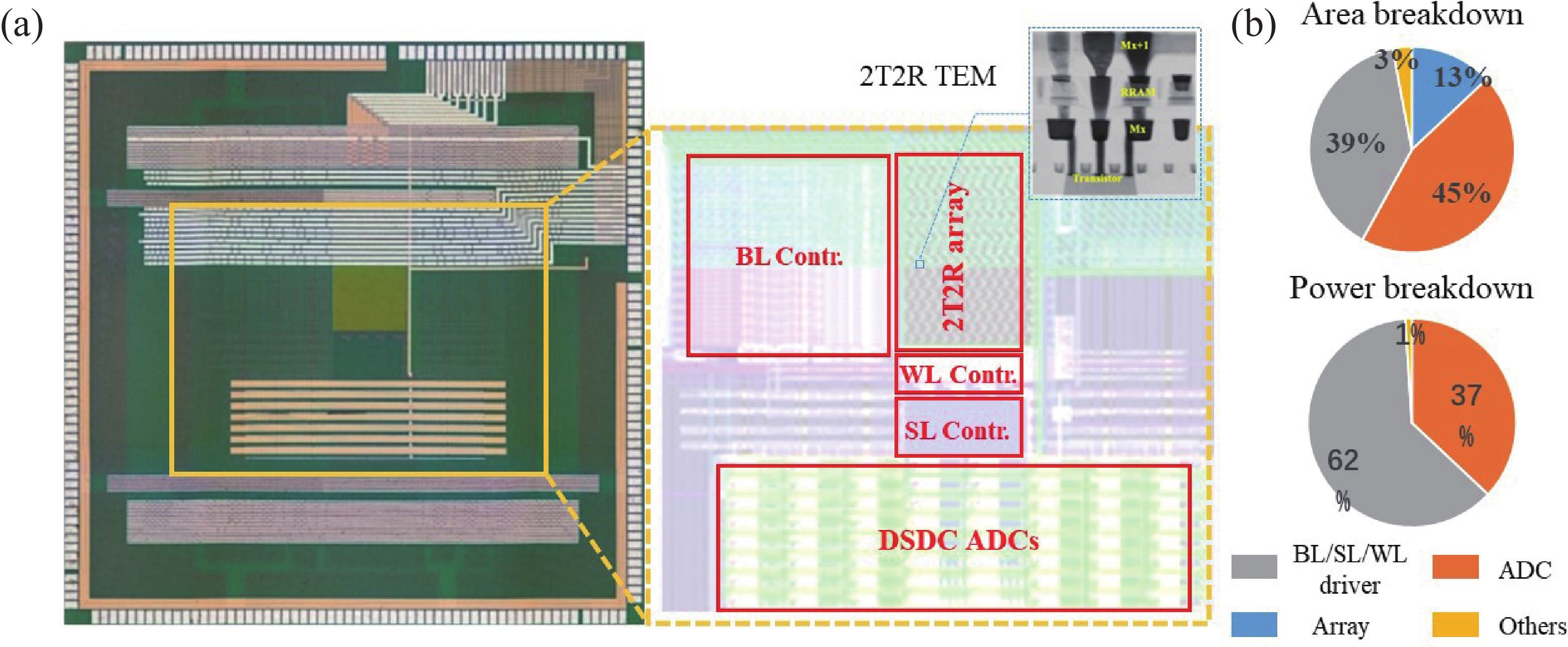

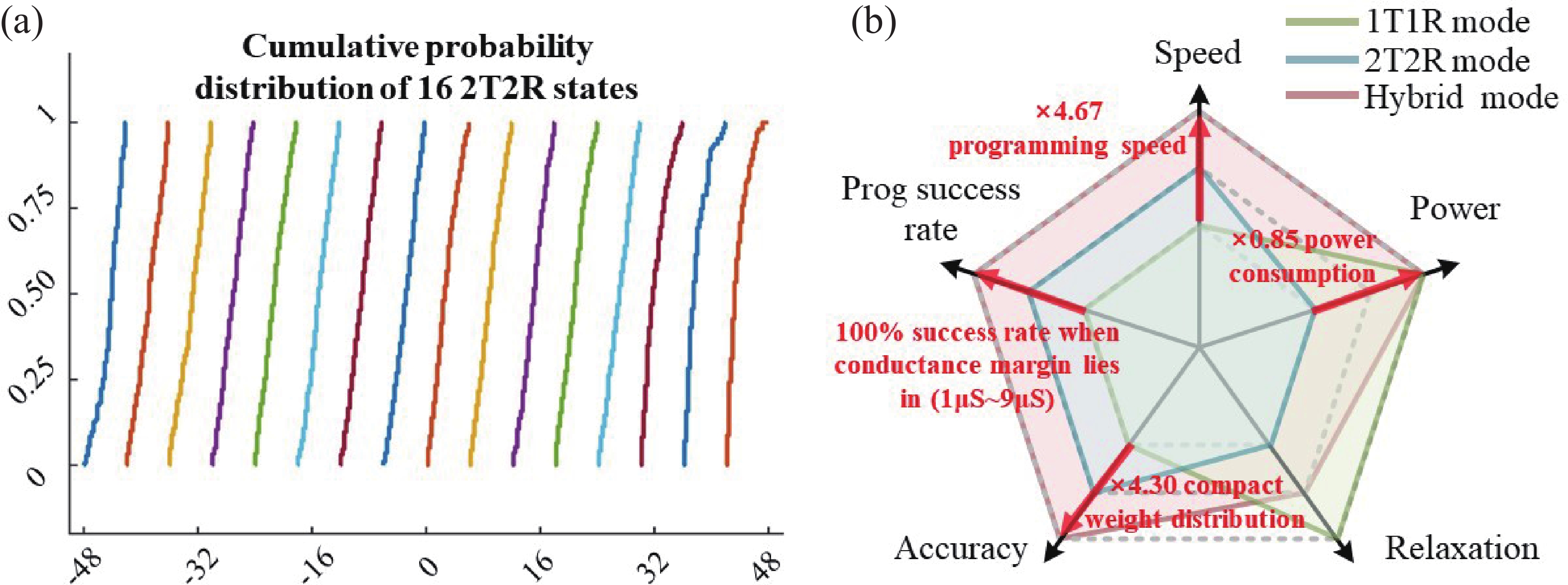

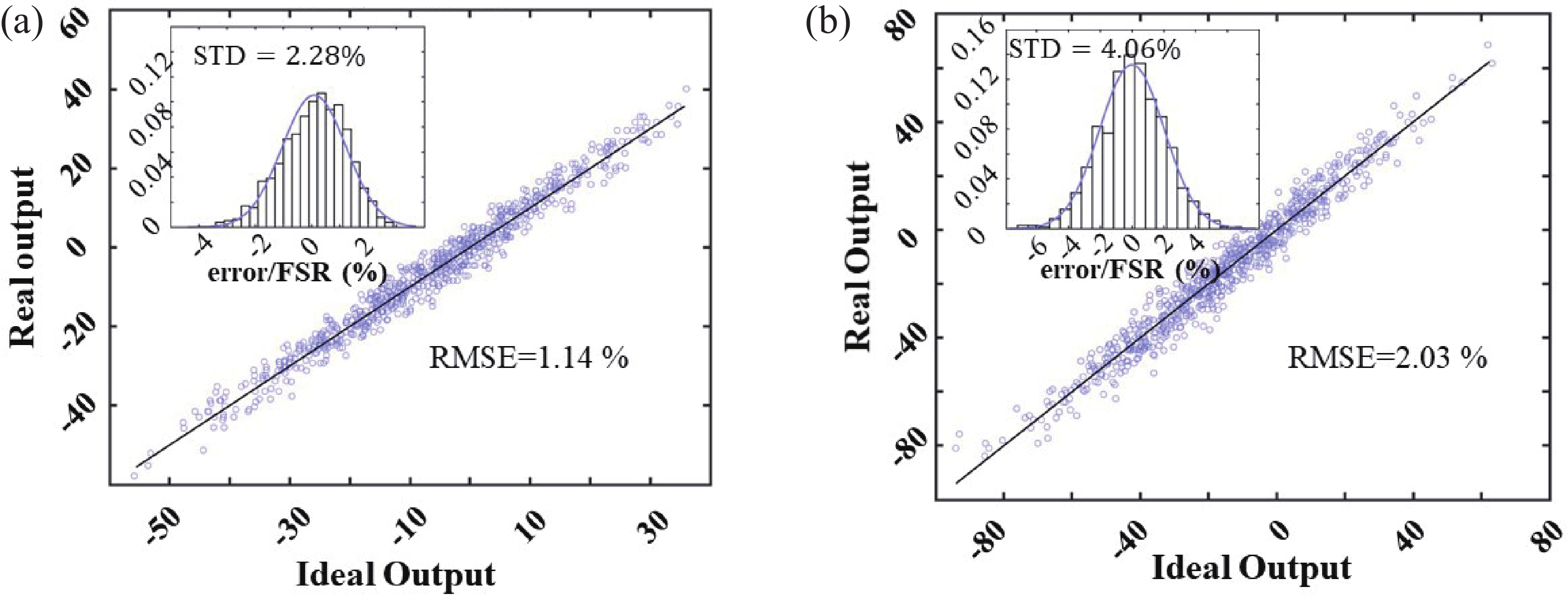

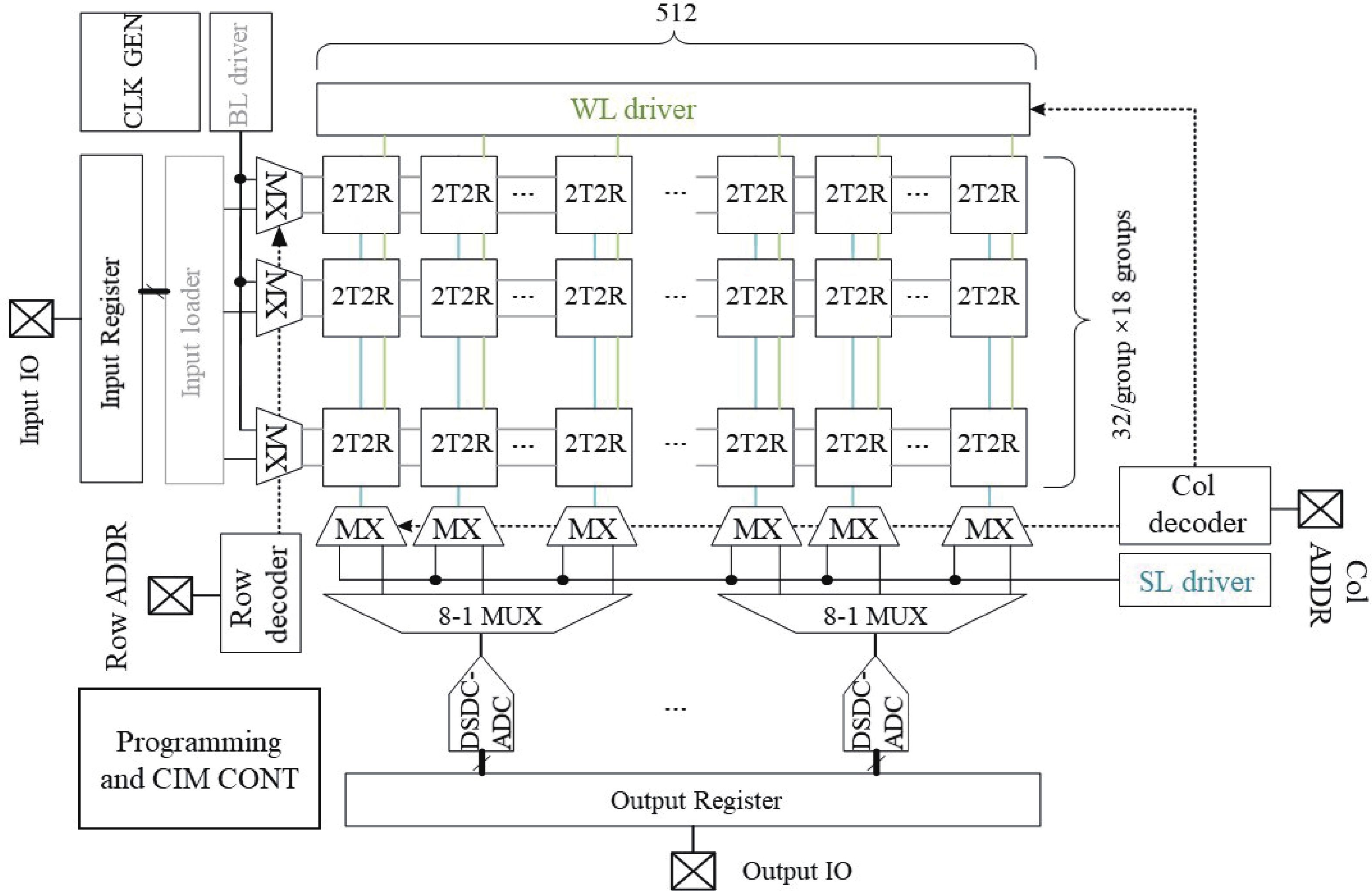

Computing-in-memory (CIM) is a promising candidate for artificial intelligence applications because it avoids data transfer between computation and storage blocks. Resistive random access memory (RRAM) based CIM offers high computing density, non-volatility, and high energy efficiency. However, previous CIM research has predominantly focused on achieving high energy efficiency and high area efficiency for inference, while little attention has been devoted to the challenges of on-chip programming speed, power consumption, and accuracy. In this paper, a fabricated 28 nm 576K RRAM-based CIM macro featuring optimized on-chip programming schemes is proposed to address these issues. Different strategies for mapping weights to RRAM arrays are compared, and a novel direct-current ADC is designed for both the programming and inference stages. With the optimized hybrid programming scheme, 4.67× programming speed, 0.15× power saving, and 4.31× more compact weight distribution are realized. In addition, the macro achieves a normalized area efficiency of 2.82 TOPS/mm² and a normalized energy efficiency of 35.6 TOPS/W.



Siqi Liu received her B.S. degree from Harbin Institute of Technology, Harbin, China, in 2021, and her M.S. degree from Tsinghua University, Beijing, China, in 2024. She is currently a Ph.D. student at the Institute of Neuroinformatics, University of Zurich and ETH Zurich, Zurich, Switzerland. Her primary research interest is NVM-based computing-in-memory circuit design.

Peng Yao received his B.S. degree in microelectronics from Xi’an Jiaotong University, Xi’an, China, in 2014, and his Ph.D. degree from Tsinghua University, Beijing, China, in 2020. His research interests include in-memory and neuromorphic computing, and he has authored or coauthored several papers in Nature, Science, Nature Communications, ISSCC, IEDM, and VLSI.
