Citation:

Huanhui Zhang, Chi Zhang, Xu Yang, Zhe Wang, Cong Shi, Runjiang Dou, Shuangming Yu, Jian Liu, Nanjian Wu, Peng Feng, Liyuan Liu. A 128 × 128 monolithic spike-based hybrid-vision sensor with 0.96 Geps and 117 kfps[J]. Journal of Semiconductors, 2025, 46(9): 092201. doi: 10.1088/1674-4926/25020010

H H Zhang, C Zhang, X Yang, Z Wang, C Shi, R J Dou, S M Yu, J Liu, N J Wu, P Feng, and L Y Liu, A 128 × 128 monolithic spike-based hybrid-vision sensor with 0.96 Geps and 117 kfps[J]. J. Semicond., 2025, 46(9), 092201 doi: 10.1088/1674-4926/25020010

A 128 × 128 monolithic spike-based hybrid-vision sensor with 0.96 Geps and 117 kfps

DOI: 10.1088/1674-4926/25020010

CSTR: 32376.14.1674-4926.25020010

Abstract

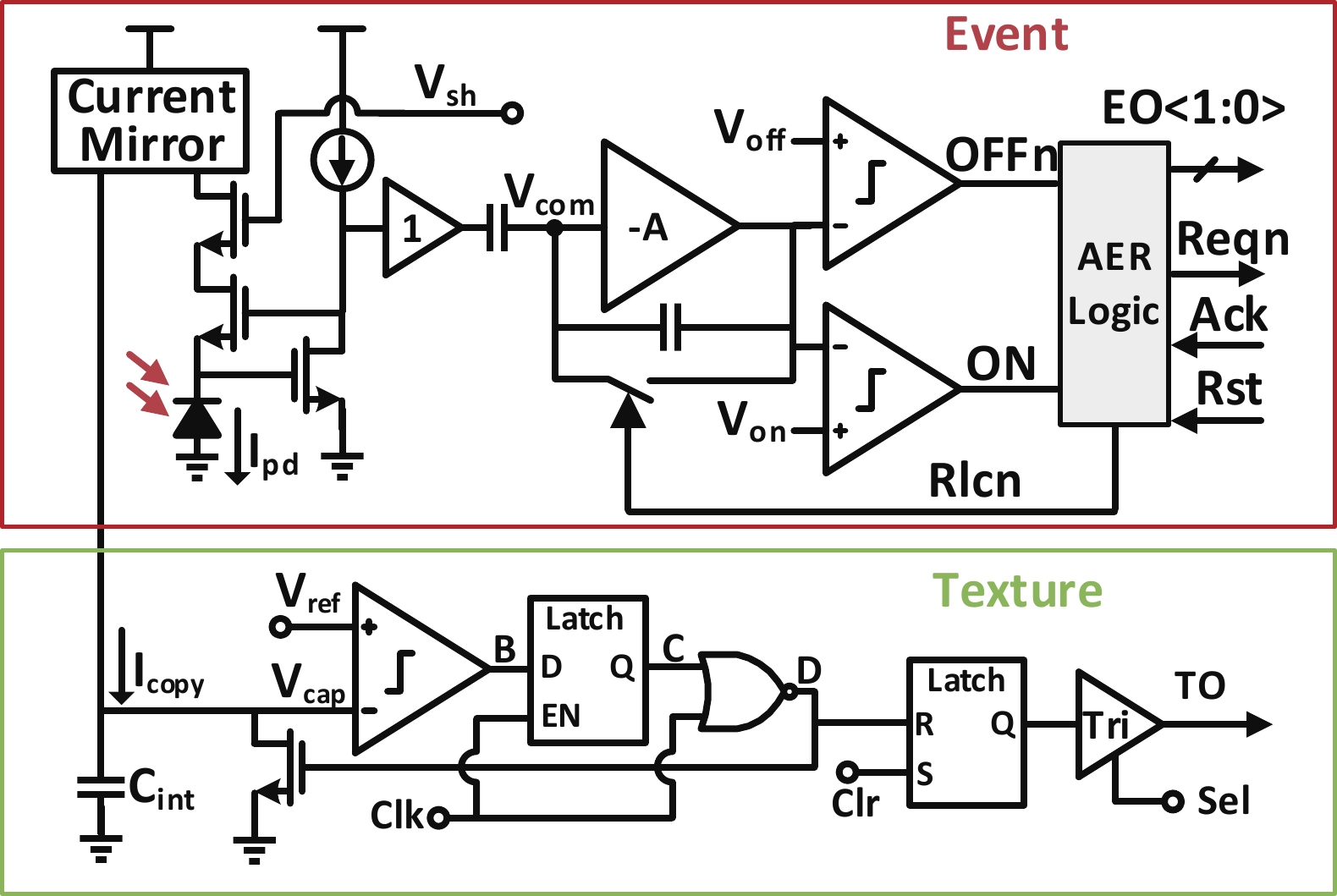

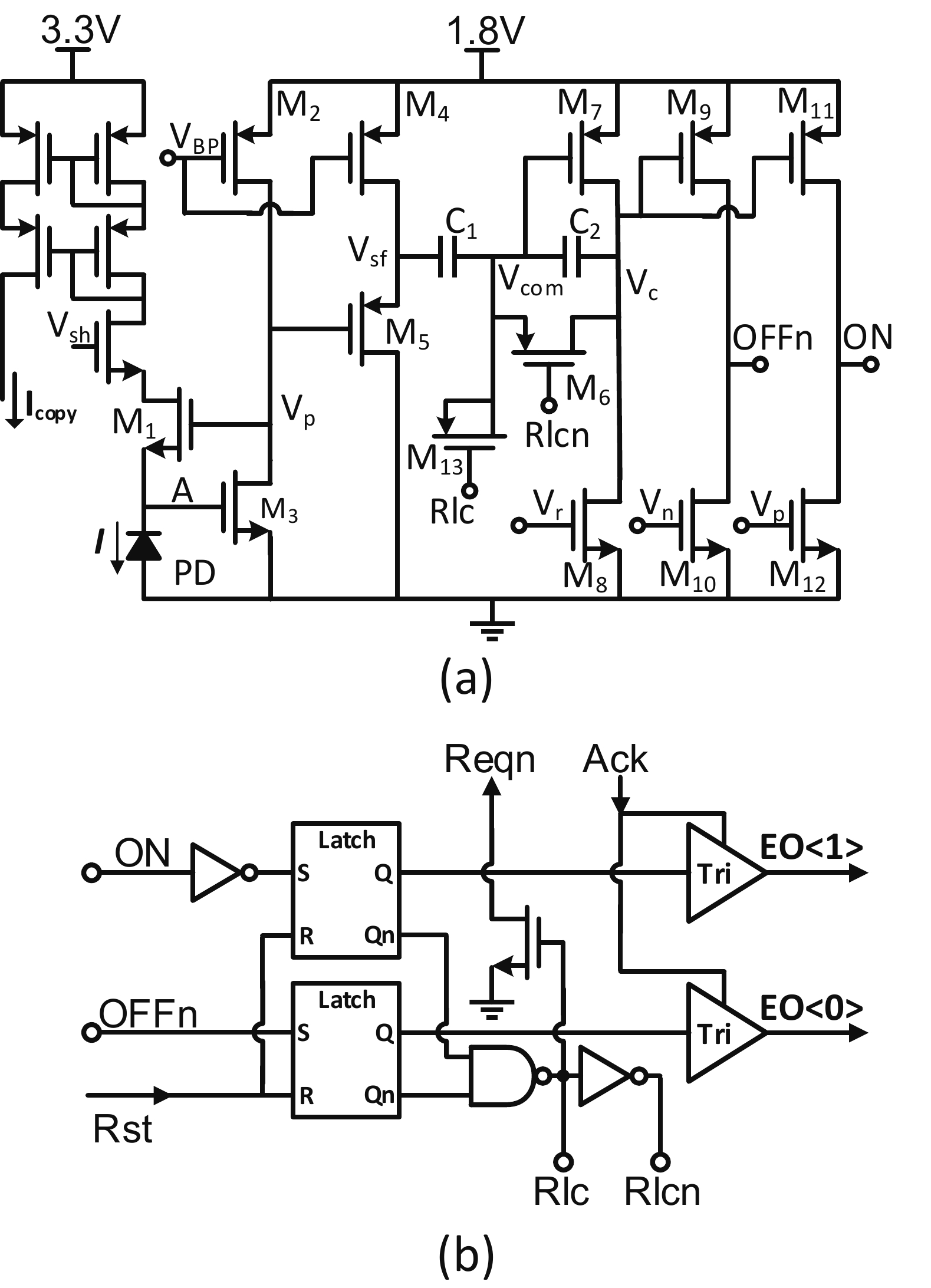

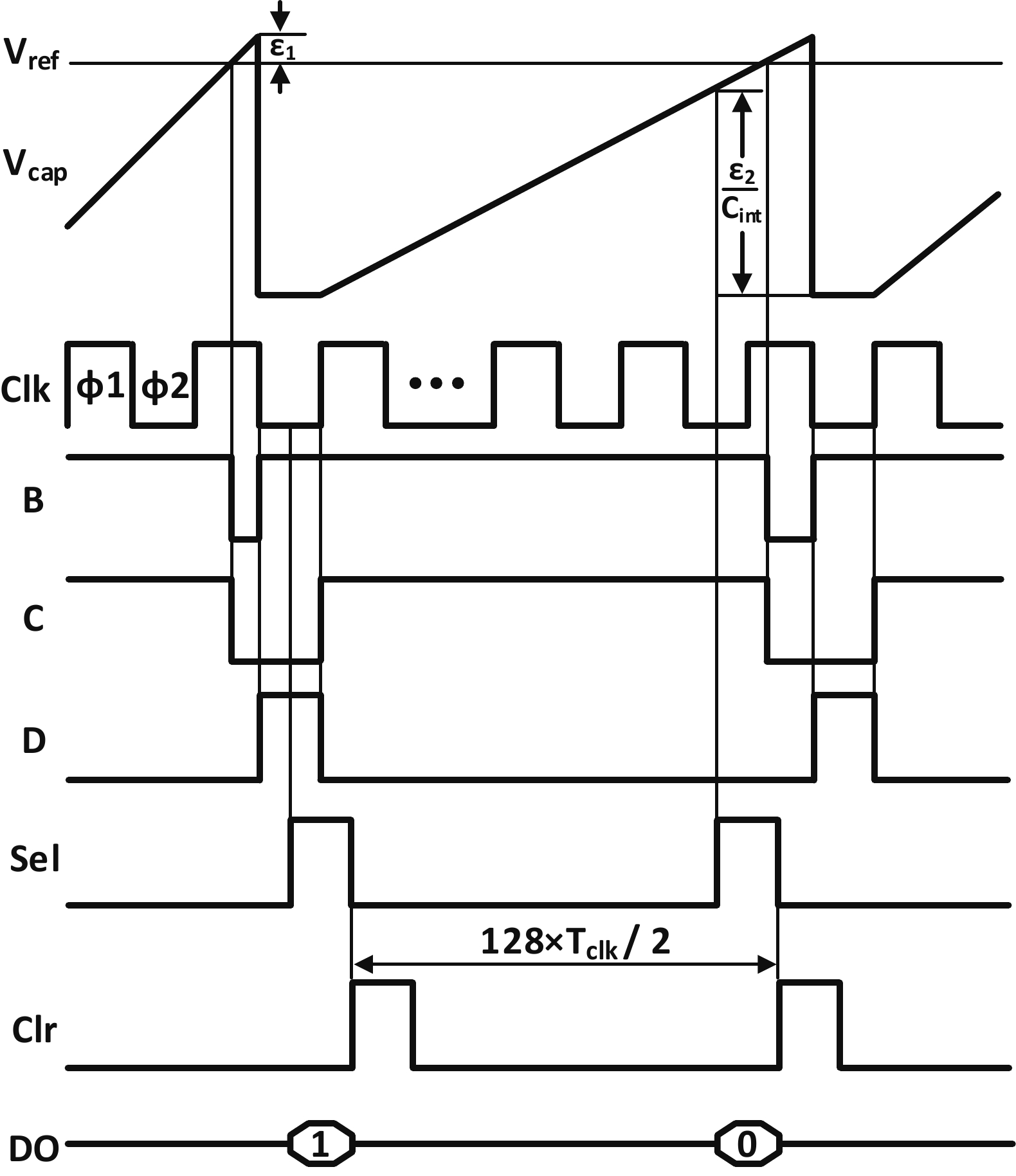

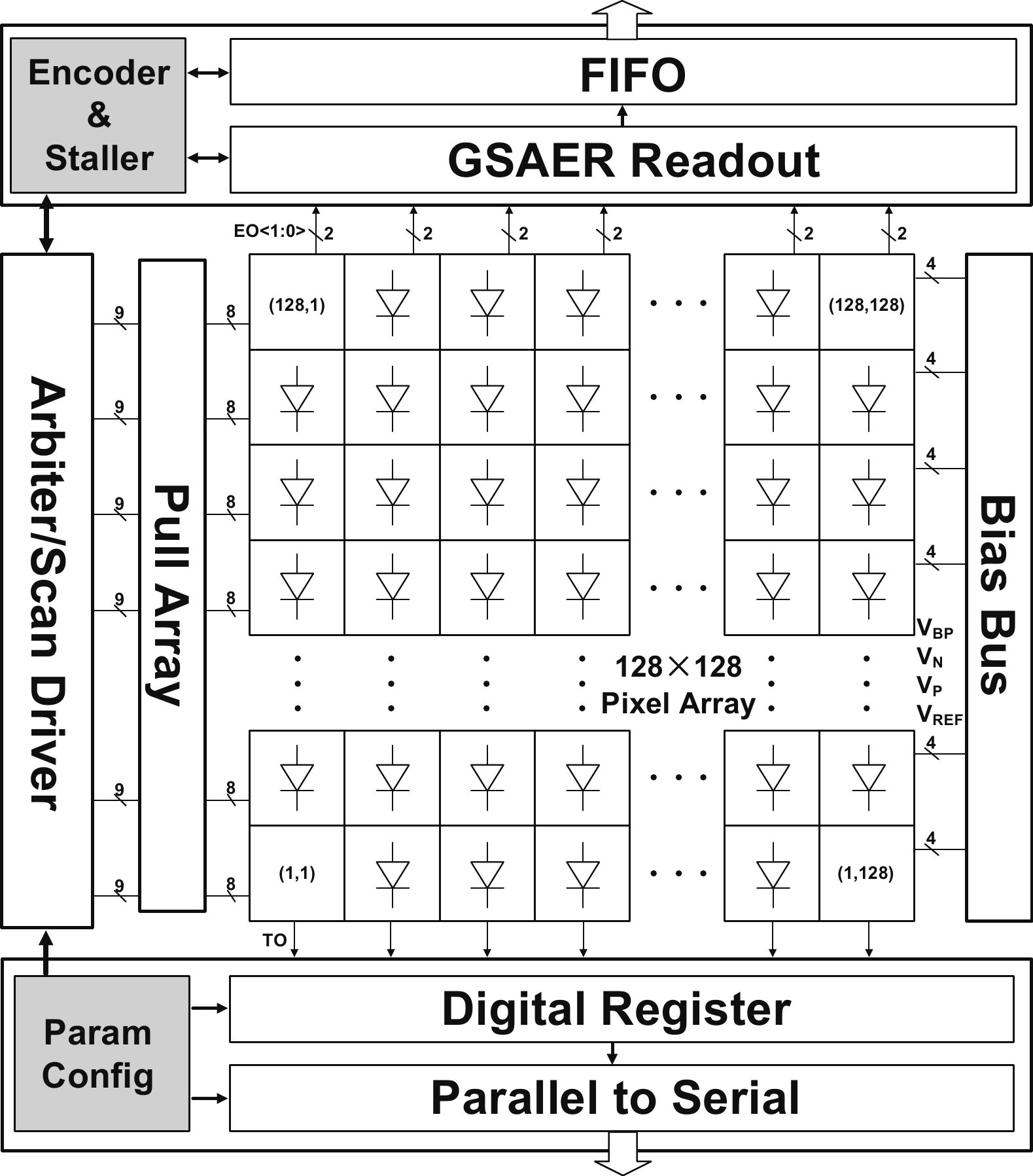

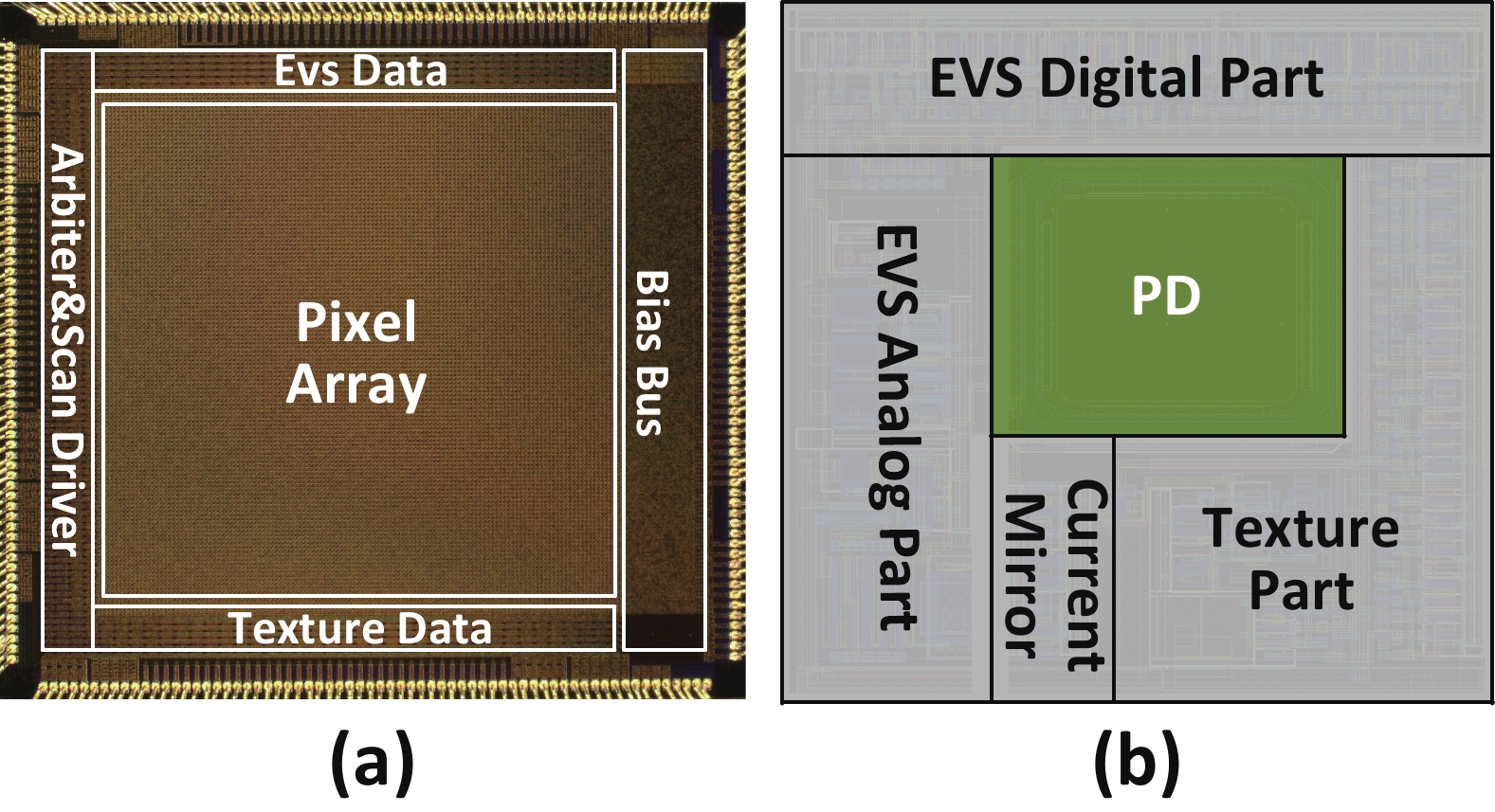

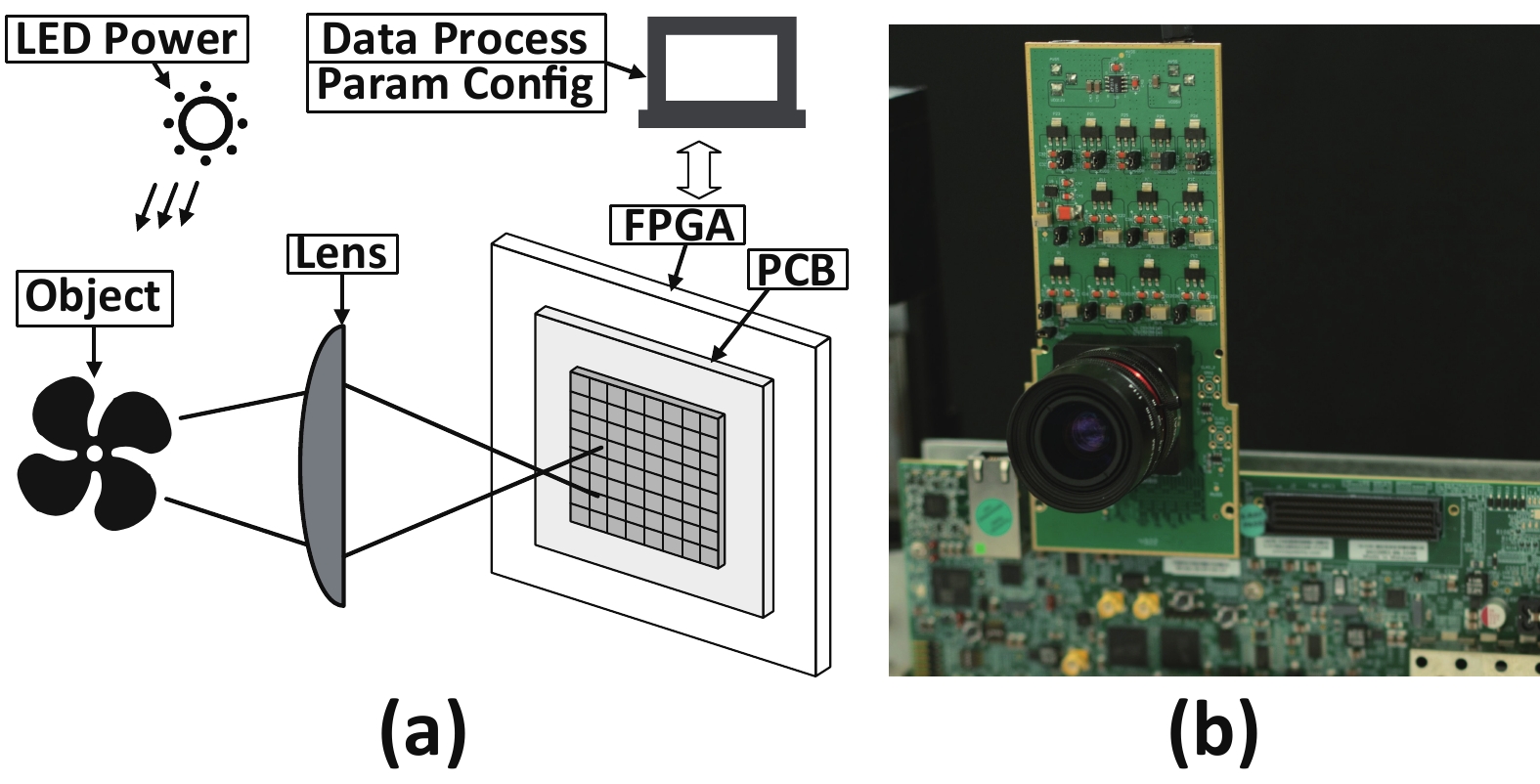

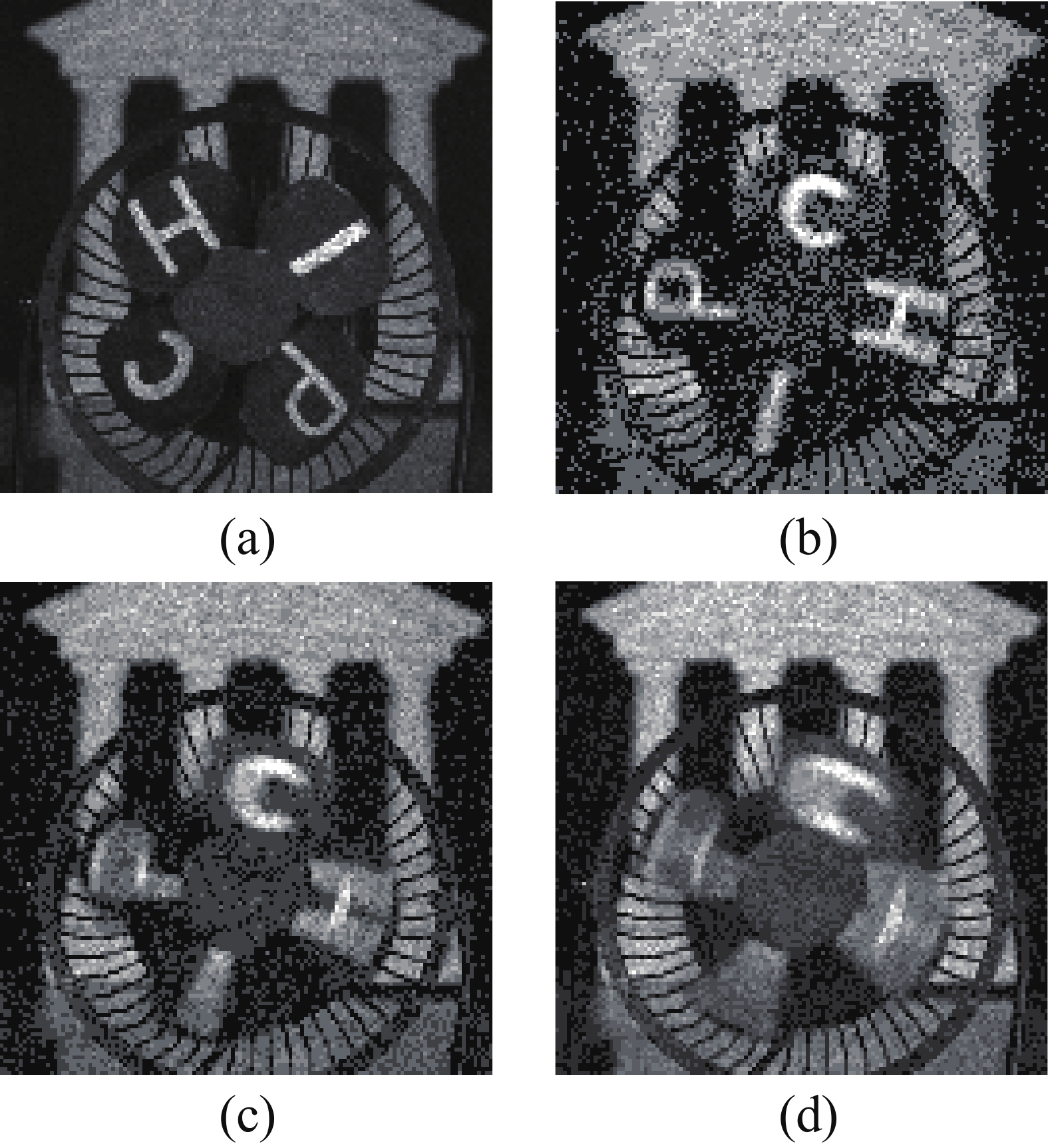

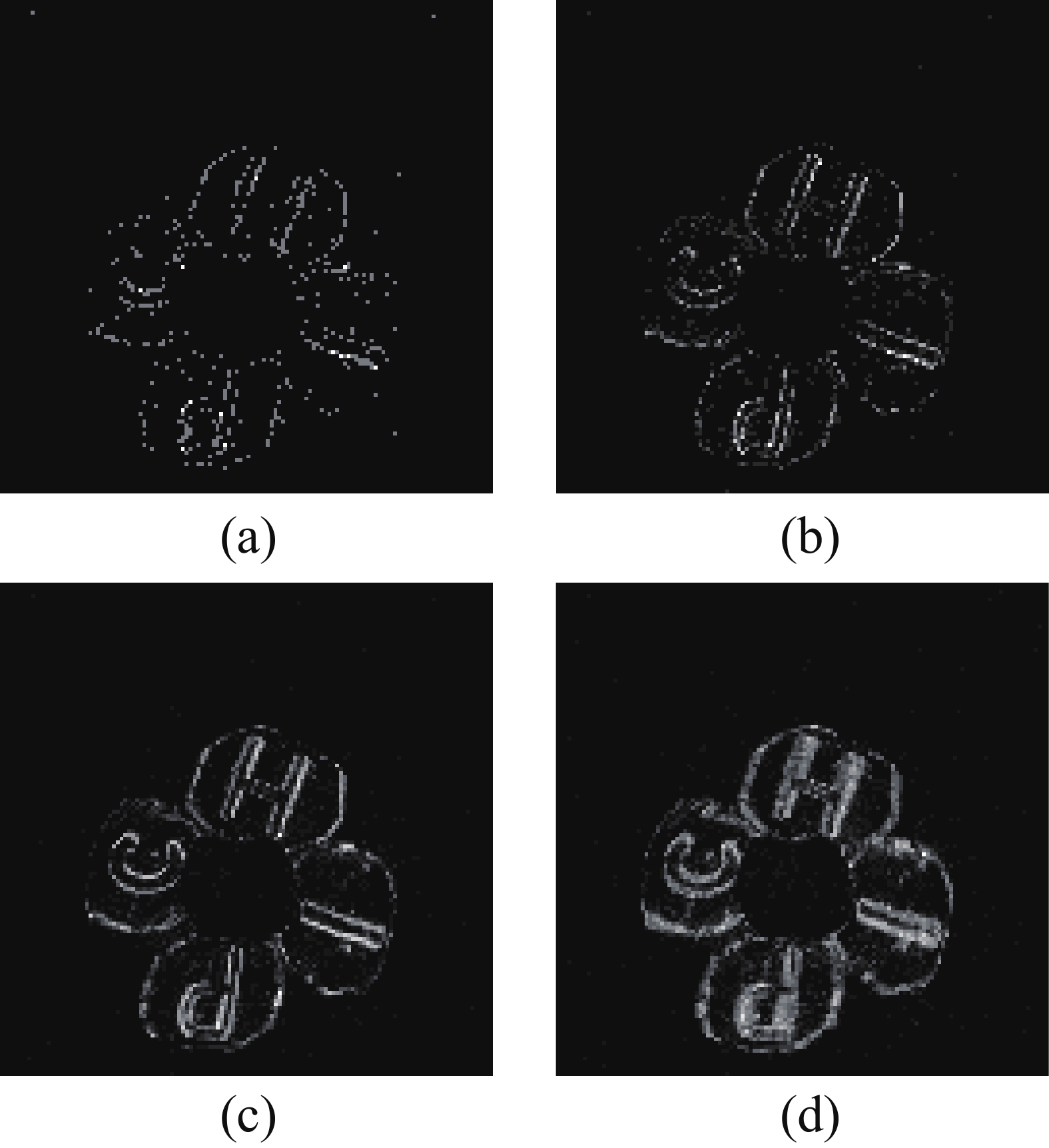

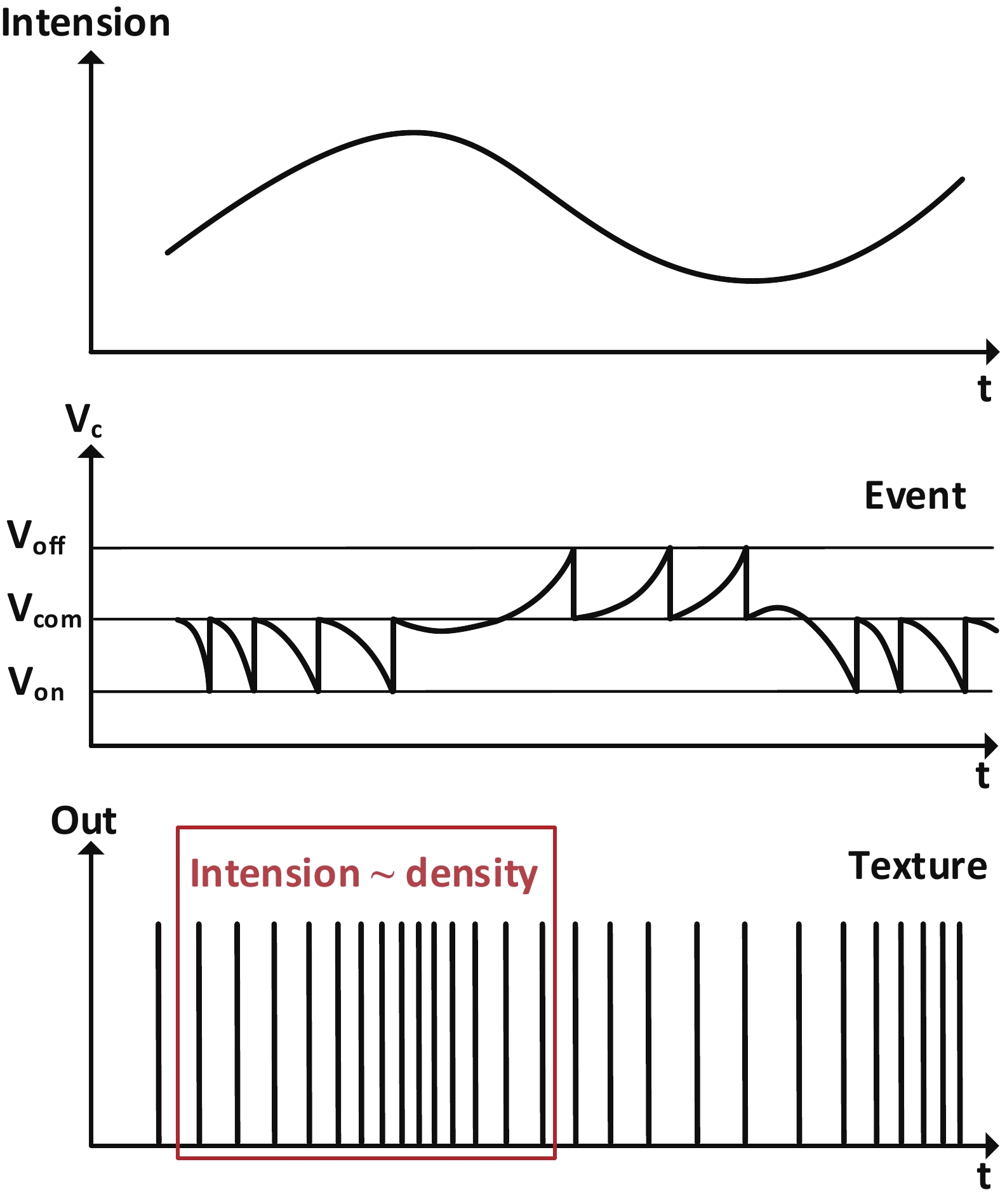

The event-based vision sensor (EVS), which generates efficient spiking data streams by detecting only motion, exemplifies neuromorphic vision approaches. However, its inherent lack of texture features limits its effectiveness in complex vision processing tasks, so supplementary visual information is needed. Yet, to date, no event-based hybrid vision solution has been developed that preserves the characteristics of complete spike data streams to support synchronous computation architectures based on spiking neural networks (SNNs). In this paper, we present a novel spike-based sensor with digitized pixels that integrates the event detection structure with a pulse frequency modulation (PFM) circuit. This design enables the simultaneous output of spiking data encoding both temporal changes and texture information. Fabricated in a 180 nm process, the proposed sensor achieves a resolution of 128 × 128, a maximum event rate of 960 Meps, a grayscale frame rate of 117.1 kfps, and a measured power consumption of 60.1 mW, making it suitable for high-speed, low-latency, edge SNN-based vision computing systems.
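To make the two spike channels concrete, here is a minimal behavioral sketch in Python. It assumes an ideal integrate-and-fire PFM branch (one texture spike per fixed quantum of integrated light, with reset by subtraction) and an idealized log-intensity contrast detector that emits an ON or OFF event and resets its reference when the change exceeds a threshold. The class `HybridPixel`, its parameters, and its units are hypothetical illustrations and do not describe the fabricated circuit; the fixed time step only loosely mirrors the synchronous SNN processing the abstract targets.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class HybridPixel:
    """Hypothetical behavioral model of one hybrid pixel (not the paper's circuit).

    All thresholds and units are assumptions chosen for illustration.
    """
    pfm_threshold: float = 1.0        # integrated intensity per texture spike (assumed units)
    contrast_threshold: float = 0.15  # log-intensity change that triggers an event (assumed)
    acc: float = 0.0                  # PFM integrator state
    log_ref: Optional[float] = None   # log-intensity stored at the last event

    def step(self, intensity: float, dt: float) -> Tuple[int, int]:
        """Advance by dt; return (texture_spikes, event) with event in {-1, 0, +1}.

        `intensity` must be positive because the event branch takes its logarithm.
        """
        # PFM branch: integrate light and emit one spike per threshold crossing.
        # Keeping the remainder (reset by subtraction) makes the spike rate
        # proportional to intensity, which is how PFM encodes texture.
        self.acc += intensity * dt
        spikes = int(self.acc // self.pfm_threshold)
        self.acc -= spikes * self.pfm_threshold

        # Event branch: compare the current log intensity with the stored
        # reference and fire ON (+1) or OFF (-1) when the change is large enough.
        log_i = math.log(intensity)
        if self.log_ref is None:
            self.log_ref = log_i  # first sample initializes the reference
        delta = log_i - self.log_ref
        event = 0
        if abs(delta) >= self.contrast_threshold:
            event = 1 if delta > 0 else -1
            self.log_ref = log_i  # reset the reference after firing
        return spikes, event


if __name__ == "__main__":
    px = HybridPixel()
    # Static scene for 8 steps, then the pixel brightens by a factor of 2.
    for intensity in [2.0] * 8 + [4.0] * 8:
        print(px.step(intensity, dt=0.125))
```

At constant intensity the sketch emits texture spikes at a steady rate and no events; doubling the intensity doubles the spike rate and produces a single ON event at the step. For scale, the reported 960 Meps maximum spread uniformly over 128 × 128 = 16,384 pixels is about 58.6 k events/s per pixel, and 117.1 kfps corresponds to a frame period of roughly 8.54 μs.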

Huanhui Zhang is pursuing a Ph.D. with the State Key Laboratory of Semiconductor Physics and Chip Technologies, Institute of Semiconductors, Chinese Academy of Sciences, Beijing, China. His research focuses on the design of spike-based image sensors and mixed-signal circuits.

Chi Zhang is pursuing a Ph.D. with the State Key Laboratory of Semiconductor Physics and Chip Technologies, Institute of Semiconductors, Chinese Academy of Sciences, Beijing, China. His research interests include software/hardware co-design and neural network deployment acceleration.

Xu Yang completed her postdoctoral fellowship training at the Institute of Semiconductors, Chinese Academy of Sciences, in 2023, where she is currently a Research Assistant. Her research interests include bio-inspired spiking vision chips, spiking neural network and image processing algorithms, and parallel image processor architectures.

Zhe Wang began postdoctoral research at the Institute of Semiconductors, Chinese Academy of Sciences, Beijing, China, in 2021 and became an Assistant Researcher there in 2023. His research interests include analog and mixed-signal integrated circuit design and SPAD image sensor design.

Cong Shi has been a Research Professor with the School of Microelectronics and Communication Engineering, Chongqing University, Chongqing, China, since 2019, where he leads a research group on brain-inspired AI chip design for high-speed visual processors and energy-efficient neuromorphic systems. He has authored or coauthored more than 50 research papers on computer vision and machine learning algorithms and on integrated circuit and system design.

Runjiang Dou is a Senior Engineer at the Institute of Semiconductors, Chinese Academy of Sciences, Beijing, China. His research interests include the design of vision systems based on intelligent hardware.

Shuangming Yu received the B.S. degree in electronic science and technology from Beijing University of Posts and Telecommunications, China, in 2010, and the Ph.D. degree in microelectronics and solid-state electronics from the Institute of Semiconductors, Chinese Academy of Sciences, China, in 2015. He is now an Associate Professor with the State Key Laboratory of Semiconductor Physics and Chip Technologies, Institute of Semiconductors, Chinese Academy of Sciences, Beijing, China. His current research interests include vision chip design and ultra-low-power circuit design.

Jian Liu has been a Full Professor in microelectronics and solid-state electronics at the State Key Laboratory of Semiconductor Physics and Chip Technologies (formerly State Key Laboratory of Superlattices and Microstructures), Institute of Semiconductors, Chinese Academy of Sciences, Beijing, China, since 2005. His research interests include semiconductor optoelectronic detectors, terahertz imagers, and metamaterials.

Nanjian Wu has been a Professor with the State Key Laboratory of Semiconductor Physics and Chip Technologies (formerly State Key Laboratory of Superlattices and Microstructures), Institute of Semiconductors, Chinese Academy of Sciences, Beijing, China, since 2000. His research covers mixed-signal VLSI and vision chip design.

Peng Feng is a Professor in Integrated Circuit Science and Engineering with the State Key Laboratory of Semiconductor Physics and Chip Technologies, Institute of Semiconductors, Chinese Academy of Sciences, Beijing, China. His research interests include ultra-low-power mixed-signal/RF integrated circuits and CMOS image sensors.

Liyuan Liu joined the State Key Laboratory of Semiconductor Physics and Chip Technologies (formerly State Key Laboratory of Superlattices and Microstructures), Institute of Semiconductors, Chinese Academy of Sciences, Beijing, China, as an Associate Professor in 2012 and became a Professor there in 2018. His research interests include mixed-signal IC design, CMOS image sensors, terahertz image sensors, and monolithic vision chip design.