Citation:

Longke Yan, Xin Zhao, Bohan Yang, Yongkun Wu, Guangnan Dai, Jiancong Li, Chi-Ying Tsui, Kwang-Ting Cheng, Yihan Zhang, Fengbin Tu. Robotic computing system and embodied AI evolution: an algorithm-hardware co-design perspective[J]. Journal of Semiconductors, 2025, 46(10): 101201. doi: 10.1088/1674-4926/25020034

L K Yan, X Zhao, B H Yang, Y K Wu, G N Dai, J C Li, C Y Tsui, K T Cheng, Y H Zhang, and F B Tu, Robotic computing system and embodied AI evolution: an algorithm-hardware co-design perspective[J]. J. Semicond., 2025, 46(10), 101201. doi: 10.1088/1674-4926/25020034

Robotic computing system and embodied AI evolution: an algorithm-hardware co-design perspective

DOI: 10.1088/1674-4926/25020034

CSTR: 32376.14.1674-4926.25020034

Abstract

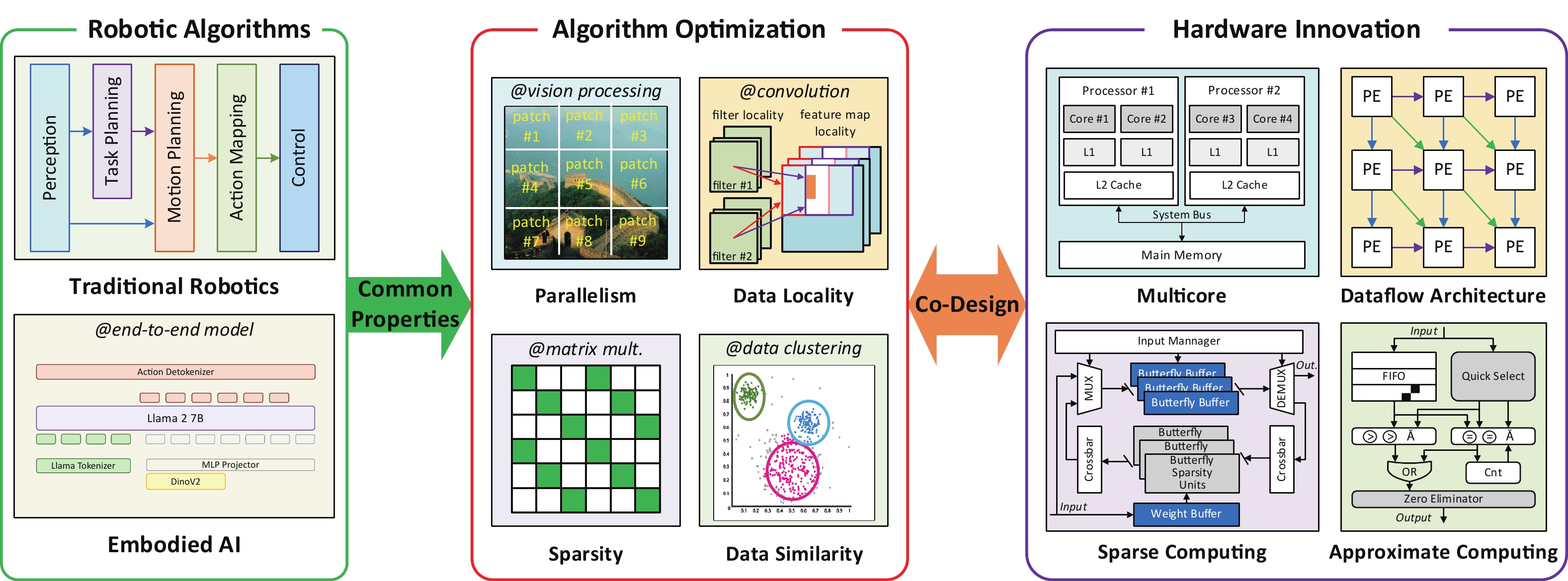

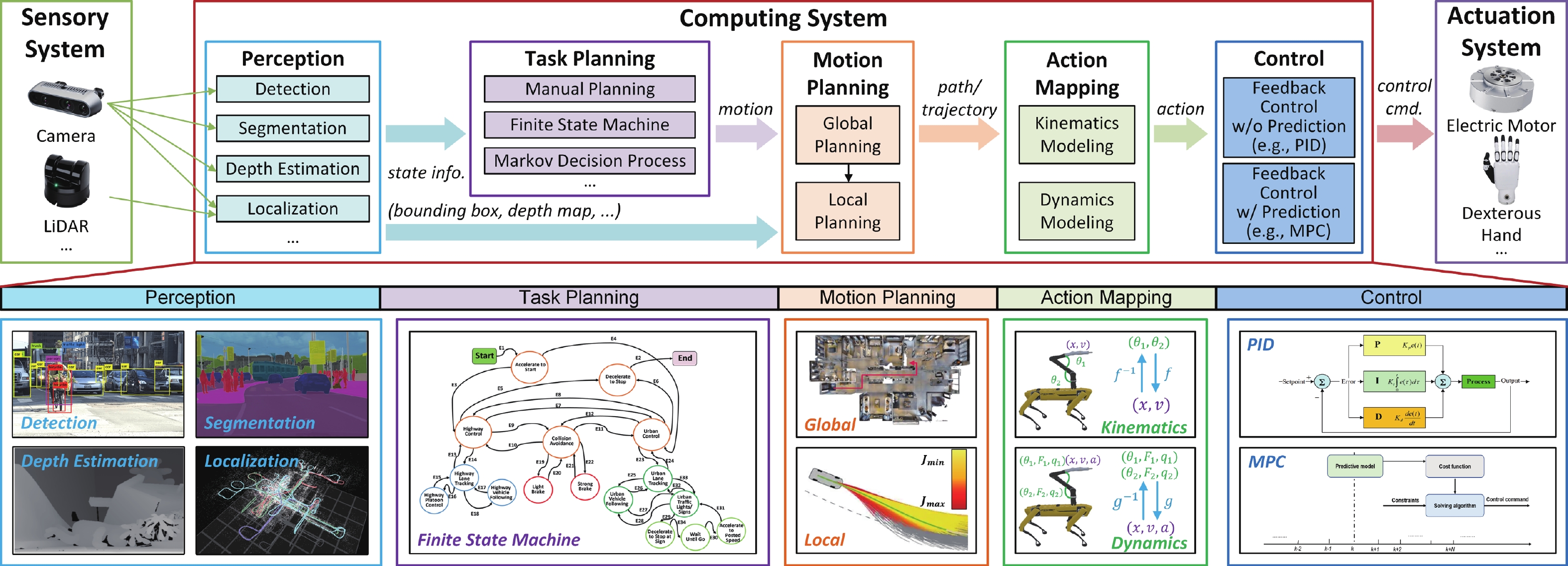

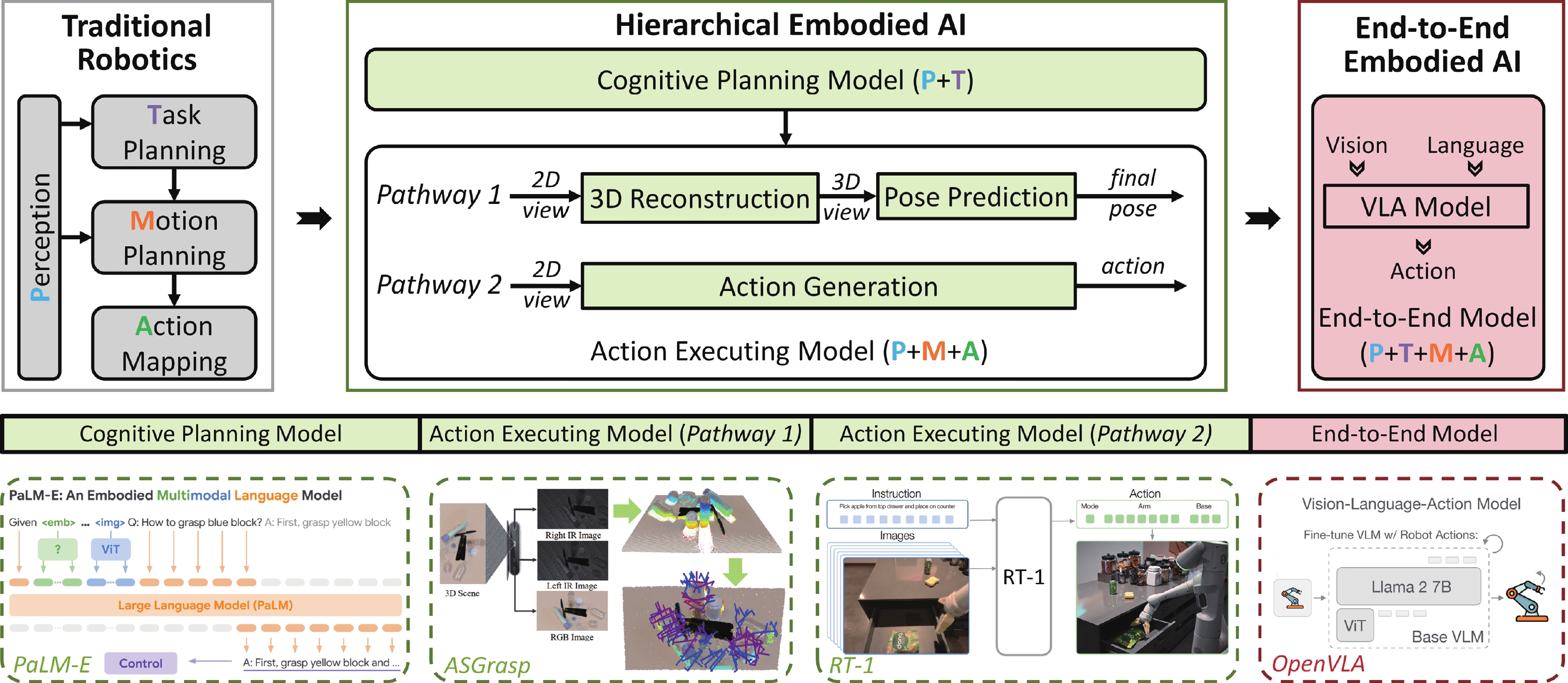

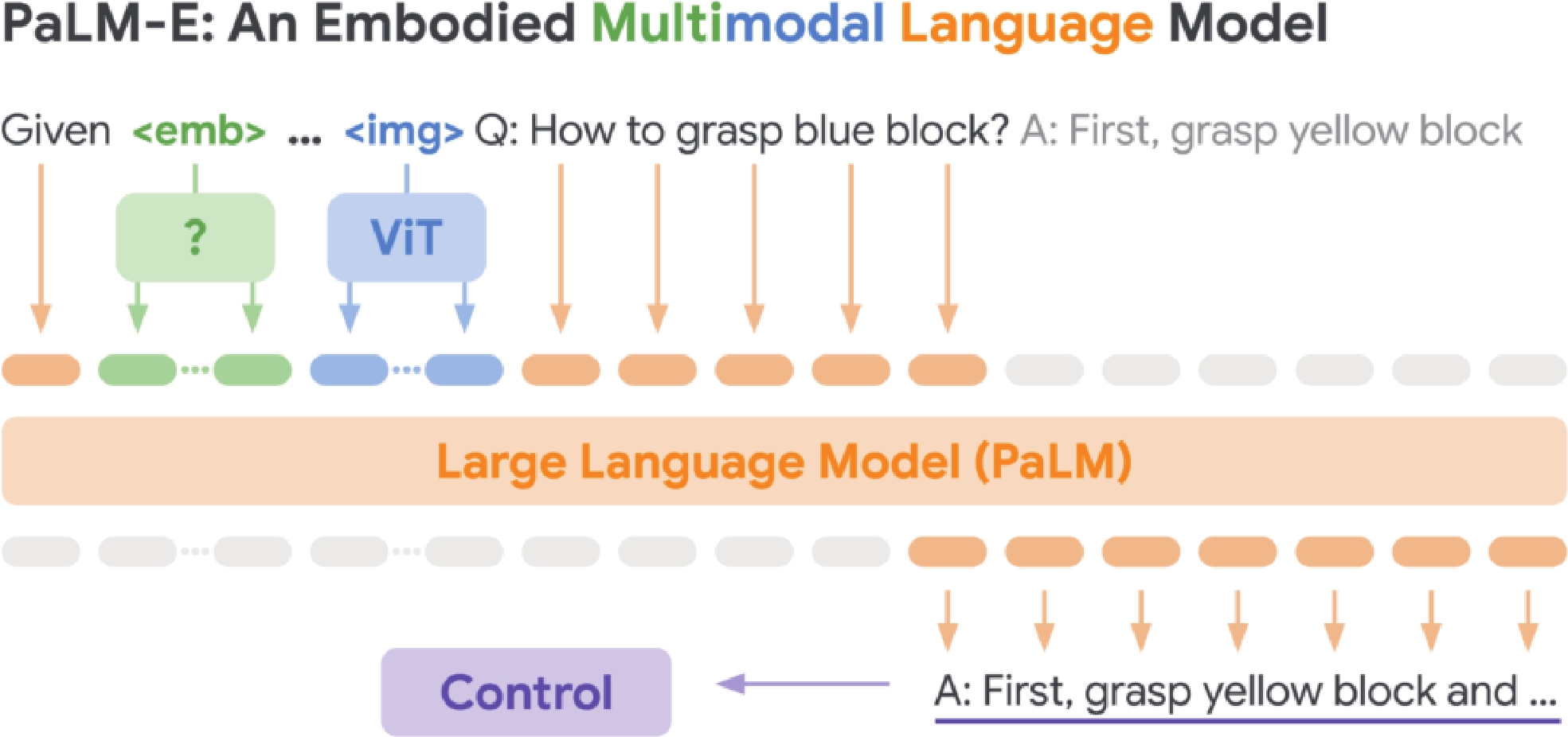

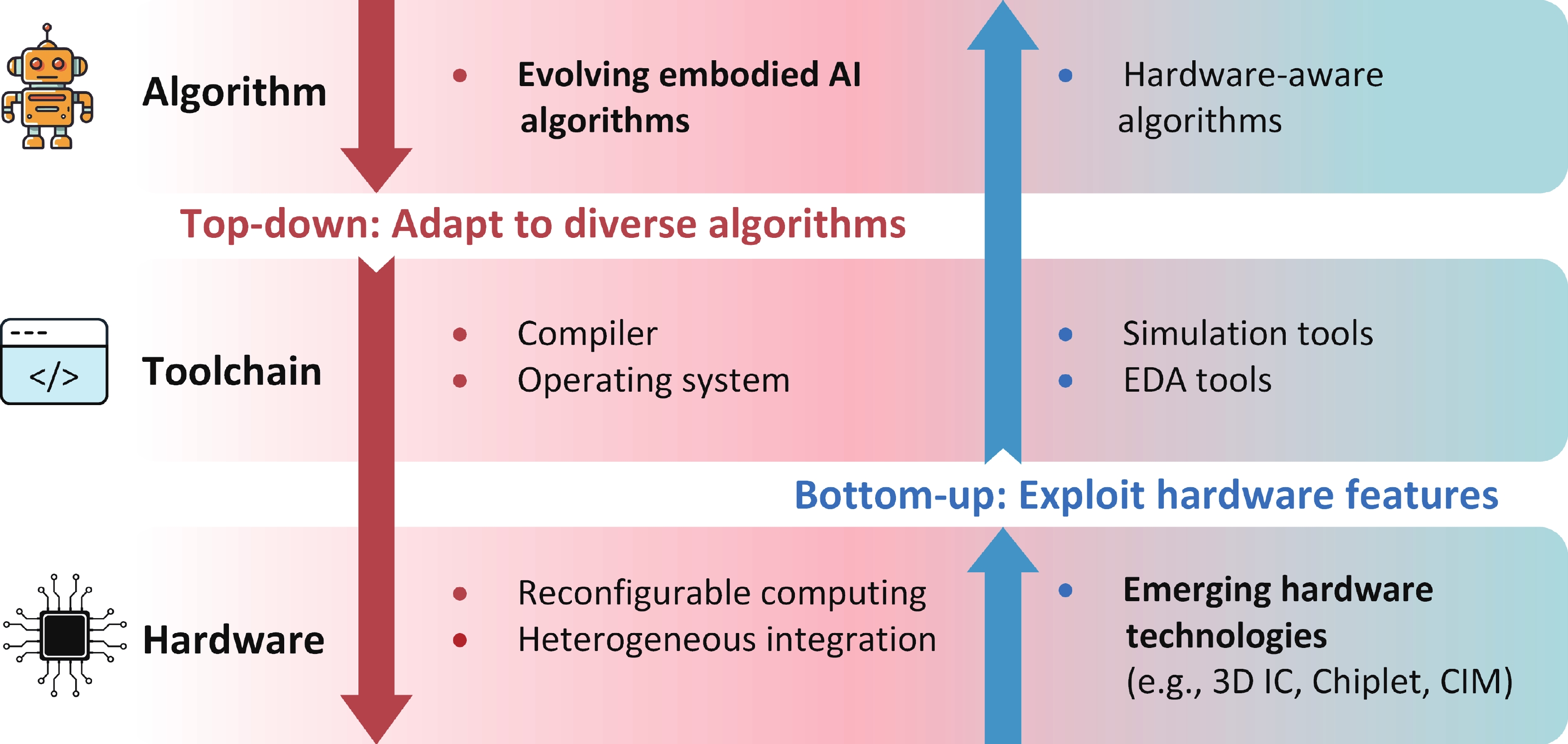

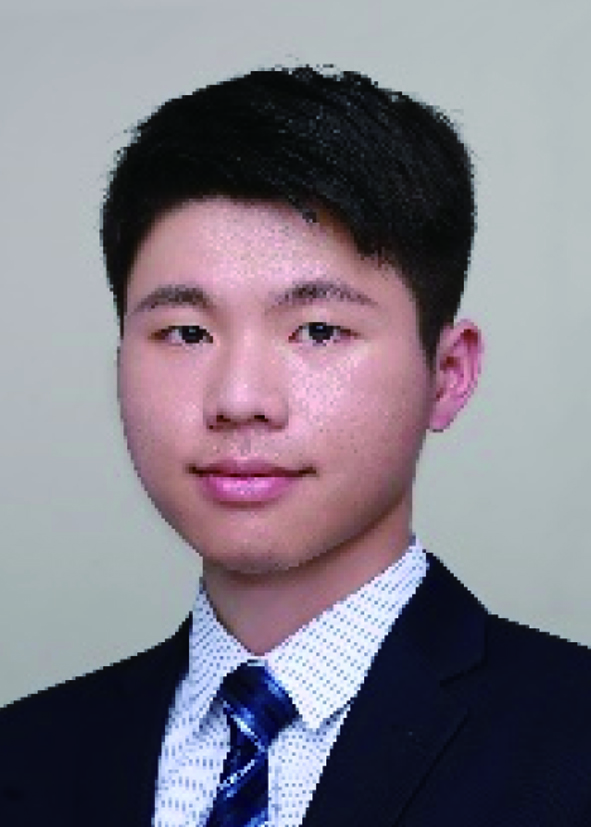

Robotic computing systems play an important role in enabling intelligent robotic tasks through intelligent algorithms and supporting hardware. In recent years, robotic algorithms have evolved along a roadmap from traditional robotics to hierarchical and end-to-end models. This algorithmic advancement poses a critical challenge in achieving balanced system-wide performance. Therefore, algorithm-hardware co-design has emerged as the primary methodology: it analyzes algorithm behaviors on hardware to identify common computational properties. These properties can motivate both algorithm optimization, to reduce computational complexity, and hardware innovation from architecture to circuit, for high performance and high energy efficiency. We then review recent works on robotic and embodied AI algorithms and computing hardware to demonstrate this algorithm-hardware co-design methodology. Finally, we discuss future research opportunities by answering two questions: (1) how to adapt the computing platforms to the rapid evolution of embodied AI algorithms, and (2) how to transform the potential of emerging hardware innovations into end-to-end inference improvements.

References

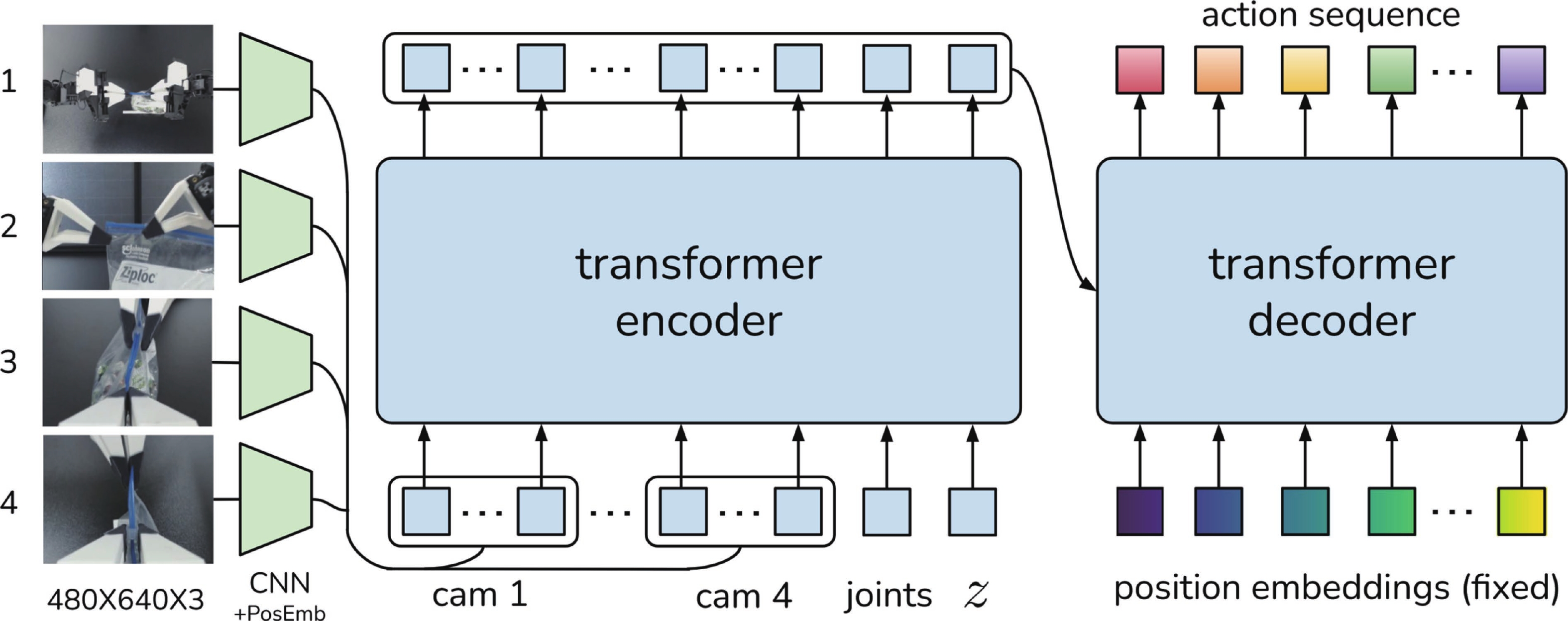

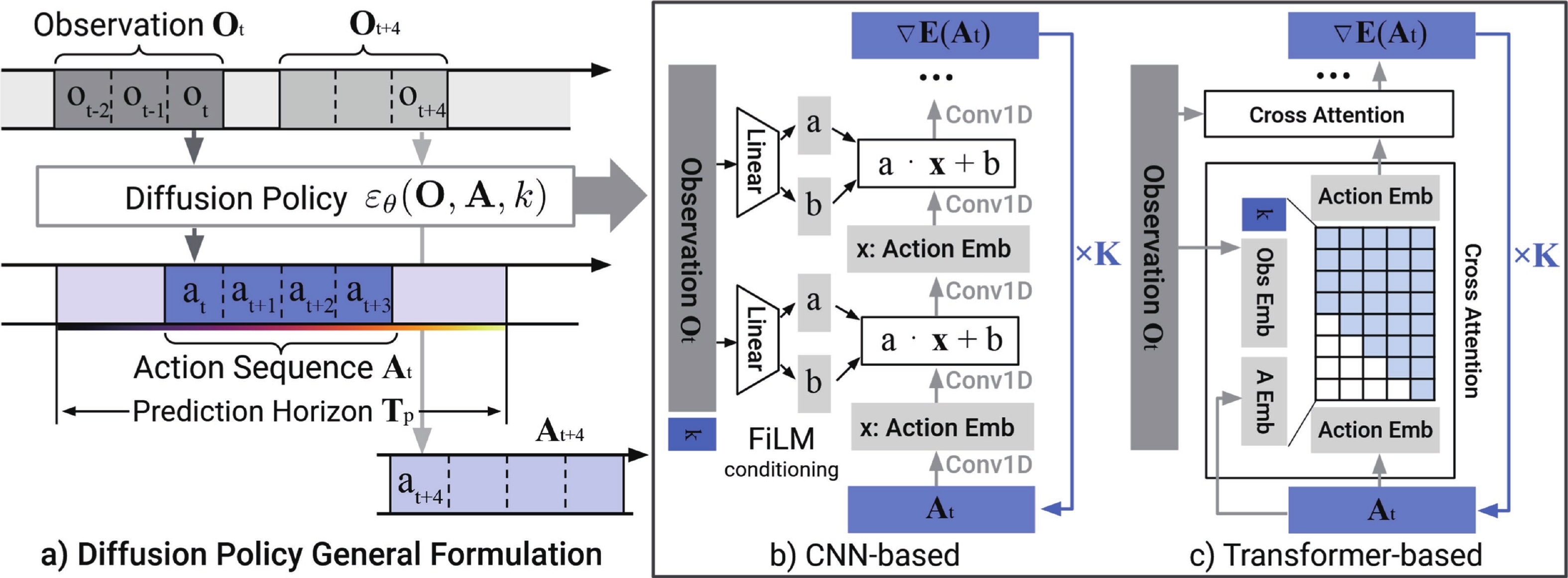

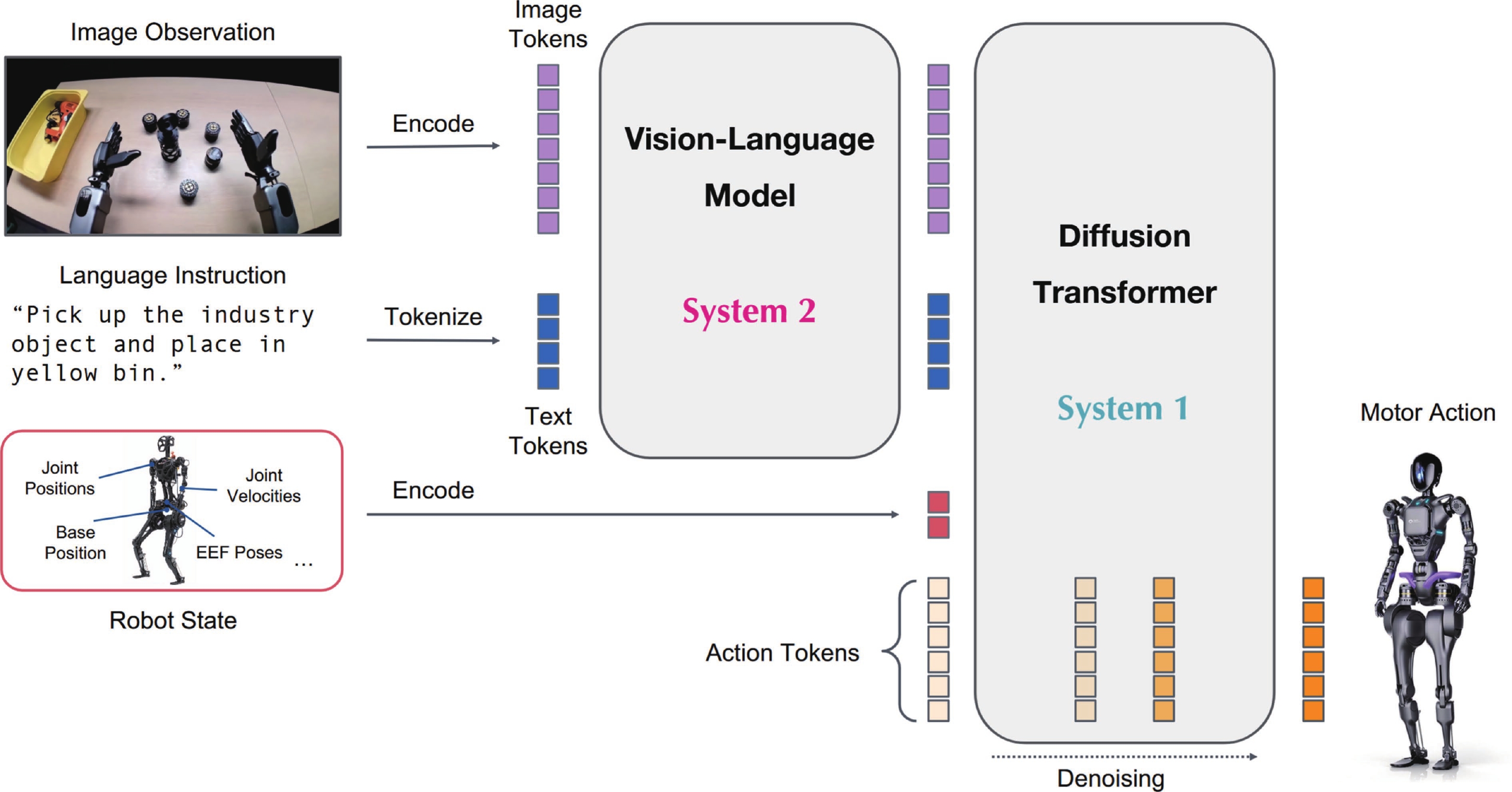

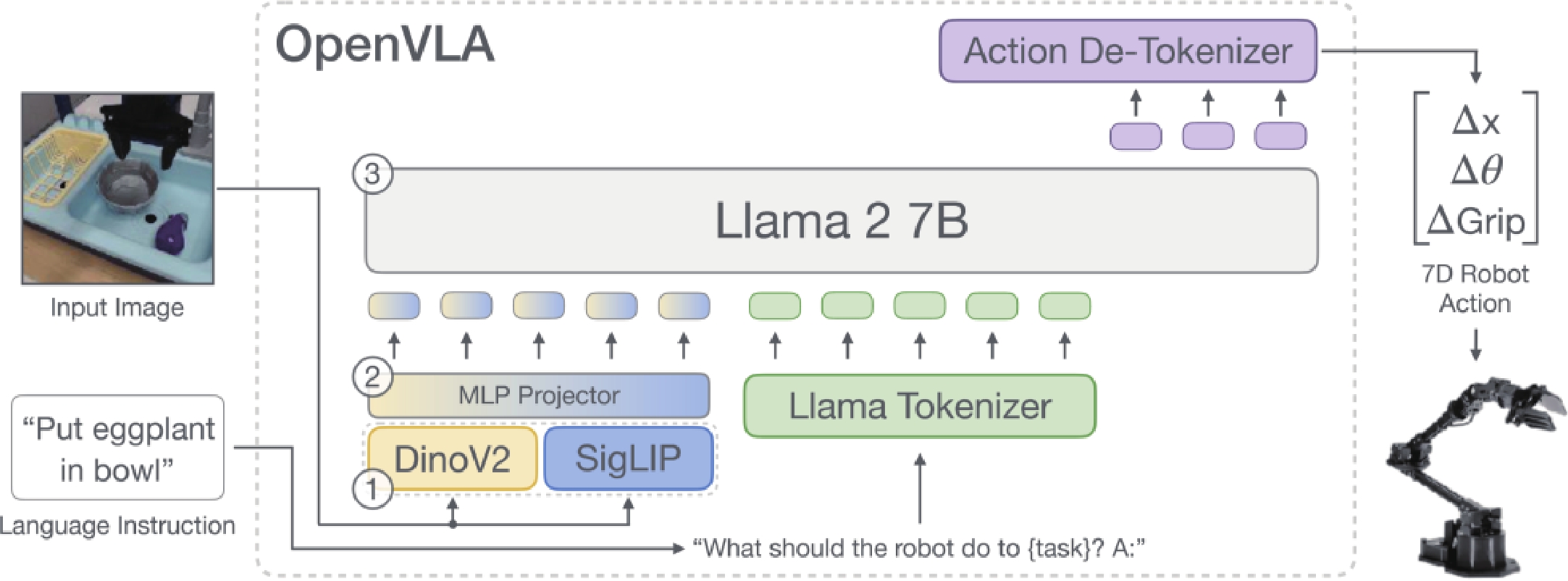

[1] Attanasio A, Scaglioni B, Momi E D, et al. Autonomy in surgical robotics. Annu Rev Control Rob Auton Syst, 2021, 4, 651 doi: 10.1146/annurev-control-062420-090543[2] Guizzo E. Universal robots introduces its strongest robotic arm yet. 2019[3] Wan S, Goudos S. Faster r-cnn for multi-class fruit detection using a robotic vision system. Comput Networks, 2020, 168, 107036 doi: 10.1016/j.comnet.2019.107036[4] LaValle S. Rapidly-exploring random trees : a new tool for path planning. Annu Res Rep, 1998[5] Hart P E, Nilsson N J, Raphael B. A formal basis for the heuristic determination of minimum cost paths. IEEE Trans Syst Sci Cybern, 1968, 4, 100 doi: 10.1109/TSSC.1968.300136[6] Fang HS, Wang C, Fang H, et al. Anygrasp: robust and efficient grasp perception in spatial and temporal domains. IEEE Trans Robot, 2023, 39, 39293 doi: 10.1109/TRO.2023.3281153[7] Zhao T Z, Kumar V, Levine S, et al. Learning fine-grained bimanual manipulation with low-cost hardware. arXiv, 2023[8] Chi C, Xu Z, Feng S, et al. Diffusion policy: visuomotor policy learning via action diffusion. arXiv, 2023[9] Vemprala S, Bonatti R, Bucker A, et al. ChatGPT for robotics: design principles and model abilities. arXiv, 2023[10] Driess D, Xia F, Sajjadi M S M, et al. PaLM-e: an embodied multimodal language model. arXiv, 2023[11] Liang Z, Mu Y, Ma H, et al. SkillDiffuser: interpretable hierarchical planning via skill abstractions in diffusion-based task execution. arXiv, 2024[12] Black K, Brown N, Driess D, et al. π0: a vision-language-action flow model for general robot control. arXiv, 2024[13] Figure. Helix: a vision-language-action model for generalist humanoid control. Figure, 2025[14] NVIDIA, Bjorck J, Castañeda F, et al. GR00T n1: an open foundation model for generalist humanoid robots. arXiv, 2025[15] Brohan A, Brown N, Carbajal J, et al. RT-2: vision-language-action models transfer web knowledge to robotic control. arXiv, 2023[16] Collaboration O X E, O’Neill A, Rehman A, et al. 
Open x-embodiment: robotic learning datasets and rt-x models. arXiv, 2023[17] Kim M J, Pertsch K, Karamcheti S, et al. OpenVLA: an open-source vision-language-action model. arXiv, 2024[18] Zhao Q, Lu Y, Kim M J, et al. CoT-vla: visual chain-of-thought reasoning for vision-language-action models. arXiv, 2025[19] Karaman S, Frazzoli E. Sampling-based algorithms for optimal motion planning. arXiv, 2011[20] Wan Z, Yu B, Li T Y, et al. A survey of fpga-based robotic computing. IEEE Circuits Syst Mag, 2021, 21, 48 doi: 10.1109/MCAS.2021.3071609[21] Bakhshalipour M, Gibbons P B. Agents of autonomy: a systematic study of robotics on modern hardware. Proc, ACM Meas, Anal, Comput, Syst, 2023, 4(1), 651 doi: 10.1145/3626774[22] Suleiman A, Zhang Z, Carlone L, et al. Navion: a 2-mw fully integrated real-time visual-inertial odometry accelerator for autonomous navigation of nano drones. IEEE J Solid-State Circuits, 2019, 54, 1106 doi: 10.1109/JSSC.2018.2886342[23] Wang Y, Qin Y, Deng D, et al. A 28nm 27.5tops/w approximate-computing-based transformer processor with asymptotic sparsity speculating and out-of-order computing. 2022 IEEE International Solid-State Circuits Conference (ISSCC), 2022, 1 doi: 10.1109/ISSCC42614.2022.9731686[24] Li Z, Dong Q, Saligane M, et al. 3.7 a 1920×1080 30fps 2.3tops/w stereo-depth processor for robust autonomous navigation. 2017 IEEE International Solid-State Circuits Conference (ISSCC), 2017, 62 doi: 10.1109/ISSCC.2017.7870261[25] Tu F, Wu Z, Wang Y, et al. A 28nm 15.59µj/token full-digital bitline-transpose cim-based sparse transformer accelerator with pipeline/parallel reconfigurable modes. 2022 IEEE International Solid-State Circuits Conference (ISSCC), 2022, 466 doi: 10.1109/ISSCC42614.2022.9731645[26] Tesla. Tesla’s optimus. Tesla[27] Agility Robotics. Digit supports several manufacturing specific workflows. Agility Robotics[28] Hmidani O, Ismaili Alaoui E M. A comprehensive survey of the r-cnn family for object detection. 
2022 5th International Conference on Advanced Communication Technologies and Networking (CommNet), 2022, 1 doi: 10.1109/CommNet56067.2022.9993862[29] Nazir A, Wani Mohd A. You only look once-object detection models: a review. 2023 10th International Conference on Computing for Sustainable Global Development (INDIACom), 2023, 1088[30] Lefebvre M, Moreau L, Dekimpe R, et al. A 0.2-to-3.6 tops/w programmable convolutional imager soc with in-sensor current-domain ternary-weighted mac operations for feature extraction and region-of-interest detection. 2021 IEEE international solid-state circuits conference (ISSCC), 2021, 118 doi: 10.1109/ISSCC42613.2021.9365839[31] Gong Y, Zhang T, Guo H, et al. 22.7 dl-vopu: an energy-efficient domain-specific deep-learning-based visual object processing unit supporting multi-scale semantic feature extraction for mobile object detection/tracking applications. 2023 IEEE international solid-state circuits conference (ISSCC), 2023, 1 doi: 10.1109/ISSCC42615.2023.10067704[32] Ronneberger O, Fischer P, Brox T. U-net: convolutional networks for biomedical image segmentation. Medical image computing and computer-assisted intervention–MICCAI 2015: 18th international conference, 2015, 234 doi: 10.1007/978-3-319-24574-4_28[33] Chen L C, Papandreou G, Kokkinos I, et al. Deeplab: semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE Trans Pattern Anal Mach Intell, 2017, 40, 834 doi: 10.1109/TPAMI.2017.2699184[34] Vohra J, Gupta A, Alioto M. 6.3 imager with in-sensor event detection and morphological transformations with 2.9 pj/pixel× frame object segmentation fom for always-on surveillance in 40nm. 2024 IEEE international solid-state circuits conference (ISSCC), 2024, 104 doi: 10.1109/ISSCC49657.2024.10454391[35] Guo A, Si X, Chen X, et al. A 28nm 64-kb 31.6-tflops/w digital-domain floating-point-computing-unit and double-bit 6t-sram computing-in-memory macro for floating-point cnns. 
2023 IEEE international solid-state circuits conference (ISSCC), 2023, 128 doi: 10.1109/ISSCC42615.2023.10067260[36] Yoon S, Park S K, Kang S, et al. Fast correlation-based stereo matching with the reduction of systematic errors. Pattern Recognit Lett, 2005, 26, 2221 doi: 10.1016/j.patrec.2005.03.037[37] Hirschmuller H. Accurate and efficient stereo processing by semi-global matching and mutual information. 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), 2005, 807 doi: 10.1109/CVPR.2005.56[38] Sekhar V C, Bora S, Das M, et al. Design and implementation of blind assistance system using real time stereo vision algorithms. 2016 29th International Conference on VLSI Design and 2016 15th International Conference on Embedded Systems (VLSID), 2016, 421 doi: 10.1109/VLSID.2016.11[39] Li M, Mourikis A I. High-precision, consistent ekf-based visual-inertial odometry. Int, J Rob, Res, 2013, 32, 690 doi: 10.1177/0278364913481251[40] Liu Q, Hao Y, Liu W, et al. An energy efficient and runtime reconfigurable accelerator for robotic localization. IEEE Trans Comput, 2023, 72, 1943 doi: 10.1109/TC.2022.3230899[41] Gan Y, Bo Y, Tian B, et al. Eudoxus: characterizing and accelerating localization in autonomous machines industry track paper. 2021 IEEE International Symposium on High-Performance Computer Architecture (HPCA), 2021, 827 doi: 10.1109/HPCA51647.2021.00074[42] Liu W, Yu B, Gan Y, et al. Archytas: a framework for synthesizing and dynamically optimizing accelerators for robotic localization. MICRO-54: 54th Annual IEEE/ACM International Symposium on Microarchitecture, 2021, 479 doi: 10.1145/3466752.3480077[43] Paden B, Čáp M, Yong S Z, et al. A survey of motion planning and control techniques for self-driving urban vehicles. IEEE Trans Intell Veh, 2016, 1, 33 doi: 10.1109/TIV.2016.2578706[44] Urmson C, Anhalt J, Bagnell D, et al. Tartan racing: a multi-modal approach to the darpa urban challenge. 
DARPA Grand Challenge Tech Report, 2007[45] Liu S, Zheng K, Zhao L, et al. A driving intention prediction method based on hidden markov model for autonomous driving. arXiv, 2019[46] Talpes E, Sarma D D, Venkataramanan G, et al. Compute solution for tesla’s full self-driving computer. IEEE Micro, 2020, 40, 25 doi: 10.1109/MM.2020.2975764[47] González D, Pérez J, Milanés V, et al. A review of motion planning techniques for automated vehicles. IEEE Trans Intell Transp Syst, 2016, 17, 1135 doi: 10.1109/TITS.2015.2498841[48] Dijkstra E W. A note on two problems in connexion with graphs. Numer, Math, 1959, 1, 269 doi: 10.1007/BF01386390[49] Koppen M. The curse of dimensionality. Proceedings of the 5th Online World Conference on Soft Computing in Industrial Applications, 2000, 4[50] Kavraki L E, Svestka P, Latombe J C, et al. Probabilistic roadmaps for path planning in high-dimensional configuration spaces. IEEE Trans Robot Autom, 1996, 12, 566 doi: 10.1109/70.508439[51] Bakhshalipour M, Ehsani S B, Qadri M, et al. RACOD: algorithm/hardware co-design for mobile robot path planning. Proceedings of the 49th Annual International Symposium on Computer Architecture, 2022, 597 doi: 10.1145/3470496.3527383[52] Lian S, Han Y, Chen X, et al. Dadu-p: a scalable accelerator for robot motion planning in a dynamic environment. 2018 55th ACM/ESDA/IEEE Design Automation Conference (DAC), 2018, 1 doi: 10.1145/3195970.3196020[53] Chung C, Yang C H. A 1.5-μj/task path-planning processor for 2-d/3-d autonomous navigation of microrobots. IEEE J Solid-State Circuits, 2021, 56, 112 doi: 10.1109/JSSC.2020.3037138[54] Gasparetto A, Boscariol P, Lanzutti A, et al. Path planning and trajectory planning algorithms: a general overview. Motion and Operation Planning of Robotic Systems: Background and Practical Approaches, 2015, 3 doi: 10.1007/978-3-319-14705-5_1[55] Betts J T. Survey of numerical methods for trajectory optimization. 
J Guid Control Dyn, 1998, 21, 193 doi: 10.2514/2.4231[56] Boyd S, Parikh N, Chu E, et al. Distributed optimization and statistical learning via the alternating direction method of multipliers. Found Trends® Mach Learn, 2011, 3, 1 doi: 10.1561/2200000016[57] Zhao Z, Cheng S, Ding Y, et al. A survey of optimization-based task and motion planning: from classical to learning approaches. IEEE/ASME Trans Mechatronics, 2024, 1 doi: 10.1109/TMECH.2024.3452509[58] Chrétien B, Escande A, Kheddar A. GPU robot motion planning using semi-infinite nonlinear programming. IEEE Trans Parallel Distrib Syst, 2016, 27, 2926 doi: 10.1109/TPDS.2016.2521373[59] Hao Y, Gan Y, Yu B, et al. BLITZCRANK: factor graph accelerator for motion planning. 2023 60th ACM/IEEE Design Automation Conference (DAC), 2023, 1 doi: 10.1109/DAC56929.2023.10247780[60] Hao Y, Gan Y, Yu B, et al. ORIANNA: an accelerator generation framework for optimization-based robotic applications. Proceedings of the 29th ACM International Conference on Architectural Support for Programming Languages and Operating Systems, 2024, 2, 813 doi: 10.1145/3620665.3640379[61] Aristidou A, Lasenby J, Chrysanthou Y, et al. Inverse kinematics techniques in computer graphics: a survey. Comput Graphics Forum, 2018, 37, 35 doi: 10.1111/cgf.13310[62] Tkachenko O, Song K T. Hardware accelerated inverse kinematics for low power surgical manipulators. 2020 International Conference on Advanced Robotics and Intelligent Systems (ARIS), 2020, 1 doi: 10.1109/ARIS50834.2020.9205769[63] Lian S, Han Y, Wang Y, et al. Dadu: accelerating inverse kinematics for high-dof robots. Proceedings of the 54th Annual Design Automation Conference 2017, 2017, 1 doi: 10.1145/3061639.306222[64] Plancher B, Neuman S M, Ghosal R, et al. GRiD: gpu-accelerated rigid body dynamics with analytical gradients. 2022 International Conference on Robotics and Automation (ICRA), 2022, 6253 doi: 10.1109/ICRA46639.2022.9812384[65] Neuman S M, Koolen T, Drean J, et al. 
Benchmarking and workload analysis of robot dynamics algorithms. 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2019, 5235 doi: 10.1109/IROS40897.2019.8967694[66] Neuman S M, Plancher B, Bourgeat T, et al. Robomorphic computing: a design methodology for domain-specific accelerators parameterized by robot morphology. Proceedings of the 26th ACM International Conference on Architectural Support for Programming Languages and Operating Systems, 2021, 674 doi: 10.1145/3445814.3446746[67] Neuman S M, Ghosal R, Bourgeat T, et al. RoboShape: using topology patterns to scalably and flexibly deploy accelerators across robots. Proceedings of the 50th Annual International Symposium on Computer Architecture, 2023, 1 doi: 10.1145/3579371.3589104[68] Yang Y, Chen X, Han Y. Dadu-rbd: robot rigid body dynamics accelerator with multifunctional pipelines. Proceedings of the 56th Annual IEEE/ACM International Symposium on Microarchitecture, 2023, 297 doi: 10.1145/3613424.3614298[69] Liu W, Hua M, Deng Z, et al. A systematic survey of control techniques and applications in connected and automated vehicles. IEEE Internet Things J, 2023, 10, 21892 doi: 10.1109/JIOT.2023.3307002[70] Moro F L, Sentis L. Whole-body control of humanoid robots. Humanoid Robotics: A Reference, 2019, 1161[71] Sentis L, Khatib O. Synthesis of whole-body behaviors through hierarchical control of behavioral primitives. Int J Humanoid Rob, 2005, 02, 505 doi: 10.1142/S0219843605000594[72] Dietrich A, Ott C, Albu-Schäffer A. An overview of null space projections for redundant, torque-controlled robots. Int J Rob Res, 2015, 34, 1385 (in Chinese) doi: 10.1177/0278364914566516[73] Dario Bellicoso C, Jenelten F, Fankhauser P, et al. Dynamic locomotion and whole-body control for quadrupedal robots. 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2017, 3359 doi: 10.1109/IROS.2017.8206174[74] Sleiman J P, Farshidian F, Minniti M V, et al. 
A unified mpc framework for whole-body dynamic locomotion and manipulation. IEEE Robot Autom Lett, 2021, 6, 4688 doi: 10.1109/LRA.2021.3068908[75] Ngo H Q T, Duc Nguven H, Truong Q V. A design of pid controller using fpga-realization for motion control systems. 2020 International Conference on Advanced Computing and Applications (ACOMP), 2020, 150 doi: 10.1109/ACOMP50827.2020.00030[76] Li Y, Li S E, Jia X, et al. FPGA accelerated model predictive control for autonomous driving. J Intell Connected Veh, 2022, 5, 63 doi: 10.1108/JICV-03-2021-0002[77] Lin I T, Fu Z S, Chen W C, et al. 2.5 a 28nm 142mw motion-control soc for autonomous mobile robots. 2023 IEEE International Solid-State Circuits Conference (ISSCC), 2023, 1 doi: 10.1109/ISSCC42615.2023.10067700[78] Huang J, Yong S, Ma X, et al. An embodied generalist agent in 3d world. arXiv, 2023[79] Kim M J, Finn C, Liang P. Fine-tuning vision-language-action models: optimizing speed and success. arXiv, 2025[80] Huang W, Xia F, Xiao T, et al. Inner monologue: embodied reasoning through planning with language models. arXiv, 2022[81] Chowdhery A, Narang S, Devlin J, et al. PaLM: scaling language modeling with pathways. arXiv, 2022[82] Dehghani M, Djolonga J, Mustafa B, et al. Scaling vision transformers to 22 billion parameters. arXiv, 2023[83] Samavati T, Soryani M. Deep learning-based 3d reconstruction: a survey. Artif Intell Rev, 2023, 56, 9175 doi: 10.1007/s10462-023-10399-2[84] Dai Q, Zhu Y, Geng Y, et al. Graspnerf: multiview-based 6-dof grasp detection for transparent and specular objects using generalizable nerf. 2023 IEEE international conference on robotics and automation (ICRA), 2023, 1757 doi: 10.1109/ICRA48891.2023.10160842[85] Shi J, A Y, Jin Y, et al. ASGrasp: generalizable transparent object reconstruction and grasping from rgb-d active stereo camera. arXiv, 2024[86] Shi J, Yong A, Jin Y, et al. 
Asgrasp: generalizable transparent object reconstruction and 6-dof grasp detection from rgb-d active stereo camera. 2024 IEEE international conference on robotics and automation (ICRA), 2024, 5441 doi: 10.1109/ICRA57147.2024.10611152[87] Qi C R, Su H, Mo K, et al. PointNet: deep learning on point sets for 3d classification and segmentation. arXiv, 2017[88] Zhou L, Wang H, Zhang Z, et al. You only scan once: a dynamic scene reconstruction pipeline for 6-dof robotic grasping of novel objects. 2024 IEEE international conference on robotics and automation (ICRA), 2024, 13891 doi: 10.1109/ICRA57147.2024.10611371[89] Zheng Y, Chen X, Zheng Y, et al. GaussianGrasper: 3d language gaussian splatting for open-vocabulary robotic grasping. arXiv prepr arXiv: 2403, 2024, 9(9), 7827 doi: 10.1109/LRA.2024.3432348[90] Ze Y, Yan G, Wu Y H, et al. Gnfactor: multi-task real robot learning with generalizable neural feature fields. Conference on robot learning, 2023, 284[91] Huang S, Chen L, Zhou P, et al. EnerVerse: envisioning embodied future space for robotics manipulation. arXiv, 2025[92] Wang C, Fang H S, Gou M, et al. Graspness discovery in clutters for fast and accurate grasp detection. Proceedings of the IEEE/CVF international conference on computer vision, 2021, 15964 doi: 10.1109/ICCV48922.2021.01566[93] Brohan A, Brown N, Carbajal J, et al. RT-1: robotics transformer for real-world control at scale. arXiv, 2023[94] He K, Zhang X, Ren S, et al. Deep residual learning for image recognition. 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016, 770 doi: 10.1109/CVPR.2016.90[95] Du Y, Yang S, Dai B, et al. Learning universal policies via text-guided video generation. Adv Neural Inf Process Syst, 2023, 36, 9156[96] Ko P C, Mao J, Du Y, et al. Learning to act from actionless videos through dense correspondences. arXiv, 2023[97] Liu S, Wu L, Li B, et al. RDT-1b: a diffusion foundation model for bimanual manipulation. 
arXiv, 2024[98] Bu Q, Cai J, Chen L, et al. AgiBot world colosseo: a large-scale manipulation platform for scalable and intelligent embodied systems. arXiv, 2025[99] Karamcheti S, Nair S, Balakrishna A, et al. Prismatic vlms: investigating the design space of visually-conditioned language models. arXiv, 2024[100] Tambe T, Zhang J, Hooper C, et al. 22.9 a 12nm 18.1tflops/w sparse transformer processor with entropy-based early exit, mixed-precision predication and fine-grained power management. 2023 IEEE International Solid-State Circuits Conference (ISSCC), 2023, 342 doi: 10.1109/ISSCC42615.2023.10067817[101] Kim S, Kim S, Jo W, et al. 20.5 c-transformer: a 2.6-18.1μj/token homogeneous dnn-transformer/spiking-transformer processor with big-little network and implicit weight generation for large language models. 2024 IEEE International Solid-State Circuits Conference (ISSCC), 2024, 368 doi: 10.1109/ISSCC49657.2024.10454330[102] Tu F, Wu Z, Wang Y, et al. 16.1 muitcim: a 28nm 2.24 μJ/token attention-token-bit hybrid sparse digital cim-based accelerator for multimodal transformers. 2023 IEEE International Solid-State Circuits Conference (ISSCC), 2023, 248 doi: 10.1109/ISSCC42615.2023.10067842[103] Guo A, Chen X, Dong F, et al. 34.3 a 22nm 64kb lightning-like hybrid computing-in-memory macro with a compressed adder tree and analog-storage quantizers for transformer and cnns. 2024 IEEE International Solid-State Circuits Conference (ISSCC), 2024, 570 doi: 10.1109/ISSCC49657.2024.10454278[104] Im D, Park G, Li Z, et al. DSPU: a 281.6 mw real-time depth signal processing unit for deep learning-based dense rgb-d data acquisition with depth fusion and 3d bounding box extraction in mobile platforms. 2022 IEEE international solid-state circuits conference (ISSCC), 2022, 510 doi: 10.1109/ISSCC42614.2022.9731699[105] Sun W, Feng X, Tang C, et al. A 28nm 2d/3d unified sparse convolution accelerator with block-wise neighbor searcher for large-scaled voxel-based point cloud network. 
2023 IEEE international solid-state circuits conference (ISSCC), 2023, 328 doi: 10.1109/ISSCC42615.2023.10067644[106] Jung J, Kim S, Seo B, et al. 20.6 lspu: a fully integrated real-time lidar-slam soc with point-neural-network segmentation and multi-level knn acceleration. 2024 IEEE International Solid-State Circuits Conference (ISSCC), 2024, 370 doi: 10.1109/ISSCC49657.2024.10454374[107] Han D, Ryu J, Kim S, et al. 2.7 metavrain: a 133mw real-time hyper-realistic 3d-nerf processor with 1d-2d hybrid-neural engines for metaverse on mobile devices. 2023 IEEE international solid-state circuits conference (ISSCC), 2023, 50 doi: 10.1109/ISSCC42615.2023.10067447[108] Ryu J, Kwon H, Park W, et al. 20.7 neugpu: a 18.5 mj/iter neural-graphics processing unit for instant-modeling and real-time rendering with segmented-hashing architecture. 2024 IEEE international solid-state circuits conference (ISSCC), 2024, 372 doi: 10.1109/ISSCC49657.2024.10454276[109] Park G, Song S, Sang H, et al. 20.8 space-mate: a 303.5mw real-time sparse mixture-of-experts-based nerf-slam processor for mobile spatial computing. 2024 IEEE International Solid-State Circuits Conference (ISSCC), 2024, 374 doi: 10.1109/ISSCC49657.2024.10454487[110] Lee J, Lee S, Lee J, et al. GSCore: efficient radiance field rendering via architectural support for 3d gaussian splatting. Proceedings of the 29th ACM international conference on architectural support for programming languages and operating systems, 2024, 3, 497 doi: 10.1145/3620666.3651385[111] Wu L, Zhu H, He S, et al. GauSPU: 3d gaussian splatting processor for real-time slam systems. 2024 57th IEEE/ACM International Symposium on Microarchitecture (MICRO), 2024, 1562 doi: 10.1109/MICRO61859.2024.00114[112] Kong W, Hao Y, Guo Q, et al. Cambricon-d: full-network differential acceleration for diffusion models. 
2024 ACM/IEEE 51st annual international symposium on computer architecture (ISCA), 2024, 903 doi: 10.1109/ISCA59077.2024.00070[113] Guo R, Wang L, Chen X, et al. 20.2 a 28nm 74.34 tflops/w bf16 heterogenous cim-based accelerator exploiting denoising-similarity for diffusion models. 2024 IEEE international solid-state circuits conference (ISSCC), 2024, 362 doi: 10.1109/ISSCC49657.2024.10454308 -

Longke Yan received the B.S. degree from the School of Integrated Circuit Science and Engineering, University of Electronic Science and Technology of China, Chengdu, China, in 2024. He is currently pursuing the Ph.D. degree with the Department of Electronic and Computer Engineering, The Hong Kong University of Science and Technology, Hong Kong, China. His research interests include computer architecture, VLSI design, embodied AI, and algorithm-hardware co-design.

Fengbin Tu received the B.S. degree from the School of Electronic Engineering, Beijing University of Posts and Telecommunications, Beijing, China, in 2013, and the Ph.D. degree from the Institute of Microelectronics, Tsinghua University, Beijing, China, in 2019. Dr. Tu is currently an Assistant Professor at the Department of Electronic and Computer Engineering and the Associate Director of the Institute of Integrated Circuits and Systems, The Hong Kong University of Science and Technology, Hong Kong, China. He was a Postdoctoral Fellow at the AI Chip Center for Emerging Smart Systems (ACCESS), Hong Kong, China, from 2022 to 2023, and a Postdoctoral Scholar at the Scalable Energy-efficient Architecture Lab (SEAL), Department of Electrical and Computer Engineering, University of California, Santa Barbara, CA, USA, from 2019 to 2022. His research interests include AI chips, computing-in-memory, computer architecture, and reconfigurable computing. His Ph.D. thesis was recognized by the Tsinghua Excellent Dissertation Award. His AI chips ReDCIM and Thinker won the 2023 Top-10 Research Advances in China Semiconductors and the 2017 ISLPED Design Contest Award. His research has been published at top conferences and journals on integrated circuits and computer architecture, including ISSCC, JSSC, DAC, ISCA, and MICRO.
